Sometimes You Just Have to Ship

I’ve been in this software racket, depending on where you start counting, about 25 years now. I’ve been fortunate to work on a lot of different things in my career—embedded systems, custom hardware, shrinkwrap, web systems, software as a service, desktop, mobile, government contracts, government-adjacent contracts, startups, little companies, big companies, education, telecom, insurance, internal tools, external services, commercial, open-source, Microsoft-based, Apple-based, hosted on various unices, big iron, you name it. I think the only major “genres” of software I don’t have road miles on are console game dev and anything requiring a security clearance. If you can name a major technology used to ship software in the 21st century, I’ve probably touched it.

I don’t bring this up to humblebrag—although it is a kick to occasionally step back and take in the view—I bring it up because I’ve shipped a lot of “version one” products, and a lot of different kinds of “version ones”. Every project is different, every company and team are different, but here’s one thing I do know: No one is ever happy with their first version of anything. But how you decide what to be unhappy about is everything.

Because, sometimes you just have to ship.

Let’s back up and talk about Venture Capital for a second.

Something a lot of people intellectually know, but don’t fully understand, is that the sentences “I raised some VC” and “I sold the company” are the same sentence. It’s really, really easy to trick yourself into believing that’s not true. Sure, you have a great relationship with your investors now, but if they need to, they will absolutely prove to you that they’re calling the shots.

The other important thing to understand about VC is that it’s gambling for a very specific kind of rich person. And, mostly, that’s a fact that doesn’t matter, except—what’s the worst outcome when you’re out gambling? Losing everything? No. Then you get to go home, yell “I lost my shirt!” everyone cheers, they buy you drinks.

No, the worst outcome is breaking even.

No one wants to break even when they go gambling, because what was the point of that? Just about everyone, if they’re in danger of ending the night with the same number of dollars they started with, will work hard to prevent that—bet it all on black, go all-in on a wacky hand, something. Losing everything is so much better than passing on a chance to hit it big.

VC is no different. If you take $5 million from investors, the absolute last thing they want is that $5 million back. They either want nothing, or $50 million. Because they want the one that hits big, and a company that breaks even just looks like one that didn’t try hard enough. They’ve got that same $5 mil in ten places, they only need one to hit to make up for the other nine bottoming out.

And we’ve not been totally positive about VC here at Icecano, so I want to pause for a moment and say this isn’t necessarily a bad thing. If you went to go get that same $5 million as a loan from a bank, they’d want you to pay that back, with interest, on a schedule, and they’d want you to prove that you could do it. And a lot of the time, you can’t! And that’s okay. There’s a whole lot of successful outfits that needed that additional flexibility to get off the ground. Nothing wrong with using some rich people’s money to pay some salaries, build something new.

This only starts being a problem if you forget this. And it’s easy to forget. In my experience, depending on your founder’s charisma, you have somewhere between five and eight years. The investors will spend years ignoring you, but eventually they’ll show up, and want to know if this is a bust or a hit. And there’s only one real way to find out.

Because, sometimes you just have to ship.

This sounds obvious when you say it out loud, but to build something, you have to imagine it first. People get very precious around words like “vision” or “design intent”, but at the end of the day, there was something you were trying to do. Some problem to solve. This is why we’re all here. We’re gonna do this.

But this is never what goes out the door.

There’s always cut features, things that don’t work quite right, bugs wearing tuxedoes, things “coming soon”, abandoned dead-ends. From the inside, from the perspective of the people who built the thing, it always looks like a shadow of what you wanted to build. “We’ll get it next time,” you tell each other, “Microsoft never gets it right until version 3.”

The dangerous thing is, it’s really, really easy to only see the thing you built through the lens of what you wanted to build.

The less toxic way this manifests is to get really depressed. “This sucks,” you say, “if only we’d had more time.”

The really toxic way, though, is to forget that your customers don’t have the context you have. They didn’t see the pitch deck. They weren’t there for that whiteboard session where the lightbulbs all went on. They didn’t see the prototype that wasn’t ready to go just yet. They don’t know what you’re planning next. Critically—they didn’t buy in to the vision, they’re trying to decide if they’re going to buy the thing you actually shipped. And you assume that even though this version isn’t there yet, wherever “there” is, that they’ll buy it anyway because they know what’s coming. Spoiler: they don’t, and they won’t.

The trick is to know all this ahead of time. Know that you won’t ship everything, know that you have to pick a slice you actually do, given the time, or money, or other constraints.

The trick is to know the difference between things you know and things you hope. And you gotta flush those out as fast as you can, before the VCs start knocking. And the only people who can tell you are your customers, the actual customers, the ones who are deciding if they’re gonna hand over a credit card. All the interviews, and research, and prototypes, and pitch sessions, and investor demos let you hope. Real people with real money is how you know. As fast as you can, as often as you can.

The longer you wait, the more you refine, or “pivot”, or do another round of ethnography, the more you’re just finding new ways to hope, just wasting resources you could have used once you actually learned something.

Time’s up. Pencils down. Show your work.

Because, sometimes you just have to ship.

Reviews are a gift.

People spending money, or not, is a signal, but it’s a noisy one. Amazon doesn’t have a box where they can tell you “why.” Reviews are people who are actually paid to think about what you did, but without the bias of having worked on it, or the bias of spending their own money. They’re not perfect, but they’re incredibly valuable.

They’re not always fun. I’ve had work I’ve done written up on the real big-boy tech review sites, and it’s slightly dissociating to read something written by someone you’ve never met about something you worked on complaining about a problem you couldn’t fix.

Here’s the thing, though: they should never be a surprise. How much of a surprise the reviews are is how you know how well you did at keeping the bias, the vision, the hope, under control. The next time I ship a version one, I’m going to have the team write fake techblog reviews six months ahead of time, and then see how we feel about them, use that to fuel the last batch of duct tape.

What you don’t do is argue with them. You don’t talk about how disappointing it was, or how hard it was, or how the reviewers were wrong, how it wasn’t for them, that it’s immoral to write a bad review because think of the poor shareholders.

Instead, you do the actual hard work. Which you should have done already. Where you choose what to work on, what to cut. Where you put the effort into imagining how your customers are really going to react. What parts of the vision you have to leave behind to build the product you found, not the one you hoped for.

The best time to do that was a year ago. The second best time is now. So you get back to work, you stop tweeting, you read the reviews again, you look at how much money is left. You put a new plan together.

Because, sometimes you just have to ship.

Cyber-Curriculum

I very much enjoyed Cory Doctorow’s riff today on why people keep building torment nexii: Pluralistic: The Coprophagic AI crisis (14 Mar 2024).

He hits on an interesting point, namely that for a long time the fact that people couldn’t tell the difference between “science fiction thought experiments” and “futuristic predictions” didn’t matter. But now we have a bunch of aging gen-X tech billionaires waving dog-eared copies of Neuromancer or Moon is a Harsh Mistress or something, and, well…

I was about to make a crack that it sorta feels like high school should spend some time asking students “so, what do you think is going on with those robots in Blade Runner?” or the like, but you couldn’t actually show Blade Runner in a high school. Too much topless murder. (Whether or not that should be the case is beside the point.)

I do think we should spend some of that literary analysis time in high school English talking about how science fiction with computers works, but what book do you go with? Is there a cyberpunk novel without weird sex stuff in it? I mean, weird by high school curriculum standards. Off the top of my head, thinking about books and movies, Neuromancer, Snow Crash, Johnny Mnemonic, and Strange Days all have content that wouldn’t get past the school board. The Matrix is probably borderline, but that’s got a whole different set of philosophical and technological concerns.

Goes and looks at his shelves for a minute

You could make Hitchhiker work. Something from later Gibson? I’m sure there’s a Bruce Sterling or Rudy Rucker novel I’m not thinking of. There’s a whole stack of Ursula Le Guin everyone should read in their teens, but I’m not sure those cover the same things I’m talking about here. I’m starting to see why this hasn’t happened.

(Also, Happy π day to everyone who uses American-style dates!)

The Sky Above The Headset Was The Color Of Cyberpunk’s Dead Hand

Occasionally I poke my head into the burned-out wasteland where twitter used to be, and while doing so stumbled over this thread by Neal Stephenson from a couple years ago:

I had to go back and look it up, and yep: Snow Crash came out the year before Doom did. I’d absolutely have stuck this fact in Playthings For The Alone if I’d remembered, so instead I’m gonna “yes, and” my own post from last month.

One of the oft-remarked on aspects of the 80s cyberpunk movement was that the majority of the authors weren’t “computer guys” beforehand; they were coming at computers from a literary/artist/musician worldview which is part of why cyberpunk hit the way it did; it wasn’t the way computer people thought about computers—it was the street finding its own use for things, to quote Gibson. But a less remarked-on aspect was that they also weren’t gamers. Not just not computer games, but any sort of board games, tabletop RPGs.

Snow Crash is still an amazing book, but it was written at the last possible second where you could imagine a multi-user digital world and not treat “pretending to be an elf” as a primary use-case. Instead the Metaverse is sort of a mall? And what “games” there are aren’t really baked in, they’re things a bored kid would do at a mall in the 80s. It’s a wild piece of context drift from the world in which it was written.

In many ways, Neuromancer has aged better than Snow Crash, if for no other reason than that it’s clear that the part of The Matrix that Case is interested in is a tiny slice, and it’s easy to imagine Wintermute running several online game competitions off camera, whereas in Snow Crash it sure seems like The Metaverse is all there is; a stack of other big on-line systems next to it doesn’t jibe with the rest of the book.

But, all that makes Snow Crash really useful as a point of reference, because depending on who you talk to it’s either “the last cyberpunk novel”, or “the first post-cyberpunk novel”. Genre boundaries are tricky, especially when you’re talking about artistic movements within a genre, but there’s clearly a set of work that includes Neuromancer, Mirrorshades, Islands in the Net, and Snow Crash, that does not include Pattern Recognition, Shaping Things, or Cryptonomicon; the central aspect probably being “books about computers written by people who do not themselves use computers every day”. Once the authors in question all started writing their novels in Word and looking things up on the web, the whole tenor changed. As such, Snow Crash unexpectedly found itself as the final statement for a set of ideas, a particular mix of how near-future computers, commerce, and the economy might all work together—a vision with strong social predictive power, but unencumbered by the lived experience of actually using computers.

(As the old joke goes, if you’re under 50, you weren’t promised flying cars, you were promised a cyberpunk dystopia, and well, here we are, pick up your complimentary torment nexus at the front desk.)

The accidental predictive power of cyberpunk is a whole media thesis on its own, but it’s grimly amusing that all the places where cyberpunk gets the future wrong, it’s usually because the author wasn’t being pessimistic enough. The Bridge Trilogy is pretty pessimistic, but there’s no indication that a couple million people died of a preventable disease because the immediate ROI on saving them wasn’t high enough. (And there’s at least two diseases I could be talking about there.)

But for our purposes here, one of the places the genre overshot was this idea that you’d need a 3d display—like a headset—to interact with a 3d world. And this is where I think Stephenson’s thread above is interesting, because it turns out it really didn’t occur to him that 3d on a flat screen would be a thing, and assumed that any sort of 3d interface would require a head-mounted display. As he says, that got stomped the moment Doom came out. I first read Snow Crash in ’98 or so, and even then I was thinking none of this really needs a headset, this would all work fine on a decently-sized monitor.

And so we have two takes on the “future of 3d computing”: the literary tradition from the cyberpunk novels of the 80s, and then actual lived experience from people building software since then.

What I think is interesting about the Apple Cyber Goggles, in part, is that it feels like that earlier, literary take on how futuristic computers would work re-emerging and directly competing with the last four decades of actual computing that have happened since Neuromancer came out.

In a lot of ways, Meta is doing the funniest and most interesting work here, as the former Oculus headsets are pretty much the cutting edge of “what actually works well with a headset”, while at the same time, Zuck’s “Metaverse” is blatantly an older millennial pointing at a dog-eared copy of Snow Crash saying “no, just build this” to a team of engineers desperately hoping the boss never searches the web for “second life”. They didn’t even change the name! And this makes a sort of sense, there are parts of Snow Crash that read less like fiction and more like Stephenson is writing a pitch deck.

I think this is the fundamental tension behind the reactions to Apple Vision Pro: we can now build the thing we were all imagining in 1984. The headset is designed by cyberpunk’s dead hand; after four decades of lived experience, is it still a good idea?

Time Zones Are Hard

In honor of leap day, my favorite story about working with computerized dates and times.

A few lifetimes ago, I was working on a team that was developing a wearable. It tracked various telemetry about the wearer, including steps. As you might imagine, there was an option to set a Step Goal for the day, and there was a reward the user got for hitting the goal.

Skipping a lot of details, we put together a prototype to do a limited alpha test, a couple hundred folks out in the real world walking around. For reasons that aren’t worth going into, and are probably still under NDA, we had to do this very quickly; on the software side we basically had to start from scratch and have a fully working stack in 2 or 3 months, for a feature set that was probably at minimum 6-9 months worth of work.

There were a couple of ways to slice what we meant by “day”, but we went with the most obvious one, midnight to midnight. Meaning that the user had until midnight to hit their goal, and then at 12:00 am their steps for the day reset to 0.

Dates and Times are notoriously difficult for computers. Partly, this is because Dates and Times are legitimately complex. Look at a full date: “February 29th 2024, 11:00:00 am”. Every value there has a different base, a different set of legal values. Month lengths, 24 vs 12 hour times, leap years, leap seconds. It’s a big tangle of arbitrary rules. If you take a date and time, and want to add 1000 minutes to it, the value of the result is “it depends”. This gets even worse when you add time zones, and the time zone’s angry sibling, daylight saving time. Now, the result of adding two times together also depends on where you were when it happened. It’s gross!
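To make that “it depends” concrete, here is a minimal Python sketch. The helper names (`add_wall_clock`, `add_elapsed`) and the choice of Pacific Time on the night of the March 2024 daylight saving switch are illustrative assumptions, not anything from the project in this story. It adds the same 1000 minutes two ways: to the numbers on the wall clock, and to actual elapsed time.

```python
from datetime import datetime, timedelta, timezone
from zoneinfo import ZoneInfo

# Assumed zone for the example; US clocks sprang forward on 2024-03-10 at 2 am.
PACIFIC = ZoneInfo("America/Los_Angeles")


def add_wall_clock(start: datetime, minutes: int) -> datetime:
    # Python adds to the local clock fields and ignores any DST change
    # that happens in between.
    return start + timedelta(minutes=minutes)


def add_elapsed(start: datetime, minutes: int) -> datetime:
    # Do the arithmetic in UTC, where every minute is the same length,
    # then convert back to the original zone.
    as_utc = start.astimezone(timezone.utc)
    return (as_utc + timedelta(minutes=minutes)).astimezone(start.tzinfo)


start = datetime(2024, 3, 9, 20, 0, tzinfo=PACIFIC)  # 8 pm the night before
wall = add_wall_clock(start, 1000)
elapsed = add_elapsed(start, 1000)

print("wall clock:", wall.isoformat())
print("elapsed:   ", elapsed.isoformat())
```

The two answers land an hour apart, and both are defensible depending on what the caller meant by “1000 minutes later”. Which one is right is a product decision, not a math problem.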

But the other reason it’s hard to use dates and times in computers is that they look easy. Everyone does this every day! How hard can it be?? So developers, especially developers working on platforms or frameworks, tend to write new time handling systems from scratch. This is where I link to this internet classic: Falsehoods programmers believe about time.

The upshot of all that is that there’s no good standard way to represent or transmit time data between systems, the way there is with, say, floating point numbers, or even unicode multi-language strings. It’s a stubbornly unsolved problem. Java, for example, has three separate systems for representing dates and times built in to the language, none of which solve the whole problem. They’re all terrible, but in different ways.

Which brings me back to my story. This was a prototype, built fast. We aggressively cut features, anything that wasn’t absolutely critical went by the wayside. One of the things we cut out was Time Zone Support, and chose to run the whole thing in Pacific Time. We were talking about a test that was going to run about three months, which didn’t cross a DST boundary, and 95% of the testers were on the west coast. There were a handful of folks on the east coast, but, okay, they could handle their “day” starting and ending at 3am. Not perfect, but made things a whole lot simpler. They can handle a reset-to-zero at 3 am, sure.

We get ready to ship, to light the test run up.

“Great news!” someone says. “The CEO is really excited about this, he wants to be in the test cohort!”

Yeah, that’s great! There’s “executive sponsorship”, and then there’s “the CEO is wearing the device in meetings”. Have him come on down, we’ll get him set up with a unit.

“Just one thing,” gets casually mentioned days later, “this probably isn’t an issue, but he’s going to be taking it on his big publicized walking trip to Spain.”

Spain? Yeah, Spain. Turns out he’s doing this big charity walk thing with a whole bunch of other exec types across Spain, wants to use our gizmo, and at the end of the day show off that he’s hit his step count.

Midnight in California is 9am in Spain. This guy gets up early. Starts walking early. His steps are going to reset to zero every day somewhere around second breakfast.

Oh Shit.

I’m not sure we even said anything else in that meeting, we all just stood without a word, acquired a concerning amount of Mountain Dew, and proceeded to spend the next week and change hacking in time zone support to the whole stack: various servers, database, both iOS and Android mobile apps.

It was the worst code I have ever written, and of course it was so much harder to hack in after the fact in a sidecar instead of building it in from day one. But the big boss got his step count reward at the end of the day every day, instead of just after breakfast.

From that point on, whenever something was described as hard, the immediate question was “well, is this just hard, or is it ’time zone’ hard?”
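The reset problem in that story fits in a few lines of Python. This is a minimal illustrative sketch, not the project’s actual code: the function name `step_day`, the sample timestamps, and the May 2024 date are all made up for the example. The idea is that the “day” a step sample counts toward should be the calendar date in the wearer’s time zone, not the server’s.

```python
from datetime import datetime, timezone
from zoneinfo import ZoneInfo


def step_day(recorded_at_utc: datetime, user_tz: str):
    """Return the calendar date a step sample counts toward for this user."""
    return recorded_at_utc.astimezone(ZoneInfo(user_tz)).date()


# Midnight Pacific, seen from Spain (hypothetical date, both zones on summer time).
pacific_midnight = datetime(2024, 5, 15, 0, 0, tzinfo=ZoneInfo("America/Los_Angeles"))
seen_from_madrid = pacific_midnight.astimezone(ZoneInfo("Europe/Madrid"))
print(seen_from_madrid.strftime("%H:%M"))  # the hour the counter would reset in Spain

# Two samples an hour apart, either side of Pacific midnight.
before = datetime(2024, 5, 15, 6, 30, tzinfo=timezone.utc)  # 08:30 in Madrid
after = datetime(2024, 5, 15, 7, 30, tzinfo=timezone.utc)   # 09:30 in Madrid

# Pacific-only bucketing splits the walker's morning across two "days".
print(step_day(before, "America/Los_Angeles"), step_day(after, "America/Los_Angeles"))

# Bucketing by the wearer's own zone keeps the morning in one day.
print(step_day(before, "Europe/Madrid"), step_day(after, "Europe/Madrid"))
```

The function itself is trivial. The “time zone hard” part is that every server, database column, and mobile client has to agree on which zone each user is in, and that is much easier to decide on day one than to retrofit.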

Playthings For The Alone

Previously on Icecano: Space Glasses, Apple Vision Pro: New Ways to be Alone.

I hurt my back at the beginning of January. (Wait! This isn’t about to become a recipe blog, or worse, a late-90s AICN movie review. This is gonna connect, bear with me.) I did some extremely unwise “lift with your back, not your knees” while trying to get our neutronium-forged artificial Christmas tree out of the house. As usual for this kind of injury, that wasn’t the problem, the problem was when I got overconfident while full of ibuprofen and hurt it a second time at the end of January. I’m much improved now, but that’s the one that really messed me up. I have this back brace thing that helps a lot—my son has nicknamed it The Battle Harness, and yeah, if there was a He-Man character that was also a middle-aged programmer, that’s what I look like these days. This is one of those injuries where what positions are currently comfortable is an ever-changing landscape, but the consistent element has been that none of them allow me to use a screen and a keyboard at the same time. (A glance back at the Icecano archives since the start of the year will provide a fairly accurate summary of how my back was doing.)

One of the many results of this is that I’ve been reading way, way more reviews of the Apple Cyber Goggles than I was intending to. I’ve very much been enjoying the early reactions and reviews of the Apple Cyber Goggles, and the part I enjoy the most has been that I no longer need to have a professional opinion about them.

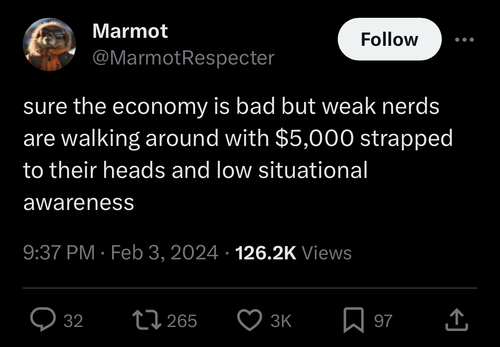

That’s a lie, actually my favorite part has been that as near as I can tell everyone seems to have individually invented “guy driving a cybertruck wearing vision pro”, which if I were Apple I’d treat as a 5-alarm PR emergency. Is anyone wearing these outside for any reason other than Making Content? I suspect not. Yet, anyway. Is this the first new Apple product that “normal people” immediately treated as being douchebag techbro trash? I mean, usually it gets dismissed as overly expensive hipster gear, but this feels different, somehow. Ed Zitron has a solid take on why: How Tech Outstayed Its Welcome.

Like jetpacks and hover cars before it, computers replacing screens with goggles is something science fiction decided was Going To Happen(tm). There’s a sense of inevitability about it, in the future we’re all going to use ski goggles to look at our email. If I was feeling better I’d go scare up some clips, but we’re all picturing the same things: Johnny Mnemonic, any cover art for any William Gibson book, that one Michael Douglas / Demi Moore movie, even that one episode of Murder She Wrote where Mrs. Potts puts on a Nintendo Power Glove and hacks into the Matrix.

(Also, personal sidebar: something I learned about myself the last couple of weeks is somehow I’ve led a life where in my mid-40s I can type “mnemonic” perfectly the first time every time, and literally can never write “johnny” without making a typo.)

But I picked jetpacks and hover cars on purpose—this isn’t something like Fusion Power that everyone also expects but stubbornly stays fifty years into the future, this is something that keeps showing up and turning out to not actually be a good idea. Jetpacks, it turns out, are kinda garbage in most cases? Computers, but for your face, was a concept science fiction latched on to long before anyone writing in the field had used a computer for much, and the idea never left. And people kept trying to build them! I used to work with a guy who literally helped build a prototype face-mounted PC during the Reagan administration, and he was far from the first person to work on it. Heck, I tried out a prototype VR headset in the early 90s. But the reality on the ground is that what we actually use computers for tends to be a bad fit for big glasses.

I have a very clear memory of watching J. Mnemonic the first time with an uncle who was probably the biggest science fiction fan of all time. The first scene where Mr. Mnemonic logs into cyberspace, and the screen goes all stargate as he puts on his ski goggles and flies into the best computer graphics that a mid-budget 1995 movie can muster, I remember my uncle, in full delight and without irony, yelling “that’s how you make a phone call!” He’d have ordered one of these on day one. But you know what’s kinda great about my actual video phone I have in my pocket he didn’t live to see? It just connects to the person I’m calling instead of making me fly around the opening credits of Tron first.

Partly because it’s such a long-established signifier of the future, and lots of various bits of science fiction have made them look very cool, there’s a deep, built-up reservoir of optimism and good will about the basic concept, despite many of the implementations being not-so-great. And because people keep taking swings at it, like jetpacks, face-mounted displays have carved out a niche where they excel. (For VR: games, mostly; for jetpacks: the opening of James Bond movies.)

And those niches have gotten pretty good! Just to be clear: Superhot VR is one of my favorite games of all time. I do, in fact, own an Oculus from back when they still used that name, and there’s maybe a half-dozen games where the head-mounted full-immersion genuinely results in a different & better experience than playing on a big screen.

And I think this has been one of the fundamental tensions around this entire space for the last 40 years: there’s a narrow band of applications where they’re clearly a good idea, but science fiction promised that these are the final form. Everyone wants to join up with Case and go ride with the other Console Jockeys. Someday, this will be the default, not just the fancy thing that mostly lives in the cabinet.

The Cyber Goggles keep making me think about the Magic Leap. Remember them? Let me tell you a story. For those of you who have been living your lives right, Magic Leap is a startup who’ve been working on “AR goggles” for some time now. Back when I had to care about this professionally, we had one.

The Leap itself was a pair of thick goggles. I think there was a belt pack with a cable? I can’t quite remember now. The lenses were circular and mostly clear. It had a real steampunk dwarf quality, minus the decorative gears. The central feature was that the lenses were clear, but had an embedded transparent display, so the virtual elements would overlay with real life. It was heavy, heavier than you wanted it to be, and the lenses had the quality of looking through a pair of very dirty glasses, or walking out of a 3D movie without giving the eyewear back. You could see, but you could tell the lenses were there. But it worked! It did a legitimately amazing job of drawing computer-generated images over the real world, and they really looked like they were there, and then you could dismiss them, and you were back to looking at the office through Thorin’s welding goggles.

What did it actually do, though? It clearly wasn’t sure. This was shortly after Google Glass, and it had a similar approach to being a stand-alone device running “cut down” apps. Two of them have stuck in my memory.

The first was literally Angry Birds. But it projected the level onto the floor of the room you were in, blocks and pigs and all, so you could walk around it like it was a set of discarded kids’ toys. Was it better than “regular” Angry Birds? No, absolutely not, but it was a cool demo. The illusion was nearly perfect, the scattered blocks really stayed in the same place.

The second was the generic productivity apps. Email, calendar, some other stuff. I think there was a generic web browser? It’s been a while. They lived in these virtual screens, rectangles hanging in air. You could position them in space, and they’d stay there. I took a whole set of these screens and arrayed them in the air about and around my desk. I had three monitors in real life, and then another five or six virtual ones, each of the virtual ones showing one app. I could glance up, and see a whole array of summary information. The resolution was too low to use them for real work, and the lenses themselves were too cloudy to use my actual computer while wearing them.

You can guess the big problem though: I couldn’t show them to anybody. Anything I needed to share with a coworker needed to be on one of the real screens, not the virtual ones. There’s nothing quite so isolating as being able to see things no one else can see.

That’s stuck with me all these years. Those virtual screens surrounding my desk. That felt like something. Now, Magic Leap was clearly not the company to deliver a full version of that, even then it was clear they weren’t the ones to make a real go of the idea. But like I said, it stuck with me. Flash forward, and Apple goes and makes that one of the signature features of their cyber goggles.

I can’t ever remember a set of reviews for a new product that were trying this hard to get past the shortcomings and like the thing they were imagining. I feel like most reviews of this thing can be replaced with a clip from that one Simpsons episode where Homer buys the first hover car, but it sucks, and Bart yells over the wind “Why’d you buy the first hover car ever made?”, to which Homer gleefully responds with “I know! It’s a hover car!” as the car rattles along, bouncing off the ground.

It’s clear what everyone suspected back over the summer is true; this isn’t the “real” product, this is a sketch, a prototype, a test article that Tim Apple is charging 4 grand to test. And, good for him, honestly. The “real” Apple Vision is probably rev 3 or 4, coming in 2028 or thereabouts.

I’m stashing the links to all the interesting ones I read here, mostly so I can find them again. (I apologize to those of you to whom this is a high-interest open tabs balance transfer.)

- Apple Vision Pro review: magic, until it’s not - The Verge

- Apple Vision Pro Review: The Best Headset Yet Is Just a Glimpse of the Future - WSJ

- Daring Fireball: The Vision Pro

- The Apple Vision Pro: A Review

- Michael Tsai - Blog - Apple Vision Pro Reviews

- Apple Vision Pro hands-on, again, for the first time - The Verge

- Hypercritical: Spatial Computing

- Manton Reece - Apple needs a flop

- Why Tim Cook Is Going All In on the Apple Vision Pro | Vanity Fair

- The Apple Vision Pro Is Spectacular and Sad - The Atlantic

- Daring Fireball: Simple Tricks and Nonsense

- All My Thoughts After 40 Hours in the Vision Pro — Wait But Why

But my favorite summary was from Today in Tabs The Business Section:

This is all a lot to read and gadget reviews are very boring so my summary is: the PDF Goggles aren’t a product but an expensive placeholder for a different future product that will hypothetically provide augmented reality in a way that people will want to use, which isn’t this way. If you want to spend four thousand dollars on this placeholder you definitely already know that, and you don’t need a review to find out.

The reviews I thought were the most interesting were the ones from Thompson and Zitron; they didn’t get review units, they bought them with their own money and with very specific goals of what they wanted to use them for, which then slammed into the reality of what the device actually can do:

Both of those guys really, really wanted to use their cyber goggles as a primary productivity tool, and just couldn’t. It’s not unlike the people who tried really hard to make their iPad their primary computer—you know who you are; hey man, how ya doing?—it’s possible, but barely.

There’s a definite set of themes across all that:

- The outward facing “eyesight” is not so great

- The synthetic faces for video calls are just as uncanny valley weird as we thought

- Watching movies is really, really cool—everyone really seems to linger on this one

- Big virtual screens for anything other than movies and other work seem mixed

- But why is this, though? “What’s the problem this is solving?”

Apple, I think at this point, has earned the benefit of the doubt when they launch new things, but I’ve spent the last week reading this going, “so that’s it, huh?” Because okay, it’s the thing from last summer. There’s no big “aha, this is why they made this”.

There’s a line from the first Verge hands-on, before the full review, where the author says:

I know what I saw, but I’m still trying to figure out where this headset fits in real life.

Ooh, ooh, pick me! I know! It’s a Plaything For The Alone.

They know—they know—that headsets like this are weird and isolating, but seem to have decided to just shrug, accept that as the cost of doing business. As much as they pay lip service to being able to dial into the real world, this is more about dialing out, about tuning out the world, about having an experience only you can have. They might not be by themselves, but they’re Alone.

A set of sci-fi sketches as placeholders for features, entertaining people who don’t have anyone to talk to.

My parents were over for the Super Bowl last weekend. Sports keep being cited as a key feature for the cyber goggles, cool angles, like you’re really there. I’ve never in my life watched sports by myself. Is the idea that everyone will have their own goggles for big games? My parents come over with their own his-and-hers headsets, we all sit around with these things strapped to our faces and pass snacks around? Really? There’s no on-court angle that’s better than gesturing wildly at my mom on the couch next to me.

How alone do you have to be to even think of this?

With all that said, what’s this thing for? Not the future version, not the science fiction hallucination, this thing you can go out and buy today? A Plaything for the Alone, sure, but to what end?

The consistent theme I noticed, across nearly every article I read, was that just about everyone stops and mentions that thing where you can sit on top of Mount Hood and watch a movie by yourself.

Which brings me back around to my back injury. I’ve spent the last month-and-change in a variety of mostly inclined positions. There’s basically nowhere to put a screen or a book that’s convenient for where my head needs to be, so in addition to living on ibuprofen, I’ve been bored out of my mind.

And so for a second, I got it. I’m wedged into the couch, looking at the TV at a weird angle, and, you know what? Having a screen strapped to my face that just oriented to where I could look instead of where I wanted to look sounded pretty good. Now that you mention it, I could go for a few minutes on top of Mount Hood.

All things considered, as back injuries go, I had a pretty mild one. I’ll be back to normal in a couple of weeks, no lasting damage, didn’t need professional medical care. But being in pain/discomfort all day is exhausting. I ended up taking several days off work, mostly because I was just too tired to think or talk to anyone. There’d be days where I was done before sundown; not that I didn’t enjoy hanging out with my kids when they got home from school, but I just didn’t have any gas left in the tank to, you know, say anything. I was never by myself, but I frequently needed to be alone.

And look, I’m lucky enough that when I needed to just go feel sorry for myself by myself, I could just go upstairs. And not everyone has that. Not everyone has something making them exhausted that gets better after a couple of weeks.

The question everyone keeps asking is “what problem are these solving?”

Ummm, is the problem cities?

When they announced this thing last summer, I thought the visual of a lady in the cramped IKEA demo apartment pretending to be out on a mountain was a real cyberpunk dystopia moment: life in the burbclaves got ya down? CyberGoggles to the rescue. But every office I ever worked in—both cube farms and open-office bullpens—was full of people wearing headphones. Trying to blot out the noise, literal and figurative, of the environment around them and focus on something. Or just be.

Are these headphones for the eyes?

Maybe I’ve been putting the emphasis in the wrong place. They’re a Plaything for the Alone, but not for those who are by themselves, but for those who wish they could be.

Apple Vision Pro: New Ways to be Alone

A man sits alone in an apartment. The apartment is small, and furnished with modern-looking inexpensive furniture. The furniture looks new, freshly installed. This man is far too old to be sitting in a small, freshly furnished apartment for any good or happy reason. Newly divorced? He puts on his Apple Vision Pro(tm) headset. He opens the photos app, and looks on as photos of his children fill the open space of an apartment no child has ever lived in. “Relive your happiest memories,” intones the cheerful narrator. The apartment is silent. It is one of the most quietly devastating short films I have ever seen. Apple Inc made this movie hoping it would convince you to buy their new headset. I am now hoping this man is only divorced, and not a widower. There is hope, because the fact that he has spent $3,500 on a headset strongly indicates he himself is the biggest tragedy in his own life.

The year is 2023. Apple would like to sell you a new way to be alone.

And there it is, the Apple Vision Pro. The hardware looks incredible. The software looks miraculous. The product is very, very strange.

Back when I worked in the Space Glasses racket, I used to half-joke that space glasses designers should just own how big the thing has to be and make them look like cyberpunk 80s ski goggles. Apple certainly leaned into that—not Space Glasses, but Cyber Goggles.

Let’s start with the least interesting thing: the Price. “Does Tim Apple really expect me to pay 3,500 bucks for cyber goggles?” No, he literally doesn't. More so than any other Apple product in recent memory, this is a concept car. The giveaway is the name: this is the Apple Vision Pro. The goal is to try things out and build up anticipation, so that in three years when they release the Apple Vision Air for 1,800 bucks they’ll sell like hotcakes.

Apple being Apple, of course, figured out a way to sell their concept car at retail.

Its status as a concept car goes a long way towards explaining many—but not all—of the very strange things about this product.

From a broad hardware/software features & functionality standpoint, this is close to what we were expecting. AR/Mixed Reality as the default operating mode, apps and objects appearing as if they were part of the real-life environment, hand gesture control, a focus on experiences and enhanced productivity, with games getting only a passing glance.

Of course, there were several things I did find surprising.

First, I didn’t expect it to be a standalone unit; I was really expecting a “phone accessory” like the Watch (or, arguably, like the Apple TV was to begin with). But no, for all intents and purposes, there’s an entire laptop jammed into a pair of goggles. That’s a hell of an impressive feat of industrial engineering.

I was certainly not expecting the “external screen showing your eyes.” That got rumored, and I dismissed it out of hand, because that’s crazy. But okay, as implemented, now I can see what they were going for.

One of the biggest social problems with space glasses—or cyber goggles—is how you as the operator can communicate to other people that you’re paying attention to cyberspace as opposed to meat space. Phones, laptops, books all solve this the same way—you point your face at them and are clearly looking at the thing, instead of the people around you.

Having the screen hide your eyes while in cyberspace certainly communicates which mode the operator is in and solves the “starting a fight by accident” problem.

Using eye tracking as a key UI interaction shouldn’t have been surprising, but was. I spent that whole part of the keynote slapping my forehead; _of course! Of course that’s how that would work!_

I expected games to get short shrift, but the lack of any sort of VR gaming attention at all really surprised me. Especially given that in the very same keynote they had actual real-life KOJIMA announcing that Death Stranding was coming to the Mac! Gaming is getting more attention at Apple than it’s gotten in years, and they just… didn’t talk about that with the headset?

Also strange was the lack of new “spatial” UIs? All the first party Apple software they showed was basically the same as on the Mac or iOS, just in a window floating in space. By comparison, when the Touch Bar launched, they went out of their way to show what every app they made used it for, from the useful (Final Cut’s scrub timeline, emoji pickers, predictive text options) to the mediocre (Safari’s tabs). Or Force Touch on the iPhone, for “right click” menus in iOS. Here? None of that. This is presumably a side effect of Apple’s internal secrecy and the schedule being such that they needed to announce it at the dev conference half a year before it shipped, but that’s strange. I was expecting at least a Final Cut Pro spatial interface that looks like an old-school Moviola, given they just ported FCP X to the iPad, and therefore presumably, the Vision.

Maybe the software group learned from all the time they poured into the Touch Bar & Force Touch. Or more likely, this was the first time most of the internal app dev groups got to see the new device, and are starting their UI designs now, to be ready for release with the device next year.

And so, if I may be so crude as to grade my own specific predictions:

- Extremely aware of its location in physical space, more so than just GPS, via both LIDAR and vision processing. Yes.

- Able to project UI from phone apps onto a HUD. Nope! Turns out, it runs locally!

- Able to download new apps by looking at a visual code. Unclear? Presumably this will work?

- Hand tracking and handwriting recognition as a primary input paradigm. Yes, although I missed the eye tracking. And a much stronger emphasis on voice input than I expected, although it’s obvious in retrospect.

- Spatial audio. Yes.

- Able to render near-photoreal "things" onto a HUD blended with their environment. Heck yes.

- Able to do real-time translation of languages, including sign language. Unclear at this time. Maybe?

But okay! Zooming out, they really did it—they built Tony Stark’s sunglasses. At least, as close as the bleeding edge of technology can get you here in 2023. It’s only going to get lighter and smaller from here on out.

And here’s the thing: this is clearly going to be successful. The median response from the people who got hands-on time last week has been very positive. It might not fly off the shelves, but it’ll do at least as well as the new Mac Pro, whose whole selling point is the highly advanced technology of “PCI slots”.

By the time the Apple Vision Air ships in 2027, they’ll have cut the weight and size of the goggles, and there’s going to be an ecosystem built up from developers figuring out how to build a Spatial UI for the community of early adopters.

I’m skeptical the Cyber Goggles form factor will replace the keyboard-screen laptop or iPhone as a daily driver, but this will probably end up with sales somewhere around the iPad Pro at the top of the B-tier, beloved by a significant but narrow user base.

But all that’s not even remotely the most interesting thing. The most interesting thing is the story they told.

As usual, Apple showed a batch of filmed demos and ads demonstrating “real world” use, representing their best take on what the headset is for.

Apple’s sweet spot has always been “regular, creative people who have things to do that they’d like to make easier with a computer.” Not “computers for computer’s sake”—that’s *nix, not “big enterprise capital-W Work”—that’s Windows. But, regular folks, going about their day, their lives being improved by some piece of Apple kit.

And their ads & demos always lean into the aspirational nature of this. Attractive young people dancing to fun music from their iPods! Hanging out in cool coffee shops with their MacBooks! Creative pros working on fun projects in a modern office with colorful computers! Yes! That all looks fun! I want to be those people!

Reader, let me put my cards directly out on the table: I do not want to be any of the people in the Apple Vision demos.

First, what kind of work are these people doing? Other than watching movies, they’re doing—productivity software? Reviewing presentations, reading websites, light email, checking messages. Literally Excel spreadsheets. And meetings. Reviewing presentations in a meeting. Especially for Apple, this is a strangely “corporate” vision of the product.

But more importantly, where are they? Almost always, they’re alone.

Who do we see? A man, alone, looking at photos. A woman, alone in her apartment, watching a movie. Someone else, alone in a hotel room, reviewing a work presentation with people who are physically elsewhere. Another woman alone in a hotel room using FaceTime to talk to someone—her mother? “I miss you!” she says in one of the few audible pieces of dialog. A brief scene of someone playing an Apple Arcade game, alone in a dark room. A man in an open floor-plan office, reading webpages and reading email, turns the dial to hide his eyes from his coworkers. A woman on a flight pulls her headset on to tune out the other people on the plane.

Alone, alone, alone.

Almost no one is having fun. Almost no one is happy to be where they are. They’re doing Serious Work. Serious, meaning no one is creating anything, just reviewing and responding. Or consuming. Consuming, and wishing they were somewhere, anywhere, else.

It’s a sterile, corporate vision of computing, where we use computers to do, basically, what IBM would have imagined in the 1970s. A product designed _by_ and for upper middle management at large corporations. Work means presentation, spreadsheets, messages, light email.

Sterile, and with a grim undercurrent of “we know things are bad. We know you can’t afford an apartment big enough for the TV you want, or get her to take you back, or have the job you wanted. But at least you can watch Avatar while pretending to be on top of a mountain.”

And with all these apps running on the space glasses, no custom UIs. Just, your existing apps floating in a spectral window, looking mostly the same.

Effectively, no games. There was a brief shot of someone playing something with a controller in a hovering window? But nothing that used the unique capabilities of the platform. No VR games. No Beat Saber, No Man’s Sky, Superhot, Half-Life: Alyx. Even by Apple standards, this is a poor showing.

Never two headsets in the same place. Just one, either alone, or worn by someone trying to block out their surroundings.

The less said about the custom deepfake FaceTime golems, the better.

And, all this takes place in a parallel world untouched by the pandemic. We know this product was already well along before anyone had heard of COVID, and it’s clear the last three years didn’t change much about what they wanted to build. This is a product for a world where “Remote Work” means working from a hotel on a trip to the customer. The absolute best use case for the product they showed was to enable Work From Home in apartments too small to have a dedicated office space, but Apple is making everyone come back to the office, and they can’t even acknowledge that use.

There are ways to be by yourself without being alone. They could have shown a DJ prepping their next set, a musician recording music, an artist building 3D models for a game. Instead, they chose presentations in hotels and photos in dark, empty apartments.

I want to end the same way they ended the keynote, with that commercial. A dad with long hair is working while making his daughter toast. This is more like it! I am this Dad! I’ve done exactly this! With close to that hair!

And by the standards they’ve already set, this is much better! He’s interacting with his kids while working. He’s working on his Surf Shop! By which we mean he’s editing a presentation to add some graphics that were sent to him.

But.

That edit couldn’t wait until you made your kid toast? It’s toast, it doesn’t take that long. And he’s not designing a surfboard, he’s not even building a presentation about surfboards, he’s just adding art someone sent him to a presentation that already exists.

His kid is staring at a screen with a picture of her dad’s eyes, not the real thing. And not to put too fine a point on it, but looking at his kid without space glasses in the way is the moment Darth Vader stopped being evil. Tony Stark took his glasses off when he talked to someone.

I can already do all that with my laptop. And when I have my laptop in the kitchen, when my daughter asks what I’m working on, I can just gesture to the screen and show her. I can share.

This is a fundamentally isolating view of computing, one where we retreat into unsharable private worlds, where our work email hovers menacingly over the kitchen island.

No one ever looks back at their life and thinks, “thank goodness I worked all those extra hours instead of spending time with my kids.” No one looks back and celebrates the times they made a presentation at the same time as lunch. No one looks back and smiles when they think of all the ways work has wormed into every moment, eroding our time with our families or friends, making sure we were never present, but always thinking about the next slide, the next tab, the next task.

No one will think, “thank goodness I spent three thousand five hundred dollars so I had a new way to be alone.”

Space Glasses

Wearable Technology, for your face

Once computers got small enough that “wearable technology” was a thing we could talk about with a straight face, glasses were an obvious form factor. Eye glasses were already the world’s oldest wearable technology! But glasses are tricky. For starters, they’re small. But also, they already work great at what they do, a nearly peerless piece of accessibility technology. They last for years, work on all kinds of faces, work in essentially any environment you can think of, and can seamlessly treat any number of conditions simultaneously. It’s not immediately obvious what value there is in adding electricity and computers. My glasses already work great, why should I need to charge them, exactly? Plus, if you need glasses you need them. I can drive home if my watch crashes, I can’t go anywhere if my glasses break.

There’s a bit in the Hitchhiker’s Guide to the Galaxy which has sort of lost its context now, about how goofy digital watches were, considering they didn’t do anything that clockwork watches couldn’t do except “need new batteries.” Digital Glasses have that problem, but more so.

So instead smartphones happened, and then smart watches.

But still, any number of companies have tried to sell you a computer you strap to your head and over your eyes. Mostly, these exist on an axis between 3d headsets, a form factor that mostly froze somewhere around the Virtual Boy in the early 90s, and the Google Glass, which sounded amazing if you never saw or wore one. Now it looks like Apple is “finally” going to lift the curtain on their version of a VR/AR glasses headset.

A couple of lifetimes ago, I worked with smart glasses. Specifically, I was on the team that shipped Level Smart Glasses, along with a bunch of much more interesting stuff that was never released. For a while, I was a major insurance company’s “Lead Engineer for Smart Glasses”. (“Hey, what can I tell ya? It was the best of times, it was the worst of times. Truthfully, I don’t think about those guys that much since all that stuff went down.”)

I spent a lot of time thinking about what a computer inside your glasses could do. The terminology slid around a lot. “Smart Glasses.” “Wearable Tech.” “Digital Eyewear.” “Smart Systems.” “VR headsets.” “Reality Goggles.”

I needed a name that encompassed the whole universe of head-mounted wearable computing devices. I called them Space Glasses. Internally at least, the name stuck.

Let me tell you about Space Glasses.

Let’s Recap

Traditionally there have been two approaches to a head-mounted computer.

First, you have the VR Headset. This broke out into the mainstream in the early 90s with products like Nintendo’s Virtual Boy, but also all those “VR movies” (Johnny Mnemonic, Disclosure, Lawnmower Man, Virtuosity) and a whole host of game initiatives lost to time. (Who else remembers System Shock had a VR mode? Or Magic Carpet?)

On the other hand, you have the Heads Up Display, which from a pop-culture perspective goes back to mid 80s movies like Terminator or Robocop, and maybe all the way back to Razor Molly in Neuromancer. These stayed fictional while the VR goggles thrashed around. And then Google Glass happened.

Google Glass was a fantastic pitch followed up by a genuinely terrible product. I was at CES a couple years back, and there’s an entire cottage industry of people trying to ship a product that matches the original marketing for Glass.

Glass managed to be the best and worst thing that could have happened to the industry. It demonstrated that such a thing was possible, but did it in a way that massively repulsed most of the population.

My Glass story goes like this: I was at a convention somewhere in the greater Silicon Valley area, probably the late lamented O’Reilly Velocity. I’m getting coffee before the keynote. It’s the usual scrum of folks milling around a convention center lobby, up too early, making small talk with strangers. And there’s the guy. Very valley software engineer type, pasty, button down shirt. Bit big, a real husky guy. And he’s staring at me. Right at me, eyes drilling in. He’s got this look. This look. I have no idea who he is, I look up, make eye contact. He keeps staring with that expression. And for a split second, I think, “Well, huh, I guess I’m about to get into a fistfight at a convention.” Because everything about this guy’s expression says he’s about to take a swing. Then he reaches up and taps his Google glasses. And I realize that he had no idea I was there, he was reading email. And that’s when I knew that product was doomed. Because pulling out your phone and staring at it serves as an incredibly valuable social indicator that you’re using a device. With a seamless heads-up display like Glass, there was no way to communicate when you were reading Twitter as opposed to staring down a stranger.

Which is a big part of why everyone wearing them became glassholes.

Plus, you looked like a massive, unredeemable dork. To mis-quote a former boss of mine, no product is going to work if it’ll make it harder for you to get laid, and Glass was the most effective form of birth control known to lifekind.

Underreported between the nuclear-level dorkiness and the massive privacy concerns was the fact that Glass was incredibly uncomfortable to wear for more than a couple of minutes at a time.

Despite that, the original Glass pitch is compelling, and there’s clearly a desire to find an incarnation of the idea that doesn’t set off the social immune system.

Glass and better-made Virtual Boys aren’t the only ways to go, though.

Spectrums of Possibilities

There are a lot of ways to mount a microprocessor to someone’s head. I thought of all the existing space glasses form factors operating on two main orthogonal axes, or spectrums. I’ll spare you the 2x2 consultant chart, and just describe them:

- With a screen, or without. There are plenty of other sensors or ways to share information with the wearer, but “does it have a screen or heads-up-display” is a key differentiator.

- All Day wear vs Single Task wear. Do you wear them all the time, like prescription spectacles, or do you put them on for a specific time and reason, like sunglasses?

There are also two lesser dimensions I mention for completeness:

- Headset-style design vs “normal” glasses design. This is more a factor of the current state of miniaturization than a real design choice. Big headsets are big only because they can’t fit all that in a package that looks like a pair of Ray-Ban Wayfarers. Yet. You can bet the second that the PS VR can look like the Blues Brothers’ sunglasses, it will.

- VR vs AR. If you have a screen, does the picture replace the real world completely, or merge with it? While this is a pretty major difference now—think VR headset vs Google Glass—like the above this is clearly a quirk of an immature technology. It won’t take long before any mature product can do both, and swap between them seamlessly.

What do we use them for, though?

This is all well and good, but what are the use cases, really?

On the “no screen” side of the house: not much. Those are, fundamentally, regular “dumb” non-electric glasses. Head-mounted sensors are interesting, but not interesting enough on their own to make anyone remember to charge another device. People did some interesting things using sound instead of vision (Bose, for example) but ultimately, the correct form factor for an audio augmented reality device is AirPods.

Head-mounted sensors, on their own, are interesting. You get very different, and much cleaner, data than from a watch or a phone in a pocket, mostly because you have a couple million years of biological stabilization working for you, instead of against you. Plus, they’re open to the air, they have the same “sight-lines” as the operator, and they have direct skin contact.

But not interesting enough to get someone to plug their glasses in every night.

With a screen, then, or some kind of heads-up display.

For all-day wear, it’s hard to imagine something compelling enough to be successful. Folks who need prescriptions have already hired their glasses to do something very specific, and folks who don’t need corrective eyewear will, rounding to the nearest significant digit, never wear spectacles all day if they don’t need to.

Some kind of heads-up display is, again, sort of interesting, but does anyone really want their number of unread emails hovering in their peripheral vision at all times?

I saw a very cool demo once where the goggles used the video camera, some face recognition technology, and a database to essentially overlay people’s business cards—their name & title—under their faces. “Great for people who can’t remember names!” And, like, that’s a cool demo, and great you could pull that off, but buddy, I think you might be mistaking your own social anxiety for a product market just a little bit. And man, if you think you’re awkward at social events when you can’t remember someone’s name, I hate to break it to you, but reading their name off your cyber goggles is not going to help things.

For task-based wear, the obvious use remains games. Games, and game-like “experiences”: see what this couch looks like in your own living room, and the like. There are some interesting cases around 3d design, being able to interact with an object under design as if it was really there.

So, essentially, we’ve landed on VR goggles, which have been sputtering right on the edge of success for close to 30 years now, assuming we only start counting with the Virtual Boy.

There are currently at least three flavors of game-focused headwear—Meta’s Quest (the artist formerly known as the Oculus), Sony’s PlayStation VR, and Valve’s Index. Nearby, you have things like Microsoft’s HoloLens and Magic Leap which are the same thing but “For Business”, and another host of similar devices I can’t think of. (Google Cardboard! Nintendo Labo VR!)

But, fundamentally, these are all the same—strap some screens directly to your eyes and complete a task.

And, that’s a pretty decent model! VR goggles are fun, certainly in short bursts. Superhot VR is a great game!

Let’s briefly recap the still-unsolved challenges.

First, they’re all heavy, uncomfortable, and expensive. These are the sort of problems that Moore’s Law and economies of scale will solve assuming people keep pouring money in, so we can largely write those off.

Second, you look like a dork when you wear these. In addition to having half a robot face, reacting to things no one else can see looks deeply, deeply silly. There is no less-attractive person than a person playing a VR game.

Which brings us to the third, and hardest problem: VR goggles as they exist today are fundamentally isolating.

An insufficiently acknowledged truth is that at their core, computers and their derivatives are fundamentally social devices. Despite the pop-culture archetype of the lone hacker, people are constantly waving people over to look at what’s on their screen, passing their phone around, trading the controller back and forth. Console games might be “single player,” but they’re rarely played by one person.

VR goggles deeply break this. You can’t drop in and look over someone’s shoulder when they have the headwear, easily pass the controller back and forth, have a casual game night.

Four friends on a couch playing split screen Mario Kart is a very, very different game than four friends each with a headset strapped over their eyes.

Not an unsolvable set of problems, but space glasses that don’t solve for these will never break out past a niche market.

AR helps this a lot. The most compelling use for AR to date is still Pokemon Go, using the phone’s camera to show Pokemon out in the real world. Pokemon Go was a deeply social activity when it was at its peak, nearly sidestepping all the isolating qualities AR/VR tends to have.

Where do they fit?

At this point, it’s probably worth stepping back and looking at a slightly bigger picture. What role do space glasses fill, or fill better than the other computing technology we have?

Everyone likes to compare the introduction of new products to the smartphone, but that isn’t a terribly useful comparison; the big breakthrough there was to realize that it was possible to demote “making phone calls” to an app instead of a whole device, and then make a computer with that app on it small enough to hold in your hand.

The watch is a better example. Wristwatches are, fundamentally, information radiators. Classic clockwork based watches radiated a small set of information all the time. The breakthrough was to take that idea and run with it, and use the smart part of smart watches to radiate more and different kinds of information. Then, as a bonus, pack some extra human-facing sensors in there. Largely, anything that tried to expand the watch past an information radiator has not gone so well, but adding new kinds of information has.

What about glasses then? Regular eyeglasses help you see things you couldn’t otherwise see. In the case of prescription glasses, they bring things into focus. Sunglasses help you see things in other environments. Successful smart glasses will take this and run with it, adding more and different things you can see.

Grasping towards Conclusions

Which all (conveniently) leads us to what I think is the best theoretical model for space glasses—Tony Stark’s sunglasses.

They essentially solve for all of the above problems. They look good—ostentatious but not unattractive. It’s obvious when he’s using them. While on, they offer the wearer an unobstructed view of the world with a detailed display overlaid. Voice controlled.

And, most critically, they’re presented as an interface to a “larger” computer somewhere else—in the cloud, or back at HQ. They’re a terminal. They don’t replace the computer, they replace the monitor.

And that’s where we sit today. Some expensive game hardware, and a bunch of other startups and prototypes. What’s next?

Space Glasses, Apple Style

What, then, about Apple?

From the rumor mill, it seems clear that they had multiple form factors in play over the course of their headset project, but they seem to have settled on the larger VR goggles/headset style that most everyone else has also landed on.

It also seems clear that this has been in the works for a while, with various hints and seemingly imminent announcements. Personally, I was convinced that this was going to be announced in 2020, and there were a bunch of talks at WWDC that year that seemed to have an empty space where “and you can do this on the goggles!” was supposed to go.

And of course that tracks with the rumor that Apple was all in on a VR headset, which then got shot down by Jony Ive, and they pivoted to AR. Which jibes with the fact that Apple made a big developer play into AR/VR back in 2017, and then just kinda... let it sit. And now Ive is out and they seem to be back to a headset?

What will they be able to do?

Famously, Apple also never tells people what's coming... but they do often send signals out to the developer community so they can get ready ahead of time. (The definitive example was the year they rolled out the ability for iOS apps to support multiple screen sizes 6 months before they shipped a second size of phone.)

So. Some signals from over the last couple of years that seem to be hinting at what their space glasses can do. (In the parlance of our times, it's time for some Apple glasses kremlinology game theory!)

ARKit's location detection. ARKit can now use a combination of the camera, Apple Maps data, and the iPad's LIDAR to get a crazy accurate physical location in real space. There's no reason to get hyper-accurate device location for an iPad. But for a head-mounted display, with a HUD...?

Not to mention some very accurate people occlusion and detection in AR video.

RealityKit, meanwhile, has some insane AR composition tools, which also leverage the LIDAR camera from the iPad, and can render essentially photo-real objects into the "real world".

Meanwhile, some really interesting features on the AirPods, like spatial audio in AirPods Pro. Spatial audio has been out for a while now, and seems like the sort of thing you try once and then forget about? A cool demo. But, it seems like a way better idea if, when you turn your head, you can also see what’s making the sounds?

Opening up the AirPods API: "AirPods Pro Motion API provides developers with access to orientation, user acceleration, and rotational rates for AirPods Pro — ideal for fitness apps, games, and more." Did anyone make apps for AirPods? But as a basic API for head-tracking?

Widgets! A few versions back, Apple rolled out a way to do Konfabulator-esque (or, if you'd rather, Android-style) widgets for the iOS home screen. There are some strong indications that these came out of the Apple Watch team (codenamed chrono, built around SwiftUI), and may have been intended as a framework for custom watch faces. But! A lightweight way to take a slice of an app and "project" a minimal UI as part of a larger screen? That's perfect for a glasses-based HUD. I can easily see allowing iOS widgets to run on the glasses with no extra modifications on top of what the developer had to do to get them running on the home screen. Day 1 of the product and you have a whole app store full of ready-to-go HUD components.

App Clips! On the one hand, it's "QR codes, but by Apple!" On the other hand, what we have here is a way to load up an entire app experience by just looking at a picture. Seems invaluable for a HUD+camera form factor? Especially a headset with a strong AR component, where looking at elements in AR space downloads new features?

Hand and pose tracking. As part of the greater ML/Vision frameworks, they rolled out crazy-accurate hand tracking, using their on-device ML. Check out the demo at 6:40 in this developer talk.

Which is pretty cool on its own except they ALSO rolled out:

Handwriting detection. Scribble is the new-and-improved iPad+pencil handwriting detector, and there's some room for a whole bunch of Newton jokes here. But mixed with the hand tracking? That's a terribly compelling interaction paradigm for a HUD-based device. Just write in the air in front of you, and the space glasses turn that into text on the fly.

And related, iOS 14 added ML detection and real-time translation of sign language. (?!)

Finally, there's a strong case to be made that the visual overhaul they gave macOS 11 and iOS 14 is about making it more "AR-friendly", which would be right about the last time the goggles were rumored to be close to shipping.

In short, this points to a device:

- Extremely aware of its location in physical space, more so than just GPS, via both LIDAR and vision processing.

- Able to project UI from phone apps onto a HUD.

- Able to download new apps by looking at a visual code.

- Hand tracking and handwriting recognition as a primary input paradigm.

- Spatial audio.

- Able to render near-photoreal "things" onto a HUD blended with their environment.

- Able to do real-time translation of languages, including sign language.

From a developer-story standpoint, this seems likely to operate like the watch did at first. Tethered to a phone, which drives most of the processing power and projects the UI elements onto the glasses screen.

What are they For?

What they can do is all well and good, but what’s the pitch? Those are all features, or parts of features. Speeds and Feeds, which isn’t Apple’s style. What will Apple say they’re for?

The Modern-era (Post-NeXT) Apple doesn’t ship anything without a story. Which is good, more companies should spend the effort to build a story about why you need this, what this new thing is for, how it fits into your life. What problems you have that this solves.

The iPod was “carry all your music with you all the time”.

The iPhone was the classic “three devices” in one.

The iPod Touch struggled with “the iPhone, but without a phone!”, but landed on “the thing you buy your kids to play games before you’re willing to buy them their own phone.”

The iPad was “your phone, but bigger!”

The Watch halfheartedly tried to sell itself as an enhanced communication device (remember the heartbeat thing?) before realizing it was a fitness device.

AirPods were “how great would it be if your earbuds didn’t have wires? Also, check out this background noise reduction.”

The HomePod is “a speaker you can yell requests at.”

So, what will the Space Glasses be?

For anyone else, the obvious play would be games, but games just aren’t a thing Apple is willing to be good at. There’s pretty much a straight line from letting Halo, made by Mac developers, become a huge hit as an Xbox exclusive to this story from Panic’s Cabel Sasser about why Untitled Goose Game is on every platform except the Mac App Store.

This is not unlike their failures to get their pro audio/video apps out into the Hollywood ecosystem. Both require a level of coöperation with other companies that Apple has never been willing to do.

Presumably, they’ll announce some VR games to go on the Apple Glasses. The No Man’s Sky team is strongly hinting they’ll be there, so, okay? That’s a great game, but a popular VR-compatible game from six years ago is table stakes. Everyone else already has that. What’s new?

They’ve never treated games as a primary feature of a new platform. Games are always an “oh yeah, them too” feature.

What, then?

I suspect they’ll center around “Experiences”. VR/AR environments. Attend a live concert like you’re really there! Music is the one media type Apple is really, really good at, so I expect them to lean heavily into that. VR combined with AirPods-style spatial audio could be compelling? (This would be easier to believe if they were announcing the goggles at their music event in September instead of WWDC.)

Presumably this will have a heavily social component as well—attend concerts with your family from out of town. Hang out in cyberspace! Explore the Pyramids with your friends!

There’s probably also going to be a remote-but-together shared workspace thing. Do your Zoom meetings in VR instead of staring at the Brady Bunch credits on your laptop.

There’s probably also going to be a whole “exciting new worlds of productivity” where basic desktop use gets translated to VR/AR. Application windows floating in air around your monitor! Model 3D objects with your hands over your desk!

Like the Touch Bar before it, what’s really going to be interesting here is which first-party apps get headset support on day one. What’s the big demo from the built-in apps? Presumably, Final Cut gets a way to edit 360 video in 360, but what else? Can I spread my desktop throughout the volume of my office? Can I write an email by waving my hands in empty space?

Anyway.

The whole time I was being paid to think about Space Glasses, Apple was the Big Wave. The Oncoming Storm. We knew they were going to release something, and if anyone could make it work, it would be them. I spent hours on hours trying to guess what they would do, so we could either get out ahead or get out of the way.

I’m so looking forward to finding out what they were really building all that time.