And Another Thing: Pianos

I thought I had said everything I had to say about that Crush ad, but… I keep thinking about the Piano.

One of the items crushed by the hydraulic press into the new iPad was an upright piano. A pretty nice-looking one! There was some speculation at first about how much of that ad was “real” vs CG, but since the apology didn’t include Apple reassuring everyone that it wasn’t a real piano, I have to assume they really did sacrifice a perfectly good upright piano for a commercial. Which is sad, and stupid expensive, but not the point.

I grew up in a house with, and I swear I am not making this up, two pianos. One was an upright not unlike the one in the ad; that piano has since found a new home, and lives at my uncle’s house now. The other piano is a gorgeous baby grand. It’s been the centerpiece of my parents’ living room for forty-plus years now. It was the piano in my mom’s house when she was a little girl, and I think it belonged to her grandparents before that. If I’m doing my math right, it’s pushing 80 or so years old. It hasn’t been tuned since the mid-90s, but it still sounds great. The pedals are getting a little soft, there’s some “battle damage” here and there, but it’s still incredible. It’s getting old, but barring any Looney Tunes–style accidents, it’ll still be helping toddlers learn Chopsticks in another 80 years.

My point is: This piano is beloved. My cousins would come over just so they could play it. We’ve got pictures of basically every family member for four generations sitting at, on, or around it. Everyone has played it. It’s currently covered in framed pictures of the family, in some cases with pictures of little kids next to pictures of their parents at the same age. When estate planning comes up, as it does from time to time, this piano gets as much discussion as just about everything else combined. I am, by several orders of magnitude, the least musically adept member of my entire extended family, and even I love this thing. It’s not a family heirloom so much as a family member.

And are some ad execs in Cupertino really suggesting I replace all that with… an iPad?

I made the point about how fast Apple obsoletes things last time, so you know what? Let’s spot them that, and while we’re at it, let’s spot them the question of how long that battery will keep working. Hell, let’s even spot them the “playing notes that sound like a piano” part of being a piano, just to be generous.

Are they seriously suggesting that I can set my 2-year-old down on top of the iPad to take the camera from my dad to take a picture while my mom shows my 4-year-old how to play chords? That we’re all going to stand in front of the iPad to get a group shot at Thanksgiving? That the framed photos of the wedding are going to sit on top of the iPad? That there will be tense negotiations about who inherits the iPad?

No, of course not.

What made that ad so infuriating was that they weren’t suggesting any such thing, because it never occurred to them. They just thought they were making a cute ad, but instead they (accidentally?) perfectly captured the zeitgeist.

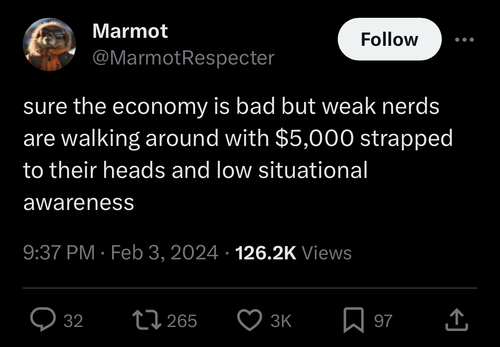

One of the many reasons why people are fed up with “big tech” is that as “software eats the world” and tries to replace everything, it doesn’t actually replace everything. It just replaces the top line thing, the thing in the middle, the thing that’s easy. And then abandons everything else that surrounds it. And it’s that other stuff, the people crowded around the piano, the photos, that really actually matters. You know, culture. Which is how you end up with this “stripping the copper out of the walls” quality the world has right now; it’s a world being rebuilt by people whose lives are so empty they think the only thing a piano does is play notes.

Crushed

What’s it look like when a company just runs out of good will?

I am, of course, talking about the ad Apple made, and then apologized for, where a hydraulic press smashes things down and reveals… the new iPad!

The Crush ad feels like a kind of inflection point, because a few years ago this would have gone over fine. Maybe a few grumps would have grouched about it, but you can imagine most people would have taken it in good humor; there would have been a lot of tweets on the theme of “look, what they meant was…”

Ahhh, that’s not how this one went, is it? It’s easy to understand why some folks felt so angry; my initial response was more along the lines of “yeaaah, read the room.”

As more than one person pointed out, Apple’s far from the first company to use this metaphor to talk about a new smaller product; Nintendo back in the 90s, Nokia in ’08. And, look, first of all, “Nokia did it” isn’t the quality of defense you think it is, and second, I don’t know guys, maybe some stuff has happened over the last fifteen years to change the relationship artists have with big tech companies?

Apple has built up a lot of good will over the last couple of decades, mostly by making nice stuff that worked for regular people, without being obviously an ad or a scam, some kind of corporate nightmare, or a set of unserious tinkertoys that still doesn’t play sound right.

They’ve been withdrawing from that account quite a lot over the last decade: weird changes, the entire App Store “situation”, the focus on subscriptions and “services”. Squandering 20 years of built-up good will on “not fixing the keyboards.” And you couple that with the state of the whole tech industry: everyone knows Google doesn’t work as well as it used to, email is all spam, you can’t answer the phone anymore because a robot is going to try and rip you off, everyone is drowning in scam text messages, Amazon is full of bootleg junk, Etsy isn’t hand-made anymore, social media is all bots and fascists; most of the things that made tech fun or exciting a decade or more ago have rotted out. And then, as every other tech company falls over itself to gut the entirety of the arts and humanities to feed them into their Plagiarism Machines so techbros don’t have to pay artists, Apple, the “intersection of technology and liberal arts”, goes and does this? Et tu?

I picture last week as the moment Apple looked down and realized, Wile E. Coyote style, that they were standing out in mid-air, having walked off the edge of their accumulated good will.

On the one hand, no, that’s not what they meant; it was misinterpreted. But on the other hand, yes, maybe it really was what they meant, and the people making it just hadn’t realized the degree to which they were saying the quiet part out loud.

Because a company smashing beautiful tools that have worked for decades to reveal a device that’ll stop being eligible for software updates in a few years is the perfect metaphor for the current moment.

Last Week In Good Sentences

It’s been a little while since I did an open tab balance transfer, so I’d like to tell you about some good sentences I read last week.

Up first, old-school blogger Jason Kottke links to a podcast conversation between Ezra Klein and Nilay Patel in The Art of Work in the Age of AI Production. Kottke quotes a couple of lines that I’m going to re-quote here because I like them so much:

EZRA KLEIN: You said something on your show that I thought was one of the wisest, single things I’ve heard on the whole last decade and a half of media, which is that places were building traffic thinking they were building an audience. And the traffic, at least in that era, was easy, but an audience is really hard. Talk a bit about that.

NILAY PATEL: Yeah first of all, I need to give credit to Casey Newton for that line. That is something — at The Verge, we used to say that to ourselves all the time just to keep ourselves from the temptations of getting cheap traffic. I think most media companies built relationships with the platforms, not with the people that were consuming their content.

“Building traffic instead of an audience” sums up the last decade and change of the web perfectly. I don’t even have anything to add there, just a little wave and “there you go.”

Kottke ends by linking out to The Revenge of the Home Page in The New Yorker, about the web starting to climb back towards a pre-social media form. And that’s a thought that’s clearly in the air these days, because fellow old-school blogger Andy Baio linked to We can have a different web.

I have this theory that we’re slowly reckoning with the amount of cognitive space that was absorbed by twitter. Not “social media”, but twitter, specifically. As someone who still mostly consumes the web via his RSS reader, and has the whole time, I’ve had to spend a lot of time re-working my feeds over the last several months, because I didn’t realize how many of them had rotted away; I hadn’t noticed because those sites had been posting their updates as tweets.

Twitter absorbed so much oxygen, and so much stuff migrated from “other places” onto twitter in a way that didn’t happen with other social media systems. And now twitter is mostly gone, and all that creativity and energy is out there looking for new places to land.

If you’ll allow me a strained metaphor, last summer felt like last call before the party at twitter fully shut down; this summer really feels like that next morning, where we’ve all shaken off the hangover and now everyone is looking at each other over breakfast asking “okay, what do you want to go do now?”

Jumping back up the stack to Patel talking about AI for a moment, a couple of extra sentences:

But these models in their most reductive essence are just statistical representations of the past. They are not great at new ideas. […] The human creativity is reduced to a prompt, and I think that’s the message of A.I. that I worry about the most, is when you take your creativity and you say, this is actually easy. It’s actually easy to get to this thing that’s a pastiche of the thing that was hard, you just let the computer run its way through whatever statistical path to get there. Then I think more people will fail to recognize the hard thing for being hard.

(The whole interview is great, you should go read it.)

But that bit about ideas and reducing creativity to a prompt brings me to my last good sentences, in this depressing-but-enlightening article over on 404 Media: Flood of AI-Generated Submissions ‘Final Straw’ for Small 22-Year-Old Publisher

A small publisher for speculative fiction and roleplaying games is shuttering after 22 years, and the “final straw,” its founder said, is an influx of AI-generated submissions. […] “The problem with AI is the people who use AI. They don't respect the written word,” [founder Julie Ann] Dawson told me. “These are people who think their ‘ideas’ are more important than the actual craft of writing, so they churn out all these ‘ideas’ and enter their idea prompts and think the output is a story. But they never bothered to learn the craft of writing. Most of them don't even read recreationally. They are more enamored with the idea of being a writer than the process of being a writer. They think in terms of quantity and not quality.”

And this really gets to one of the things that bothers me so much about The Plagiarism Machine: the sheer, raw entitlement. Why shouldn’t they get to just have an easy copy of something someone else worked hard on? Why can’t they just have the respect of being an artist, while bypassing the work it takes to earn it?

My usual metaphor for AI is that it’s asbestos, but it’s also the art equivalent of steroids in pro sports. Sure, you hit all those home runs or won all those races, but we don’t care; we choose to live in a civilization where those don’t count, where those are cheating.

I know several people who have become enamored with the Plagiarism Machines over the last year (as I imagine all of us do by now), and I’m always struck by a couple of things whenever they accidentally show me their latest works:

First, they’re always crap, just absolute dogshit garbage. And I think to myself, how did you make it to adulthood without being able to tell what’s good or not? There’s a basic artistic media literacy that’s just missing.

Second, how did we get to the point where you’ve got the nerve to be proud that you were cheating?

Antiderivatives

I’ve been thinking all week about this MacBook Air review by Paul Thurrott: Apple MacBook Air 15-Inch M3 Review - Thurrott.com (via Daring Fireball).

For a little bit of context, Thurrott has spent most of the last couple of decades as The Windows Guy. I haven’t really kept up with the folks in the Windows ecosystem now that I’m not in that ecosystem as much anymore, so it’s wild to see him give a glowing review to the MacBook.

It’s a great review, and his observations really mirrored mine when I made the switch fifteen years or so ago (“you mean, I can just close the lid, and that works?”). And it’s interesting to see what the MacBook looks like from someone who still has a Windows accent. But that’s not what I keep thinking about. What I keep thinking about is this little aside in the middle:

From a preload perspective, the MacBook Air is bogged down with far too many Apple apps, just as iPhones and iPads are. And I’m curious that Apple doesn’t get dinged on this, though you can, at least, remove what you don’t want, and some of the apps are truly useful. Sonoma includes over 30 (!) apps, and while none are literally crapware, many are gratuitous and unnecessary. I spent quite a bit of time cleaning that up, but it was a one-time job. And new users should at least examine what’s there. Some of these apps—Safari, Pages, iMovie, and others—are truly excellent and may surprise you. Obviously, I also installed a lot of the third-party apps I need.

And this is just the perfect summary of the difference in Operating System Philosophy between Redmond and Cupertino.

Microsoft has always taken the world-view that the OS exists to provide a platform for other people to sell you things. A new PC with just the Microsoft OS of the day (DOS, Windows 3, Win 95, Windows 11, whichever) is basically worthless. That machine won’t do anything for you that you want to do; you have to go buy more software from what Microsoft calls an “Independent software vendor” or from them, but they’re not gonna throw Word in for free, that’s crazy. PCs are a platform to make money.

Apple, on the other hand, thinks of computers as appliances. You buy a computing device from Apple, a Mac, iPhone, whatever, out of the box that’ll do nearly everything you need it to do. All the core needs are met, and then some. Are those apps best-in-class? In some cases, yes, but mostly if you need something better you’re probably a professional and already know that. They’re all-in-one appliances, and 3rd party apps are bonus value, but not required.

And I think this really strikes at the heart of a lot of the various anti-monopoly regulatory cases swirling around Apple, and Google, and others. Not all, but a whole lot of them boil down to basically, “Is Integration Bad?” Because one of the core principles of the last several decades of tech product design has been essentially “actually, it would be boss if movie studios owned their own theatres”.

And there’s a lot more to it than that, of course, but also? Kinda not. We’ve been doing this experiment around tightly integrated product design for the last couple of decades, and how do “we” (for certain values of “we”) feel about it?

I don’t have a strong conclusion here, so this feels like one of those posts where I end by either linking to Libya is a land of contrasts or the dril tweet about drunk driving.

But I think there’s an interesting realignment happening, and you can smell the winds shifting around what kinds of tradeoffs people are willing to accept. So maybe I’ll just link to this instead?

Hardware is Hard

Friday’s post was, of course, a massive subtweet of Humane’s “Ai” Pin, which finally made it out the door to what can only be described as “disastrous” reviews.

We’ve been not entirely kind to Humane here at Icecano, so I was going to sort of discreetly ignore the whole situation, the way you would someone who fell down a flight of stairs at a party but was already getting the help they needed. But now we’re going on a week and change of absolutely excruciating discourse about whether it’s okay to give bad products bad reviews. It’s the old “everything gets a 7” school of video game reviews, fully metastasized.

And, mostly, it’s all bad-faith garbage. There’s always a class of grifter who thinks the only real crime is revealing the grift.

Just to be crystal clear: the only responsibility a critic or reviewer of any kind has is to the audience, never to the creators, and even less to the creator’s shareholders.

But also, we’re at the phase in the cycle where a certain kind of tech bro climbs out of the woodwork and starts saying things like “hardware is hard.” And it is! I’ve worked on multiple hardware projects, and they were all hard, incredibly hard. I once watched someone ask the VP of Hardware Engineering “do the laws of physics even allow that?” and the answer was a half-grin followed by “we’re not sure!”

I hate to break it to you, but hard work isn’t an incantation to deflect criticism. Working hard on something stupid and useless isn’t the brag you think it is.

Because, you know what’s harder? Not having a hundred million dollars plus of someone else’s money to play with interest-free for years on end. They were right about one thing though: we did deserve better.

Sometimes You Just Have to Ship

I’ve been in this software racket, depending on where you start counting, about 25 years now. I’ve been fortunate to work on a lot of different things in my career: embedded systems, custom hardware, shrinkwrap, web systems, software as a service, desktop, mobile, government contracts, government-adjacent contracts, startups, little companies, big companies, education, telecom, insurance, internal tools, external services, commercial, open-source, Microsoft-based, Apple-based, hosted on various Unices, big iron, you name it. I think the only major “genres” of software I don’t have road miles on are console game dev and anything requiring a security clearance. If you can name a major technology used to ship software in the 21st century, I’ve probably touched it.

I don’t bring this up to humblebrag—although it is a kick to occasionally step back and take in the view—I bring it up because I’ve shipped a lot of “version one” products, and a lot of different kinds of “version ones”. Every project is different, every company and team are different, but here’s one thing I do know: No one is ever happy with their first version of anything. But how you decide what to be unhappy about is everything.

Because, sometimes you just have to ship.

Let’s back up and talk about Venture Capital for a second.

Something a lot of people intellectually know, but don’t fully understand, is that the sentences “I raised some VC” and “I sold the company” are the same sentence. It’s really, really easy to trick yourself into believing that’s not true. Sure, you have a great relationship with your investors now, but if they need to, they will absolutely prove to you that they’re calling the shots.

The other important thing to understand about VC is that it’s gambling for a very specific kind of rich person. And, mostly, that’s a fact that doesn’t matter, except: what’s the worst outcome when you’re out gambling? Losing everything? No. Then you get to go home, yell “I lost my shirt!”, everyone cheers, and they buy you drinks.

No, the worst outcome is breaking even.

No one wants to break even when they go gambling, because what was the point of that? Just about everyone, if they’re in danger of ending the night with the same number of dollars they started with, will work hard to prevent that—bet it all on black, go all-in on a wacky hand, something. Losing everything is so much better than passing on a chance to hit it big.

VC is no different. If you take $5 million from investors, the absolute last thing they want is that $5 million back. They either want nothing, or $50 million. Because they want the one that hits big, and a company that breaks even just looks like one that didn’t try hard enough. They’ve got that same $5 mil in ten places; they only need one to hit to make up for the other nine bottoming out.

And we’ve not been totally positive about VC here at Icecano, so I want to pause for a moment and say this isn’t necessarily a bad thing. If you went to go get that same $5 million as a loan from a bank, they’d want you to pay that back, with interest, on a schedule, and they’d want you to prove that you could do it. And a lot of the time, you can’t! And that’s okay. There are a whole lot of successful outfits that needed that additional flexibility to get off the ground. Nothing wrong with using some rich people’s money to pay some salaries, build something new.

This only starts being a problem if you forget this. And it’s easy to forget. In my experience, depending on your founder’s charisma, you have somewhere between five and eight years. The investors will spend years ignoring you, but eventually they’ll show up, and want to know if this is a bust or a hit. And there’s only one real way to find out.

Because, sometimes you just have to ship.

This sounds obvious when you say it out loud, but to build something, you have to imagine it first. People get very precious around words like “vision” or “design intent”, but at the end of the day, there was something you were trying to do. Some problem to solve. This is why we’re all here. We’re gonna do this.

But this is never what goes out the door.

There are always cut features, things that don’t work quite right, bugs wearing tuxedoes, things “coming soon”, abandoned dead-ends. From the inside, from the perspective of the people who built the thing, it always looks like a shadow of what you wanted to build. “We’ll get it next time,” you tell each other, “Microsoft never gets it right until version 3.”

The dangerous thing is, it’s really, really easy to only see the thing you built through the lens of what you wanted to build.

The less toxic way this manifests is to get really depressed. “This sucks,” you say, “if only we’d had more time.”

The really toxic way, though, is to forget that your customers don’t have the context you have. They didn’t see the pitch deck. They weren’t there for that whiteboard session where the lightbulbs all went on. They didn’t see the prototype that wasn’t ready to go just yet. They don’t know what you’re planning next. Critically—they didn’t buy in to the vision, they’re trying to decide if they’re going to buy the thing you actually shipped. And you assume that even though this version isn’t there yet, wherever “there” is, that they’ll buy it anyway because they know what’s coming. Spoiler: they don’t, and they won’t.

The trick is to know all this ahead of time. Know that you won’t ship everything, know that you have to pick a slice you can actually do, given the time, or money, or other constraints.

The trick is to know the difference between things you know and things you hope. And you gotta flush those out as fast as you can, before the VCs start knocking. And the only people who can tell you are your customers, the actual customers, the ones who are deciding if they’re gonna hand over a credit card. All the interviews, and research, and prototypes, and pitch sessions, and investor demos let you hope. Real people with real money is how you know. As fast as you can, as often as you can.

Every extra month you wait, every round of refining, or “pivoting”, or another round of ethnography, is just finding new ways to hope, and wasting resources you could have used once you actually learned something.

Time’s up. Pencils down. Show your work.

Because, sometimes you just have to ship.

Reviews are a gift.

People spending money, or not, is a signal, but it’s a noisy one. Amazon doesn’t have a box where customers can tell you “why.” Reviews come from people who are actually paid to think about what you did, but without the bias of having worked on it, or the bias of spending their own money. They’re not perfect, but they’re incredibly valuable.

They’re not always fun. I’ve had work I’ve done written up on the real big-boy tech review sites, and it’s slightly dissociating to read something written by someone you’ve never met about something you worked on complaining about a problem you couldn’t fix.

Here’s the thing, though: they should never be a surprise. How much the reviews surprise you is how you know how well you did at keeping the bias, the vision, the hope, under control. The next time I ship a version one, I’m going to have the team write fake techblog reviews six months ahead of time, and then see how we feel about them, and use that to fuel the last batch of duct tape.

What you don’t do is argue with them. You don’t talk about how disappointing it was, or how hard it was, or how the reviewers were wrong, how it wasn’t for them, that it’s immoral to write a bad review because think of the poor shareholders.

Instead, you do the actual hard work. Which you should have done already. Where you choose what to work on, what to cut. Where you put the effort into imagining how your customers are really going to react. What parts of the vision you have to leave behind to build the product you found, not the one you hoped for.

The best time to do that was a year ago. The second best time is now. So you get back to work, you stop tweeting, you read the reviews again, you look at how much money is left. You put a new plan together.

Because, sometimes you just have to ship.

“And Then My Reward Was More Crunch”

For reasons that are probably contextually obvious, I spent the weekend diving into Tim Cain’s YouTube Channel. Tim Cain is still probably best known as “the guy who did the first Fallout,” but he spent decades working on phenomenal games. He’s semi-retired these days, and rather than write memoirs, he’s got a “stories from the old days” YouTube channel, and it’s fantastic.

Fallout is one of those bits of art that seems to accrete urban legends. One of the joys of his channel has been having one of the people who was really there say “let me tell you what really happened.”

One of the more infamous beats around Fallout was that Cain and the other leads of the first Fallout left partway through development of Fallout 2 and founded Troika Games. What happened there? Fallout was a hit, and from the outside it’s always been baffling that Interplay just let the people who made it… walk out the door?

I’m late to this particular party, but a couple months ago Cain went on the record with what happened:

and a key postscript:

Listening To My Stories With Nuance

…And oh man, did that hit me where I live, because something very similar happened to me once.

Several lifetimes ago, I was the lead on one of those strange projects that happen in corporate America where it absolutely had to happen, but it wasn’t considered important enough to actually put people or resources on it. We had to completely retool a legacy system by a hard deadline or lose a pretty substantial revenue stream, but it wasn’t one of the big sexy projects, so my tiny team basically got told to figure it out and left alone for the better part of two years.

Theoretically the lack of “adult supervision” gave us a bunch of flexibility, but in practice it was a huge impediment every time we needed help or resources or infrastructure. It came down to the wire, but we pulled it off, mostly by sheer willpower. It was one of those miracles you can sometimes manage to pull off; we hit the date, stayed in budget, and produced a higher-quality system with more features that was easier to maintain and build on. Not only that, but the transition from the old system to the new went off with barely a ripple, and we replaced a system that was constantly falling over with one that, last I heard, was still running on years of 100% uptime. The end was nearly a year-long sprint, barely getting it over the finish line. We were all exhausted; I was about ready to die.

And the reward was: nothing. No recognition, no bonus, no time off, the promotion that kept getting talked about evaporated. Even the corp-standard “keep inflation at bay” raise was not only lower than I expected but lower than I was told it was going to be; when I asked about that, the answer was “oh, someone wrote the wrong number down the first time, don’t worry about it.”

I’m, uh, gonna worry about it a little bit, if that’s all the same to you, actually.

Morale was low, is what I’m saying.

But the real “lemon juice in the papercut” moment was the next project. We needed to do something similar to the next legacy system over, and now, armed with the results of the past two years, I went in to talk about how that was going to go. I didn’t want to do that next one at all, and said so. I also thought maybe I had earned the right to move up to one of the projects that people did care about? But no, we really want you to run this one too. Okay, fine. It’s nice to be wanted, I guess?

It was, roughly, four times as much work as the previous one, and it needed to get done in about the same amount of time. Keeping in mind we barely made it the first time, I said, okay, here’s what we need to do to pull this off, here’s the support I need, the people, here’s my plan to land this thing. There’s always more than one way to get something done: I needed either more time or more people, and I had some underperformers on the team I needed rotated out. And I got told, no, you can’t have any version of that. We have a hard deadline, you can’t have any more people, you have to keep the dead weight. Just find a way to get four times as much work done with what you have in less time. Maybe just keep working crazy hours? All with a tone that I can’t possibly know what I’m talking about.

And this is the part of Tim Cain’s story I really vibrated with. I had pulled off a miracle, and the only reward was more crunch. I remember sitting in my boss’s boss’s office, thinking to myself “why would I do this? Why would they even think I would say yes to this?”

Then, they had the unmitigated gall to be surprised when I took another job offer.

I wasn’t the only person that left. The punchline, and you can probably see this coming, is that it didn’t ship for years after that hard deadline and they had to throw way more people on it after all.

But, okay, other than general commiserating with an internet stranger about past jobs, why bring all this up? What’s the point?

Because this is exactly what I was talking about on Friday in Getting Less out of People. Because we didn’t get a whole lot of back story with Barry. What’s going on with that guy?

The focus was on getting Maria to be like Barry, but does Barry want to be like Barry? Does he feel like he’s being taken advantage of? Is he expecting a reward and then a return to normal while you’re focusing on getting Maria to spend less time on her novel and more time on unpaid overtime? What’s he gonna do when he realizes that what he thinks is “crunch” is what you think is “higher performing”?

There’s a tendency to think of productivity like a ratchet; more story points, more velocity, more whatever. Number go up! But people will always find an equilibrium. The key to real success is to figure out how to provide that equilibrium to your people, because if you don’t, someone else will.

Getting Less out of People

Like a lot of us, I find myself subscribed to a whole lot of substack-style newsletter/blogs1 written by people I used to follow on twitter. One of these is The Beautiful Mess by John Cutler, which is about (mostly software) product management. I was doing a lot of this kind of work a couple of lifetimes back, but this is one of the resources that has stayed in my feeds, because even when I disagree it’s at least usually thoughtful and interesting. For example, these two links I’ve had sitting in open tabs for a month (they’re a short read, but I’ll meet you on the other side of these links with a summary):

TBM 271: The Biggest Untapped Opportunity - by John Cutler

TBM 272: The Biggest Opportunity (Part 2) - by John Cutler

He makes a really interesting point that I completely disagree with. His thesis is that the biggest untapped opportunity for companies is the people who are only doing good work, but give off the indications that they could be doing great work. “Skilled Pragmatists” he calls them—people who do good work, but aren’t motivated to go “above and beyond”, and are probably bored but are getting fulfillment outside of work. Not risk takers, not big on conflict, probably don’t say a lot in meetings. And most importantly, people who have decided to not step it all the way up; they’ve got agency, and they’ve deployed it.

In a truly world-class, Olympic-level feat of accidentally revealing gender bias, he posits two hypothetical workers, “Maria” who is the prototypical “Skilled Pragmatist” and by comparison, “Barry”, who takes a lot of big risks but gets a lot of big things done.

He then kicks around some reasons why Maria might do what she does, and proposes some frameworks for figuring out how to, bluntly, get more out of those people, how to “achieve more together”.

Not to use too much tech industry jargon here, but my response to this is:

HAHAHAHA, Fuck You, man.

Because let’s back way the hell up. The hypothetical situation here is that things are going well. Things are getting done on time, the project isn’t in trouble, the company isn’t in trouble, there aren’t performance problems of any kind. There’s no promotion in the wings, no career goals that aren’t being achieved. Just a well-performing, non-problematic employee who gets her job done and goes home on time. And for some reason, this is a problem?

Because, I’ll tell you what, I’ll take a team of Marias over a team of Barrys any day.

Barry is going to burn out. Or he’s going to get mad he isn’t already in charge of the place and quit. Or get into one too many fights with a VP with a lot of political juice. Or just, you know, meet someone outside of work. Have a kid. Adopt a pet. That’s a fragile situation.

Maria is dependable, reliable. She’s getting the job done! She’s not going to burn out, or get pissed and leave because the corporate strategy changed and suddenly the thing she’s getting her entire sense of self-worth from has been cancelled. She’s not working late? She’s taking her kids to sports, or spending time with her wife, or writing a novel. She’s balanced.

The issue is not how do we get Maria to act more like Barry, it’s the other way around—how do we get Barry to find some balance and act more like Maria?

I’ve been in the software development racket for a long time now, and I’ve had a lot of conversations with people I was either directly managing, implicitly managing, or mentoring, and I can tell you I’ve had a lot more conversations that boiled down to “you’re working too hard” than I’ve had “you need to step it up.”

Maybe the single most toxic trait of tech industry culture is to treat anything less than “over-performing” as “under-performing”. There are underperformers out there, and I’ve met a few. But I’ve met a whole lot more overperformers who are all headed for a cliff at full speed. In my experience, the real gems are the solid performers with a good sense of balance. They’ve got hobbies, families, whatever.

Overperformers are the sort of people who volunteer to monitor the application logs over the weekend to make sure nothing goes wrong. Balanced performers are the ones that build a system where you don’t have to. They’re doing something with the kids this weekend, so they engineer up something that doesn’t need that much care and feeding.

I suspect a lot of this is based on the financialization of everything—line go up is good! More story points, more features, ship more, more quickly. It’s the root mindset that settled on every two weeks being named a “sprint” instead of an “increment.” Must go faster! Faster, programmer! Kill, Kill!

And as always, that works for a while. It doesn’t last, though. Sure, we could ship that in June instead of September. But you’re also going to have to hire a whole new team afterwards, because everyone is going to have quit after what we had to do to get it out in June. No one ever thinks about the opportunity costs of burning out the team and needing a new one when they’re trying to shave a few weeks off the schedule.

Is it actually going to matter if we ship in August?

Because, most of the time, most places, it’s not a sprint, and never will be. It’s a marathon, a long drive, a garden. Imagine what we could be building if everyone was still here five, ten, fifteen years from now! If we didn’t burn everyone out trying to “achieve more”.

Because, here’s the secret. Those overperformers? They’re going to get tired. Maybe not today, maybe not tomorrow. But eventually. And sooner than you think. And they’re going to realize that no one at the end of their life looks back and thinks, “thank goodness we landed those extra story points!” Those underperformers? They actually won’t get better. Not for you. It’s rude, but true.

The only people who you’re still going to have are the balanced people, the “skilled pragmatists”, the Marias. They’ve figured out how to operate for the long haul. Let’s figure out how to get more people to act like them. And they want to work in a place that values going home on time.

Let’s figure out how to get less out of people.

-

I feel like we need a better name for these, since substack went and turned itself into the “nazi bar”. It’s funny to me that as the social medias started imploding, “email newsletters” were the new hotness everyone seemed to land on. But they’re just blogs? Blogs that email themselves to you when they publish? I mean, substack and its ilk also have RSS feeds; I read all the ones I’m subscribed to in NetNewsWire, not my email client.

Of course, the big innovation was “blog with out-of-the-box support for subscriptions and a configurable paywall” which is nothing to sneeze at, but I don’t get why email was the thing everyone swarmed around?

Did Google Reader really crater so hard that we’ve settled on reading our favorite websites as emails? What?

Electronics Does What, Now?

A couple months back, jwz dug up this great interview of Bill Gates conducted by Terry Pratchett in 1996, which includes this absolute gem:

TP: OK. Let's say I call myself the Institute for Something-or-other and I decide to promote a spurious treatise saying the Jews were entirely responsible for the Second World War and the Holocaust didn't happen. And it goes out there on the Internet and is available on the same terms as any piece of historical research which has undergone peer review and so on. There's a kind of parity of esteem of information on the Net. It's all there: there's no way of finding out whether this stuff has any bottom to it or whether someone has just made it up.

BG: Not for long. Electronics gives us a way of classifying things. You will have authorities on the Net and because an article is contained in their index it will mean something. For all practical purposes, there'll be an infinite amount of text out there and you'll only receive a piece of text through levels of direction, like a friend who says, "Hey, go read this", or a brand name which is associated with a group of referees, or a particular expert, or consumer reports, or the equivalent of a newspaper... they'll point out the things that are of particular interest. The whole way that you can check somebody's reputation will be so much more sophisticated on the Net than it is in print today.

“Electronics gives us a way of classifying things,” you say?

One of the most maddening aspects of this timeline we live in is that all our troubles were not only “foreseeable”, but actually, actively “foreseen”.

But we already knew that; that’s not why this has been, as they say, living rent-free in my head. I keep thinking about this because it’s so funny.

First, you just have the basic absurdity of Bill Gates and Terry Pratchett in the same room, that’s just funny. What was that even like?

Then, you have the slightly sharper absurdity of PTerry saying “so, let me exactly describe 2024 for you” and then BillG waves his hands and is all “someone will handle it, don’t worry.” There’s just something so darkly funny about BillG patronizing Terry Pratchett of all people, whose entire career was built around imagining ways people could misuse systems for their own benefit. Just a perfect example of the people who understood people doing a better job predicting the future than the people who understood computers. It’s extra funny that it wasn’t thaaat long after this he wrote his book satirizing the news?

Then, PTerry fails to ask the really obvious follow-up question, namely “okay great, who’s gonna build all that?”

Because, let’s pause and engage with the proposal on its own merits for a second. That’s a huge system Bill is proposing that “someone” is gonna build. Who’s gonna build all that, Bill? Staff it? You? What’s the business model? Is it going to be grassroots? That’s probably not what he means, since this is the mid-90s and MSFT still thinks that open source is a cancer. Instead: magical thinking.

Like the plagiarism thing with AI, there’s just no engagement with the fact that publishing and journalism have been around for literally centuries and have already worked out most of the solutions to these problems. Instead, we had guys in business casual telling us not to worry about bad things happening, because someone in charge will solve the problem, all while actively setting fire to the systems that were already doing it.

And it’s clear there’s been no thought to “what if someone uses it in bad faith”. You can tell that even in ’96, Terry is getting more email chain letters than Bill was.

But also, it’s 1996, baby, the ship has sailed. The fuse is lit, and all the things that are making our lives hard now are locked in.

But mostly, what I think is so funny about this is that Terry is talking to the wrong guy. Bill Gates is still “Mister Computer” to the general population, but “the internet” happened in spite of his company, not due to any work they actually did. Instead, very shortly after this interview, Bill’s company is going to get shanked by the DOJ for trying to throttle the web in its crib.

None of this “internet stuff” is going to center around what Bill thinks is going to happen, so even if he was able to see the problem, there wasn’t anything he could do about it. The internet was going well before MICROS~1 noticed, and routed around it and kept going. There were some Stanford grad students Terry needed to get to instead.

But I’m sure Microsoft’s Electronic System for classifying reputation will ship any day now.

I don’t have a big conclusion here other than “Terry Pratchett was always right,” and we knew that already.

Apple Report Card, Early 2024

So, let’s take a peek behind the ol’ curtain here at icecano dot com. I’ve got a drafts folder on my laptop—which for reasons which are unlikely to become clear at the moment—is named “on deck”, and when I come across something that might be a blog post but isn’t yet, I make a new file and toss it in. Stray observations, links, partially-constructed jokes, whatever. A couple lifetimes back, these probably would have just been tweets? Instead, I kind of let them simmer in the background until I have something to do with them. For example, this spent two months as a text file containing only the phrase “that deranged rolling stone list”. I have a soft rule that after about three months they get moved to another folder named “never mind.”

And so, over the last couple of months that drafts pile had picked up a fair number of stray Apple-related observations. There’s been a lot going on! Lawsuits, the EU, chat protocols, shenanigans galore. So I kept noting down bits and bobs, but no coherent takes or anything. There was no structure. Then, back in February, Six Colors published their annual “Apple Report Card”.

Every year for the last decade or so, SixColors has done an “Apple Report Card”, where they poll a panel on a variety of Apple-related topics and get a sense of how the company is doing, or at least perceived as doing. This year was: Apple in 2023: The Six Colors report card. There are a series of categories, where the panel grades the company on an A-F scale, and adds commentary as desired.

The categories are a little funny, because they make a lot of sense for a decade ago but aren’t quite what I’d make them in 2024, but having longitudinal data is more interesting than revising the buckets. And it’s genuinely interesting to see how the aggregate scores have changed over the years.

And, so, I think, aha, there’s the structure, I can wedge all these japes into that shape, have a little fun with it. Alert readers will note this was about when I hurt my back, so I wasn’t in shape to sit down and write a longer piece for a while there, so the lashed-together draft kinda floated along. Finally, this week, I said to myself, I said, look, just wrap that sucker up and post it, it’s not like there’s gonna be any big Apple news this week!

Let me take a big sip of coffee and check the news, and then let’s go ahead and add a category up front so we can talk about the antitrust lawsuit, I guess?

The Antitrust Lawsuit, I Guess: F

This has been clearly coming for a while, as the antitrust and regulatory apparatus continues to slowly awaken from its long slumber.

At first glance, I have a very similar reaction to this as I had to the Microsoft Antitrust thing back in the 90s, in that:

- This action is long overdue

- The company in question should have seen this coming and easily dodged, but instead they’re sucking claw to the face

- The DOJ has their attention pointed at all the wrong things, and then the legal action is either gonna ricochet off or cause more harm than good. They actually mention the bubble colors in the filing, for chrissakes. Mostly they seem determined to go after the things people actually like about Apple’s gear?

But this is all so much dumber than last time, mostly because Microsoft wasn’t living in a world where the Microsoft lawsuit had already happened. This was so, so avoidable; a few performative rule changes, cut some prices, form a few industry working groups, maybe start a Comics Code Authority, whatever. Instead, Apple’s entire response to the whole situation has been somewhere between a little kid refusing to leave the toy store and an old guy yelling “it’s better to reign in hell than serve in heaven!” at the Arby’s drive through. I’m not sure I can think of another example where a company blew their own legs off because deep down they really don’t believe that regulators are real. That said, the entire post-dot-com Big Tech world only exists because the entire regulatory system has been hibernating out of sight. Well, it’s roused now, baby.

You know the part at the start of that Mork Movie where The Martian keeps getting into streetfights, but then keeps getting himself out of trouble because he knows some obscure legal technicality, but then the judge that looks exactly like Kurt Vonnegut says something like “I don’t care, you hit a cop and you’re going down,” so that the rest of the movie can happen? I think Apple is about to learn some cool life lessons as a janitor, is what I’m saying.

I may have let that metaphor get away from me. Let’s move on, I’m sure there aren’t any other sections here where the recent news will cause me to have to revise from future-tense to past-tense!

Mac: A-

Honestly, as a product line, the mac is probably as coherent and healthy as it’s ever been. Now that they’re fully moved over to their own processors and no longer making “really nice Intel computers”, we’re starting to see some real action.

A line of computers where they all have the same “guts” and the form factor is an aesthetic & functional choice, but not a performance one, is something no one’s ever done before? It seems like they’re on the verge of getting into an annual or annual-and-a-half regular upgrade cycle like the iOS devices have, and that’ll really be a thing when it lands.

Well, all except The Mac Pro, which still feels like a placeholder?

Are they expensive? Yes they are. Pound-for-pound, they’re not as expensive as they seem, because they don’t make anything on the lower-end of the spectrum, and so a lot of complaints about price have the same tenor as complaining that BMWs cost more than an entry-level Toyota Camry, where you just go “yeah, man, they absolutely do.” Then I go look at what it costs to upgrade to a usable amount of RAM and throw my hands up in disgust. How is that not the lead on the DOJ action? They want HOW much to get up to 16 gigs of RAM?

MacOS as a platform is evolving well beyond “BSD, but with a nice UI” the way it was back when it was named OS X. I’m not personally crazy about a lot of the design moves, but I’d be hard pressed to call most of them “objectively bad”, as opposed to “not to my taste”. Except for that new settings panel, that’s garbage.

All that said… actually, I’m going to finish this sentence down under “SW Quality”. I’ll meet ya down there.

iPhone: 🔥

On the one hand, the iPhone might be the most polished and refined tech product of all time. Somewhere around the iPhone 4 or 5, it was “done”; all major features were there, the form factor was locked in. And Apple kept refining, polishing. These supercomputers-in-slabs-of-glass are really remarkable.

On the other hand, that’s not what anyone means when they talk about the iPhone in 2024. Yeah, let’s talk about the App Store.

I had a whole thing here about how the app store was clearly the thing that was going to summon the regulators, which I took out partly because it was superfluous now, but also because apparently it was actually the bubble colors?

There’s a lot of takes on the nature of software distribution, and what kind of device the phone is, and how the ecosystem around it should work, and “what Apple customers want.” Well, okay, I’m an Apple user. Mostly a fairly satisfied one. And you know what I want? I want the app store they fucking advertise.

Instead, I had to keep having conversations with my kids about “trick games”, and explain that no, the thing called “the Oregon trail” in the app store isn’t the game they’ve heard about, but is actually a fucking casino. I like Apple’s kit quite a bit, and I keep buying it, but never in a million years will I forgive Tim Apple for the conversations I had to keep having with them about one fucking scam app after another.

Because this is what drives me the most crazy in all the hoopla around the app stores: if it worked like they claim it works, none of this would be happening. Instead, we have bizarre and inconsistent app review, apps getting pulled after being accepted, openly predatory in-app purchases, and just scam after casino after should-be-illegal-knock-off-clone after scam.

The idea is great: Phones for people who don’t use computers as a source of self-actualization. Phones and Macs are different products, with a different deal. Part of the deal is that with the iPhone you can do “less”, but what you can do is rock solid, safe, and you don’t ever have to worry about what your mom or your kid might download on their device.

I know the deal, I signed up for that deal on purpose! I want them to hold up their side of the bargain.

Which brings me to my next point. One of the metaphors people use for iOS devices—which I think is a good one!—is that they’re more like game consoles than general purpose computers. They’re “app consoles”. And I like that, that’s a solid way of looking at the space. It’s Jobs’ “cars vs trucks” metaphor but with a slightly less-leaky abstraction.

But you know who doesn’t have these legal and developer relations problems, and who isn’t currently having their ass handed to them by the EU and the DOJ? Nintendo.

This is what kills me. You can absolutely sell a computer where every piece of software is approved by you, where you get a cut, where the store isn’t choked by garbage, where everyone is basically happy. Nintendo has been doing that for checks notes 40 years now?

Hell, Nintendo even kept the bottom from falling out of the prices by enforcing that while you could sell for any price, you had to sell the physical and digital copies at the same amount, and then left all their stuff at 60-70 bucks, giving air cover to the small guys to charge a sustainable price.

Apple and Nintendo are very similar companies, building their own hardware and software, at a slightly different angle from the rest of their industries. They both have a solid “us first, customers second, devs third” world-view. But Nintendo has maintained a level of control over their platform that Apple could only dream of. And I’m really oversimplifying here, but mostly they did this by just not being assholes? Nintendo is not a perfect company, because none of them are, but you know what? I can play Untitled Goose Game on my Switch.

In the end, Apple was so averse to games, they couldn’t even bring themselves to use Nintendo’s playbook to keep the Feds off their back.

iPad: C

I’m utterly convinced that somewhere around 1979 Steve Jobs had a vision—possibly chemically assisted—of The Computer. A device that was easy to use, fully self-contained, an appliance, not a specialist’s tool. Something kids could pick up and use.

Go dial up his keynote where he introduces the first iPad. He knows he’s dying, even if he wasn’t admitting it, and he knows this is the last “new thing” he’s going to present. The look on his face is satisfaction: he made it. The thing he saw in the desert all those years ago, he’s holding it in his hands on stage. Finally.

So, ahhhh, why isn’t it actually good for anything?

I take that back; it’s good for two things: watching video, and casual video games. Anything else… not really?

I’m continually baffled by the way the iPad just didn’t happen. It’s been fourteen years; fourteen years after the first Mac, the Mac Classic was basically over, all the stuff the Mac opened up was well in the past-tense. I’m hard-pressed to think of anything that happened because the iPad existed. Maybe in a world with small laptops and big-ass phones, the iPad just doesn’t have a seat at the big-kids table?

Watch & Wearables: C

I like my watch enough that when my old one died, I bought a new one, but not so much that I didn’t have to really, really think about it.

Airpods are pretty cool, except they make my ears hurt so I stopped using them.

Is this where we talk about the Cyber Goggles?

AppleTV

Wait, which Apple TV?

AppleTV (the hardware): B

For the core use-case, putting internet video on my TV, it’s great. Great picture, the streaming never stutters, even the remote is decent now. It’s my main way to use my TV, and it’s a solid, reliable workhorse.

But look, that thing is a full-ass iPhone without a screen. It’s got more compute power than all of North America in the 70s! Is this really all we’re going to use this for? This is an old example, but the AppleTV feels like it could easily slide into being the 3rd or 2nd best game console with almost no effort, and it just… doesn’t.

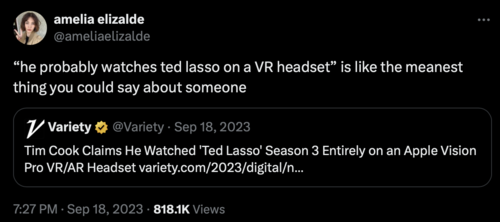

AppleTV (the service): B

Ted Lasso notwithstanding, this is a service filled exclusively with stuff I have no interest in. I’m not even saying it’s bad! But a pass from me, chief.

AppleTV (the app): F

Absolute total garbage, just complete trash. I’ll go to almost any length to avoid using it.

Services: C

What’s left here?

iCloud drive? Works okay, I guess, but you’ll never convince me to rely on it.

Apple Arcade? It’s fine, other than it shouldn’t have to exist.

Apple Fitness? No opinion.

Apple News? Really subpar, with the trashiest ads I’ve seen in a while.

Apple Music? The service is outstanding, no notes. The app, however, manages to keep getting worse every OS update, at this point it’s kind of remarkable.

Apple Classical Music? This was the best you could do, really?

iTunes Match? I’m afraid to cancel. Every year I spend 15 bucks so I don’t have to learn which part of my cloud library will vanish.

There’s ones I’m not remembering, right? That’s my review of them.

Homekit: F

I have one homekit device in my house—a smart lightbulb. You can set the color temperature from the app! There is no power in the universe that would convince me to add a second.

HW Reliability: A

I don’t even have a joke about this. The hardware works. I mean, I still have to turn my mouse over to charge it, like it’s a defeated Koopa Troopa, but it charges every time!

SW Quality: D

Let me tell you a story.

For the better part of a decade, my daily driver was a 2013 15-inch MacBook Pro. In that time, I’m pretty sure it ran every OS X flavor from 10.9 to 10.14; we stopped at Mojave because there was some 32-bit only software we needed for work.

My setup was this: I had the laptop itself in the center of the desk, on a little stand, with the clamshell open. On either side, I had an external monitor. Three screens, where the built-in laptop one in the middle was smaller but effectively higher resolution, and the ones on the sides were physically larger but had slightly chunkier pixels. (Eventually, I got a smokin’ deal on some 4k BenQs on Amazon, and that distinction ceased.) A focus monitor in the center for what I was working on that was generally easier to read, and then two outboard monitors for “bonus content.”

The monitor on the right plugged into the laptop’s right-side HDMI port. The monitor on the left plugged into one of the Thunderbolt ports—this was the original thunderbolt when it still looked like firewire or mini-displayport—via a thunderbolt-to-mini-displayport cable. In front of the little stand, I had a wired Apple keyboard with the numeric keypad that plugged into the USB-A port on the left side. I had a wireless Apple mouse. Occasionally, I’d plug into the wired network connection with a thunderbolt-to-Cat6 adapter I kept in my equipment bag. The magsafe power connection clicked in on the left side. Four, sometimes five cables, each clicking into their respective port.

Every night, I’d close the lid, unplug the cables in a random order, and take the laptop home. The next morning, I’d come in, put the laptop down, plug those cables back in via another random order, open the lid, and—this is the important part—every window was exactly where I had left it. I had a “workspace” layout that worked for me—email and slack on the left side, web browser and docs on the right, IDE or text editor in the center. Various Finder windows on the left side pointing at the folders holding what I was working on.

I’d frequently, multiple times a week, unplug the laptop during the middle of the day, and hike over to another building, or a conference room, or the coffee shop. Sometimes I’d plug into another monitor, or a projector? Open the lid, those open windows would re-arrange themselves to what was available. It was smart enough to recognize that if there was only one external display, that was probably a projector, and not put anything on it except the display view of Powerpoint or Keynote.

Then, I’d come back to my desk, plug everything back in, open the lid, and everything was exactly where it was. It worked flawlessly, every time. I was about to type “like magic”, but that’s wrong. It didn’t work like magic, it worked like an extremely expensive professional tool that was doing the exact job I bought it to do.

My daily driver today is a 16-inch 2021 M1 MacBook Pro running, I think, macOS 12. The rest of my peripherals are the same: same two monitors, same keyboard, same mouse. Except now, I have a block of a dock on the left side of my desk for the keyboard and wired network drop.

In the nineteen months I’ve had this computer, let me tell you how many times I plugged the monitors back in and had the desktop windows in the same places they were before: Literally Never.

In fact, the windows wouldn’t even stay put when it went to sleep, much less when I closed the lid. The windows would all snap back to the central monitor, the desktops of the two side monitors would swap places. This never happened on the old rig over nearly a decade, and happens every time with the new one.

Here is what I had to do so that my email is still on the left monitor when I come back from lunch:

- I have a terminal window running `caffeinate` all the time. Can’t let it go to sleep!

- The cables from the two monitors are plugged into the opposite side of the computer from where they sit: the cables cross over in the back and plug into the far side

- Most damning of all, I can’t use the reintroduced HDMI port; both monitors have to be plugged in via USB-C cables. The cable on the right, which needed an adapter to turn the HDMI cable into a USB-C/Thunderbolt connection, is plugged into the USB-C port right next to the HDMI port, which is collecting dust. Can I use it? No, nothing works if that port is lit up.

Please don’t @-me with your solution, I guarantee you whatever you’re thinking of I tried it, I read that article, I downloaded that app. This took me a year to determine by trial and error, like I was a Victorian scientist trying to measure the refraction of the æther, and I’m not changing anything now. It’s a laptop in name only, I haven’t closed the lid or moved it in months, and I’m not going to. God help me if I need to travel for work.

I’ve run some sketchy computers, I depended on the original OEM Windows 95 for over a year. I have never, in forty years, needed to deploy a Rube Goldberg–ass solution like this to keep my computer working right.

And everything is like this. I could put another thousand words here about things that worked great on the old rig—scratch that, that literally still work on the old rig—that just don’t function right on the new one. The hardware is so much better, but everything about using the computer is so much worse.

Screw the chat bubbles, get the DOJ working on why my nice monitors don’t work any more.

Dev Relations: D

Absolutely in the toilet, the worst I have ever seen it. See: just about everything above this. Long-time indie “for the love of the game” mac devs are just openly talking shit at this point. You know that Trust Thermocline we got all excited about as a concept a couple years ago? Yeah, we are well below that now.

Bluntly, the DOJ doesn’t move if all the developers working on the platform are happy and quiet.

I had an iOS-based project get scrapped in part because we weren’t willing to incur the risk of giving Apple total veto power over the product; that was five or six years ago now, and things have only decayed since then.

This is a D instead of an F because I’m quite certain it’s going to get worse before it gets better.

Environ/Social: ¯\_(ツ)_/¯

This category feels like one of those weird “no ethical consumption under capitalism” riddles. Grading on the curve of “silicon valley companies”, they’re doing great here. On the other hand, that bar is on the floor. Like, it’s great that they make it easy to recycle your old phones, but maybe just making it less of a problem to throw things out hasn’t really backed up to the fifth “why”, you know?

Potpourri: N/A

This isn’t a sixcolors category, but I’m not sure where to put the fact that I like my HomePod mini? It’s a great speaker!

Also, please start making a wifi router again, thanks.

What Now: ?

Originally, this all wrapped up with an observation that it’s great that the product design is firing on all cylinders and that services revenue is through the roof, but if they don’t figure out how to stop pissing off developers and various governments, things are going to get weird, but I just highlighted all that and hit delete because we’re all the way through that particular looking glass now.

Back in the 90s, there was nothing much else going on, and Microsoft was doing some openly, blatantly illegal shit. Here? There’s a lot else going on, and Apple are mostly just kinda jerks?

I think that here in 2024, if the Attorney General of the United States is inspired to redirect a single brain cell away from figuring out how to stop a racist game show host from overthrowing the government and instead towards the color of the text message bubbles on his kid’s phone, that means that Apple is Well and Truly Fucked. I think the DOJ is gonna carve into them like a swarm of coconut crabs that just found a stranded aviator.

Maybe they shoulder-roll through this, dodge the big hits, settle for a mostly-toothless consent decree. You’d be hard-pressed, from the outside, to point at anything meaningfully different about Microsoft in 1999 vs 2002. But before they settled, they did a lot of stuff, put quite a few dents in the universe, to coin a phrase. Afterwards? Not a whole lot. Mostly, it kept them tied up so that they didn’t pay attention to what Google was doing. And we know how that went.

I’m hard-pressed to think of a modern case where antitrust action actually made things better for consumers. I mean, it’s great that Microsoft got slammed for folding IE into Windows, but that didn’t save Netscape, you know? And I was still writing CSS fills for IE6 a decade later. Roughing up Apple over ebooks didn’t fix anything. I’m not sure mandating that I need to buy new charge cables was solving a real problem. And with the benefit of hindsight, I’m not sure breaking up Ma Bell did much beyond make the MCI guy a whole lot of money. AT&T reformed like T2, just without the regulations.

The problem here is that it’s the fear of enforcement that’s supposed to do the job, not the actual enforcement itself, but that gun won’t scare anyone if they don’t think you’ll ever fire it. (Recall, this is why the Deliverator carried a sword.) Instead, Apple’s particular brew of arrogance and myopic confidence called this all down on them.

Skimming the lawsuit, and the innumerable articles about the lawsuit, the things the DOJ complains about are about a 50/50 mix of “yeah, make them stop that right now”, and “no wait, I bought my iPhone for that on purpose!” The bit about “shapeshifting app store rules” is already an all-time classic, but man oh man do I not want the Feds to legislate iOS into Android, or macOS into Windows. There’s a very loud minority of people who would never buy something from Apple (or Microsoft) on principle, and they really think every computer-like device should be forced to work like Ubuntu or whatever, and that is not what I bought my iPhone for.

I’m pessimistic that this is going to result in any actually positive change, in case that wasn’t coming through clearly. All I want is for them to hold up their end of the deal they already offered. And make those upgrades cheaper. Quit trying to soak every possible cent out of every single transaction.

And let my monitors work right.

The Dam

Real blockbuster from David Roth on the Defector this morning, which you should go read: The Coffee Machine That Explained Vice Media

In a large and growing tranche of wildly varied lines of work, this is just what working is like—a series of discrete tasks of various social function that can be done well or less well, with more dignity or less, alongside people you care about or don't, all unfolding in the shadow of a poorly maintained dam.

It goes on like that until such time as the ominous weather upstairs finally breaks or one of the people working at the dam dynamites it out of boredom or curiosity or spite, at which point everyone and everything below is carried off in a cleansing flood.

[…]

That money grew the company in a way that naturally never enriched or empowered the people making the stuff the company sold, but also never went toward making the broader endeavor more likely to succeed in the long term.

Depending on how you count, I’ve had that dam detonated on me a couple of times now. He’s talking about media companies, but everything he describes applies to a lot more than just that. More than once I’ve watched a functional, successful, potentially sustainable outfit get dynamited because someone was afraid they weren’t going to cash out hard enough. And sure, once you realize that to a particular class of ghoul “business” is a synonym for “high-stakes gambling”, a lot of the decisions make more sense, at least on their own terms.

What always got me, though, was this:

These are not nurturing types, but they are also not interested in anything—not creating things or even being entertained, and increasingly not even in commerce.

What drove me crazy was that these people didn’t use the money for anything. They all dressed badly, drove expensive but mediocre cars (mid-list Acuras or Ford F-250s), didn’t seem to care about their families, and didn’t seem to have any recognizable interests or hobbies. This wasn’t a case of “they had bad taste in music”, it was “they don’t listen to music at all.” What occasional flickers of interest there were (fancy bicycles or golf clubs or something) were always more about proving they could spend the money than about wanting whatever it was.

It’s one thing if the boss cashes out and drives up to lay everyone off in a Lamborghini, but it’s somehow more insulting when they drive up in the second-best Acura, you know?

I used to look at these people and wonder, what did you dream about when you were young? And now that you could be doing whatever that was, why aren’t you?

Cyber-Curriculum

I very much enjoyed Cory Doctorow’s riff today on why people keep building torment nexii: Pluralistic: The Coprophagic AI crisis (14 Mar 2024).

He hits on an interesting point, namely that for a long time the fact that people couldn’t tell the difference between “science fiction thought experiments” and “futuristic predictions” didn’t matter. But now we have a bunch of aging gen-X tech billionaires waving dog-eared copies of Neuromancer or The Moon Is a Harsh Mistress or something, and, well…

I was about to make a crack that it sorta feels like high school should spend some time asking students “so, what do you think is going on with those robots in Blade Runner?” or the like, but you couldn’t actually show Blade Runner in a high school. Too much topless murder. (Whether or not that should be the case is beside the point.)

I do think we should spend some of that literary analysis time in high school English talking about how science fiction with computers works, but what book do you go with? Is there a cyberpunk novel without weird sex stuff in it? I mean, weird by high school curriculum standards. Off the top of my head, thinking about books and movies, Neuromancer, Snow Crash, Johnny Mnemonic, and Strange Days all have content that wouldn’t get past the school board. The Matrix is probably borderline, but that’s got a whole different set of philosophical and technological concerns.

Goes and looks at his shelves for a minute

You could make Hitchhiker work. Something from later Gibson? I’m sure there’s a Bruce Sterling or Rudy Rucker novel I’m not thinking of. There’s a whole stack of Ursula Le Guin everyone should read in their teens, but I’m not sure those cover the same things I’m talking about here. I’m starting to see why this hasn’t happened.

(Also, Happy π day to everyone who uses American-style dates!)

The Sky Above The Headset Was The Color Of Cyberpunk’s Dead Hand

Occasionally I poke my head into the burned-out wasteland where twitter used to be, and while doing so stumbled over this thread by Neal Stephenson from a couple years ago:

I had to go back and look it up, and yep: Snow Crash came out the year before Doom did. I’d absolutely have stuck this fact in Playthings For The Alone if I’d remembered, so instead I’m gonna “yes, and” my own post from last month.

One of the oft-remarked-on aspects of the 80s cyberpunk movement was that the majority of the authors weren’t “computer guys” beforehand; they were coming at computers from a literary/artist/musician worldview, which is part of why cyberpunk hit the way it did. It wasn’t the way computer people thought about computers; it was the street finding its own uses for things, to quote Gibson. But a less remarked-on aspect was that they also weren’t gamers. And not just computer games; they weren’t into board games or tabletop RPGs either.

Snow Crash is still an amazing book, but it was written at the last possible second where you could imagine a multi-user digital world and not treat “pretending to be an elf” as a primary use-case. Instead the Metaverse is sort of a mall? And what “games” there are aren’t really baked in, they’re things a bored kid would do at a mall in the 80s. It’s a wild piece of context drift from the world in which it was written.

In many ways, Neuromancer has aged better than Snow Crash, if for no other reason than that it’s clear that the part of The Matrix that Case is interested in is a tiny slice, and it’s easy to imagine Wintermute running several online game competitions off camera, whereas in Snow Crash it sure seems like The Metaverse is all there is; a stack of other big online systems next to it doesn’t jibe with the rest of the book.

But all that makes Snow Crash really useful as a point of reference, because depending on who you talk to it’s either “the last cyberpunk novel” or “the first post-cyberpunk novel”. Genre boundaries are tricky, especially when you’re talking about artistic movements within a genre, but there’s clearly a set of work that includes Neuromancer, Mirrorshades, Islands in the Net, and Snow Crash, that does not include Pattern Recognition, Shaping Things, or Cryptonomicon; the central aspect probably being “books about computers written by people who do not themselves use computers every day”. Once the authors in question all started writing their novels in Word and looking things up on the web, the whole tenor changed. As such, Snow Crash unexpectedly found itself as the final statement for a set of ideas, a particular mix of how near-future computers, commerce, and the economy might all work together: a vision with strong social predictive power, but unencumbered by the lived experience of actually using computers.

(As the old joke goes, if you’re under 50, you weren’t promised flying cars, you were promised a cyberpunk dystopia, and well, here we are, pick up your complimentary torment nexus at the front desk.)

The accidental predictive power of cyberpunk is a whole media thesis on its own, but it’s grimly amusing that in all the places where cyberpunk gets the future wrong, it’s usually because the author wasn’t being pessimistic enough. The Bridge Trilogy is pretty pessimistic, but there’s no indication that a couple million people died of a preventable disease because the immediate ROI on saving them wasn’t high enough. (And there are at least two diseases I could be talking about there.)

But for our purposes here, one of the places the genre overshot was this idea that you’d need a 3d display (like a headset) to interact with a 3d world. And this is where I think Stephenson’s thread above is interesting, because it turns out it really didn’t occur to him that 3d on a flat screen would be a thing, and he assumed that any sort of 3d interface would require a head-mounted display. As he says, that got stomped the moment Doom came out. I first read Snow Crash in ’98 or so, and even then I was thinking none of this really needs a headset; this would all work fine on a decently-sized monitor.

And so we have two takes on the “future of 3d computing”: the literary tradition from the cyberpunk novels of the 80s, and then actual lived experience from people building software since then.

What I think is interesting about the Apple Cyber Goggles, in part, is that it feels like that earlier, literary take on how futuristic computers would work is re-emerging and directly competing with the last four decades of actual computing that have happened since Neuromancer came out.

In a lot of ways, Meta is doing the funniest and most interesting work here, as the former Oculus headsets are pretty much the cutting edge of “what actually works well with a headset”, while at the same time, Zuck’s “Metaverse” is blatantly an older millennial pointing at a dog-eared copy of Snow Crash saying “no, just build this” to a team of engineers desperately hoping the boss never searches the web for “second life”. They didn’t even change the name! And this makes a sort of sense; there are parts of Snow Crash that read less like fiction and more like Stephenson is writing a pitch deck.

I think this is the fundamental tension behind the reactions to Apple Vision Pro: we can now build the thing we were all imagining in 1984. The headset is designed by cyberpunk’s dead hand; after four decades of lived experience, is it still a good idea?

Time Zones Are Hard

In honor of leap day, my favorite story about working with computerized dates and times.

A few lifetimes ago, I was working on a team that was developing a wearable. It tracked various telemetry about the wearer, including steps. As you might imagine, there was an option to set a Step Goal for the day, and there was a reward the user got for hitting the goal.

Skipping a lot of details, we put together a prototype to do a limited alpha test, a couple hundred folks out in the real world walking around. For reasons that aren’t worth going into, and are probably still under NDA, we had to do this very quickly; on the software side we basically had to start from scratch and have a fully working stack in 2 or 3 months, for a feature set that was probably at minimum 6-9 months worth of work.

There were a couple of ways to slice what we meant by “day”, but we went with the most obvious one, midnight to midnight. Meaning that the user had until midnight to hit their goal, and then at 12:00 their steps for the day reset to 0.
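For the curious, here’s a minimal sketch of what “midnight to midnight” looks like once it becomes code, assuming step events arrive as timezone-aware timestamps and the wearer’s time zone is known. The function names and the data shape are hypothetical, made up for illustration; this is not the code that shipped on that wearable.

```python
from collections import defaultdict
from datetime import date, datetime, timedelta, timezone, tzinfo


def daily_step_totals(events: list[tuple[datetime, int]], tz: tzinfo) -> dict[date, int]:
    """Sum step events into midnight-to-midnight buckets in the given time zone.

    Hypothetical data shape: each event is (timezone-aware timestamp, step count).
    """
    totals: defaultdict[date, int] = defaultdict(int)
    for timestamp, steps in events:
        # Convert to the wearer's local time first; the calendar date of that
        # local time decides which "day" the steps count toward.
        local_day = timestamp.astimezone(tz).date()
        totals[local_day] += steps
    return dict(totals)


def goal_met(totals: dict[date, int], day: date, step_goal: int) -> bool:
    """True if the steps bucketed into `day` reach the (hypothetical) step goal."""
    return totals.get(day, 0) >= step_goal


if __name__ == "__main__":
    utc = timezone.utc
    minus_eight = timezone(timedelta(hours=-8))  # fixed UTC-8 offset, no DST rules
    # Two walks on either side of midnight UTC, at the end of leap day 2024.
    events = [
        (datetime(2024, 2, 29, 23, 30, tzinfo=utc), 500),
        (datetime(2024, 3, 1, 0, 30, tzinfo=utc), 700),
    ]
    print(daily_step_totals(events, utc))
    print(daily_step_totals(events, minus_eight))
```

Run as-is, the same two walks split across two days in UTC (500 and 700 steps) but both land on February 29 at UTC-8 (1,200 steps): “midnight” only means something once you’ve picked a time zone. A real implementation would more likely use named zones from `zoneinfo` so daylight saving time is handled; the fixed offset here just keeps the sketch dependency-free.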