April 8, 2021: A Sketch From The Midst Of A Pandemic

This was originally written for [REDACTED] on April 8, 2021. I’m republishing it here on its 3rd anniversary.

I got my shot at the Sacramento County "distribution center" at Cal Expo. Cal Expo, for those who don't know or don't remember, is the permanent home of the California State Fair. It's plunked down unceremoniously on the north side of the American River, in the middle of a weird swath of the city that's been permanently in the middle of one failing urban renewal project or another for my entire life. These usually involve "rebranding" the area—according to a sign I drove past which is both brand new and already battered, I'm told we're now calling this area "uptown sacramento". It'll have a new name this time next year.

Cal Expo itself is a strange beast—a 900-acre facility built to host an annual 17-day event. The initial fever-dream was that it would become "Disneyland North"; now it mostly plays host to any event that needs a whole lot of space for a single weekend—RV fairs, garden shows, school district-wide science fairs.

The line to get into the vaccination site is identified with a large hand-lettered sign reading "VAX", surrounded by National Guard troops. Everyone stays in their car, and the line of cars snakes between dingy orange cones across acres of cracked parking lot. Enormous yellow weeds pour out of every crack, and I realize, in one of the strangest moments of dissonance of the last year, that this is the section of parking lot that in the before-times hosted the Christmas tree lot. Now it's full of idling cars and masked troops in camo.

As befits its late-60s origins, Cal Expo is mostly composed of bare dirt and giant brutalist rectangular concrete buildings. They're all meant to be multi-use, so they've got high ceilings, no permanent internal fittings, and multiple truck-sized roll-up doors. They give the impression of an abandoned warehouse.

The line of cars continues into one of these bunkers. Incredibly friendly workers; a mix of National Guard, CA Department of Health and Human Services, and a bunch of older RNs and MDs with strong "retired and now a docent" energy.

The whole thing runs like clockwork - directed into a line of cars, get to the front, get the shot, they drop a timer set to 15 minutes on the dashboard, and then directed out to the "Recovery Area", which is the next parking lot over full of other lines of cars.

The air is incredibly jovial. The woman who gives me my shot compliments my Hawaiian shirt, and hopes we'll all be "somewhere like that" soon. A grandmotherly RN comes by and gives me advice about where to keep my vax card. A younger guardsman in a medic uniform explains the symptoms to watch for, and then we shoot the breeze about Star Wars for a minute before he moves on. An older Guard Colonel walks by, sees the "JANSSEN" on the card on my dashboard and says "Ahhh! The one and done, NICE!" with a fist pump as he walks on.

Another HHS RN comes by and tells me my timer is up, along with every other car in the row. Another national guardswoman waves at me as I drive off. Everyone is wearing a mask, but you can tell everyone is smiling.

We Need to Form An Alliance! Right Now!!

Today is the fourth anniversary of my single favorite piece of art to come out of the early-pandemic era, this absolute banger by the Auralnauts:

Back when we still thought this was all going to “blow over” in a couple of weeks, my kids were planning to do this song for the talent show at the end of that school year.

(Some slight context: the Auralnauts Star Wars Saga started as kind of a bad lip-reading thing, and then went its own way into an alternate version of Star Wars where the Jedi are frat-bro jerks and the Sith are just trying to run a chain of family restaurants. The actual villain of the series is “Creepio”, who has schemes of his own. I’m not normally a re-edit mash-up guy, but those are amazing.)

Implosions

Despite the fact that basically everyone likes movies, video games, and reading things on websites, every company that does one of those seems to continue to go out of business at an alarming rate?

For the sake of future readers, today I’m subtweeting Vice and Engadget both getting killed by private equity vampires in the same week, but also Coyote vs Acme, and all the video game layoffs, and Sports Illustrated becoming an AI slop shop and… I know “late stage capitalism” has been a meme for years now, and the unsustainable has been wrecking out for a while, but this really does feel like we’re coming to the end of the whole neoliberal project.

It seems like we’ve spent the whole last two decades hearing about how something valuable or well-liked went under because “their business model wasn’t viable”, but on the other hand, it sure doesn’t seem like anyone was trying to find a viable one?

Rusty Foster asks What Are We Dune 2 Journalism? while Josh Marshall asks over at TPM: Why Is Your News Site Going Out of Business?. Definitely click through for the graph on TPM’s ad revenue.

What I find really wild is that all these big implosions are happening at the same time as folks are figuring out how to make smaller, subscription based coöps work.

Heck, just looking in my RSS reader alone, you have:

Defector,

404 Media,

Aftermath,

Rascal News,

1900HOTDOG,

a dozen other substacks or former substacks,

Achewood has a Patreon!

It’s more possible than ever to actually build a (semi?) sustainable business out there on the web if you want to. Of course, all those sites combined employ fewer people than Sports Illustrated ever did. Because we’re talking less about “scrappy startups”, and more “survivors of the disaster.”

I think those Defector-style coöps, and substacks, and patreons are less about people finding viable business models than they are the kind of organisms that survive a major plague or extinction event, and have evolved specifically around increasing their resistance to that threat. The only thing left as the private equity vultures turn everything else and each other into financial gray goo.

It’s tempting to see some deeper, sinister purpose in all this, but Instant Pot wasn’t threatening the global order, Sports Illustrated really wasn’t speaking truth to power, and Adam Smith’s invisible hand didn’t shutter everyone’s favorite toy store. Batgirl wasn’t going to start a socialist revolution.

But I don’t think the ghouls enervating everything we care about have any sort of viewpoint beyond “I bet we could loot that”. If they were creative enough to have some kind of super-villain plan, they’d be doing something else for a living.

I’ve increasingly taken to viewing private equity as the economic equivalent of Covid; a mindless disease ravaging the unfortunate, or the unlucky, or the insufficiently supported, one that we’ve failed as a society to put sufficient public health protections in place against.

“Hanging Out”

For the most recent entry in asking if ghosts have civil rights, the Atlantic last month wonders: Why Americans Suddenly Stopped Hanging Out.

And it’s an almost perfect Atlantic article, in that it looks at a real trend, finds some really interesting research, and then utterly fails to ask any obvious follow-up questions.

It has all the usual howlers of the genre: it recognizes that something changed in the US somewhere around the late 70s or early 80s without ever wondering what that was, it recognizes that something else changed about 20 years ago without wondering what that was, it displays no curiosity whatsoever around the lack of “third places” and where, exactly, kids are supposed to actually go when they try to hang out. It’s got that thing where it has a chart of (something, anything) social over time, and you can perfectly pick out Reagan’s election and the ’08 recession, and not much else.

There’s lots of passive voice sentences about how “Something’s changed in the past few decades,” coupled with an almost perverse refusal to look for a root cause, or connect any social or political actions to this. You can occasionally feel the panic around the edges as the author starts to suspect that maybe the problem might be “rich people” or “social structures”, so instead of talking to people, he inspects a bunch of data about what people do, rather than why they do it. It’s the exact opposite of that F1 article; this has nothing in it that might cause the editor to pull it after publication.

In a revelation that will shock no one, the author instead decides that the reason for all this change must be “screens”, without actually checking to see what “the kids these days” are actually using those screens for. (Spoiler: they’re using them to hang out). Because, delightfully, the data the author is basing all this on tracks only in-person socializing, and leaves anything virtual off the table.

This is a great example of something I call “Alvin Toffler Syndrome”, where you correctly identify a really interesting trend, but are then unable to get past the bias that your teenage years were the peak of human civilization and so therefore anything different is bad. Future Shock.

I had three very strong reactions to this, in order:

First, I think that header image is accidentally more revealing than they thought. All those guys eating alone at the diner look like they have a gay son they cut off; maybe we live in an age where people have lower tolerance for spending time with assholes?

Second, I suspect the author is just slightly younger than I am, based on a few of the things he says, but also the list of things “kids should be doing” he cites from another expert:

“There’s very clearly been a striking decline in in-person socializing among teens and young adults, whether it’s going to parties, driving around in cars, going to the mall, or just about anything that has to do with getting together in person”.

Buddy, I was there, and “going to the mall, driving around in cars” sucked. Do you have any idea how much my friends and I would have rather hung out in a shared Minecraft server? Are you seriously telling me that eating a Cinnabon or drinking too much at a high school house party full of college kids home on the prowl was a better use of our time? Also: it’s not the 80s anymore, what malls?

(One of the funniest giveaways is that unlike these sorts of articles from a decade ago, “having sex” doesn’t get listed as one of the activities that teenagers aren’t doing anymore. Like everyone else between 30 and 50, the author grew up in a world where sex with a stranger can kill you, and so that’s slipped out of the domain of things “teenagers ought to be doing, like I was”.)

But mostly, though, I disagree with the fundamental premise. We might have stopped socializing the same ways, but we certainly didn’t stop. How do I know this? Because we’re currently entering year five of a pandemic that became uncontrollable because more Americans were afraid of the silence of their own homes than they were of dying.

Even Further Behind The Velvet Curtain Than We Thought

Kate Wagner, mostly known around these parts for McMansion Hell, but who also does sports journalism, wrote an absolutely incredible piece for Road & Track on F1, which was published and then unpublished nearly instantly. Why yes, the Internet Archive does have a copy: Behind F1's Velvet Curtain. It’s the sort of thing where if you start quoting it, you end up reading the whole thing out loud, so I’ll just block quote the subhead:

If you wanted to turn someone into a socialist you could do it in about an hour by taking them for a spin around the paddock of a Formula 1 race. The kind of money I saw will haunt me forever.

It’s outstanding, and you should go read it.

But, so, how exactly does a piece like this get all the way to being published out on Al Gore’s Internet, and then spiked? The Last Good Website tries to get to the bottom of it: Road & Track EIC Tries To Explain Why He Deleted An Article About Formula 1 Power Dynamics.

Road & Track’s editor’s response to Defector is one of the most brazen “there was no pressure because I never would have gotten this job if I waited until they called me to censor things they didn’t like” responses since, well, the Hugos, I guess?

Edited to add: Today in Tabs—The Road & Track Formula One Scandal Makes No Sense

March Fifth. Fifth March.

Today is Tuesday, March 1465th, 2020, COVID Standard Time.

Three more weeks to flatten the curve!

What else even is there to say at this point? Covid is real. Long Covid is real. You don’t want either of them. Masks work. Vaccines work.

It didn’t have to be like this.

Every day, I mourn the futures we lost because our civilization wasn’t willing to put in the effort to try to protect everyone. And we might have even pulled it off, if the people who already had too much had been willing to make a little less money for a while. But instead, we're walking into year five of this thing.

The Sky Above The Headset Was The Color Of Cyberpunk’s Dead Hand

Occasionally I poke my head into the burned-out wasteland where twitter used to be, and while doing so stumbled over this thread by Neal Stephenson from a couple years ago:

I had to go back and look it up, and yep: Snow Crash came out the year before Doom did. I’d absolutely have stuck this fact in Playthings For The Alone if I’d remembered, so instead I’m gonna “yes, and” my own post from last month.

One of the oft-remarked-on aspects of the 80s cyberpunk movement was that the majority of the authors weren’t “computer guys” before-hand; they were coming at computers from a literary/artist/musician worldview, which is part of why cyberpunk hit the way it did; it wasn’t the way computer people thought about computers—it was the street finding its own uses for things, to quote Gibson. But a less remarked-on aspect was that they also weren’t gamers. Not just not computer games, but any sort of board games, tabletop RPGs.

Snow Crash is still an amazing book, but it was written at the last possible second where you could imagine a multi-user digital world and not treat “pretending to be an elf” as a primary use-case. Instead the Metaverse is sort of a mall? And what “games” there are aren’t really baked in, they’re things a bored kid would do at a mall in the 80s. It’s a wild piece of context drift from the world in which it was written.

In many ways, Neuromancer has aged better than Snow Crash, if for no other reason than that it’s clear that the part of The Matrix that Case is interested in is a tiny slice, and it’s easy to imagine Wintermute running several online game competitions off camera, whereas in Snow Crash it sure seems like The Metaverse is all there is; a stack of other big on-line systems next to it doesn’t jibe with the rest of the book.

But, all that makes Snow Crash really useful as a point of reference, because depending on who you talk to it’s either “the last cyberpunk novel”, or “the first post-cyberpunk novel”. Genre boundaries are tricky, especially when you’re talking about artistic movements within a genre, but there’s clearly a set of work that includes Neuromancer, Mirrorshades, Islands in the Net, and Snow Crash, that does not include Pattern Recognition, Shaping Things, or Cryptonomicon; the central aspect probably being “books about computers written by people who do not themselves use computers every day”. Once the authors in question all started writing their novels in Word and looking things up on the web, the whole tenor changed. As such, Snow Crash unexpectedly found itself as the final statement for a set of ideas, a particular mix of how near-future computers, commerce, and the economy might all work together—a vision with strong social predictive power, but unencumbered by the lived experience of actually using computers.

(As the old joke goes, if you’re under 50, you weren’t promised flying cars, you were promised a cyberpunk dystopia, and well, here we are, pick up your complimentary torment nexus at the front desk.)

The accidental predictive power of cyberpunk is a whole media thesis on its own, but it’s grimly amusing that all the places where cyberpunk gets the future wrong, it’s usually because the author wasn’t being pessimistic enough. The Bridge Trilogy is pretty pessimistic, but there’s no indication that a couple million people died of a preventable disease because the immediate ROI on saving them wasn’t high enough. (And there’s at least two diseases I could be talking about there.)

But for our purposes here, one of the places the genre overshot was this idea that you’d need a 3d display—like a headset—to interact with a 3d world. And this is where I think Stephenson’s thread above is interesting, because it turns out it really didn’t occur to him that 3d on a flat screen would be a thing, and assumed that any sort of 3d interface would require a head-mounted display. As he says, that got stomped the moment Doom came out. I first read Snow Crash in ’98 or so, and even then I was thinking none of this really needs a headset, this would all work fine on a decently-sized monitor.

And so we have two takes on the “future of 3d computing”: the literary tradition from the cyberpunk novels of the 80s, and then actual lived experience from people building software since then.

What I think is interesting about the Apple Cyber Goggles, in part, is that it feels like that earlier, literary take on how futuristic computers would work re-emerging and directly competing with the last four decades of actual computing that have happened since Neuromancer came out.

In a lot of ways, Meta is doing the funniest and most interesting work here, as the former Oculus headsets are pretty much the cutting edge of “what actually works well with a headset”, while at the same time, Zuck’s “Metaverse” is blatantly an older millennial pointing at a dog-eared copy of Snow Crash saying “no, just build this” to a team of engineers desperately hoping the boss never searches the web for “second life”. They didn’t even change the name! And this makes a sort of sense, there are parts of Snow Crash that read less like fiction and more like Stephenson is writing a pitch deck.

I think this is the fundamental tension behind the reactions to Apple Vision Pro: we can now build the thing we were all imagining in 1984. The headset is designed by cyberpunk’s dead hand; after four decades of lived experience, is it still a good idea?

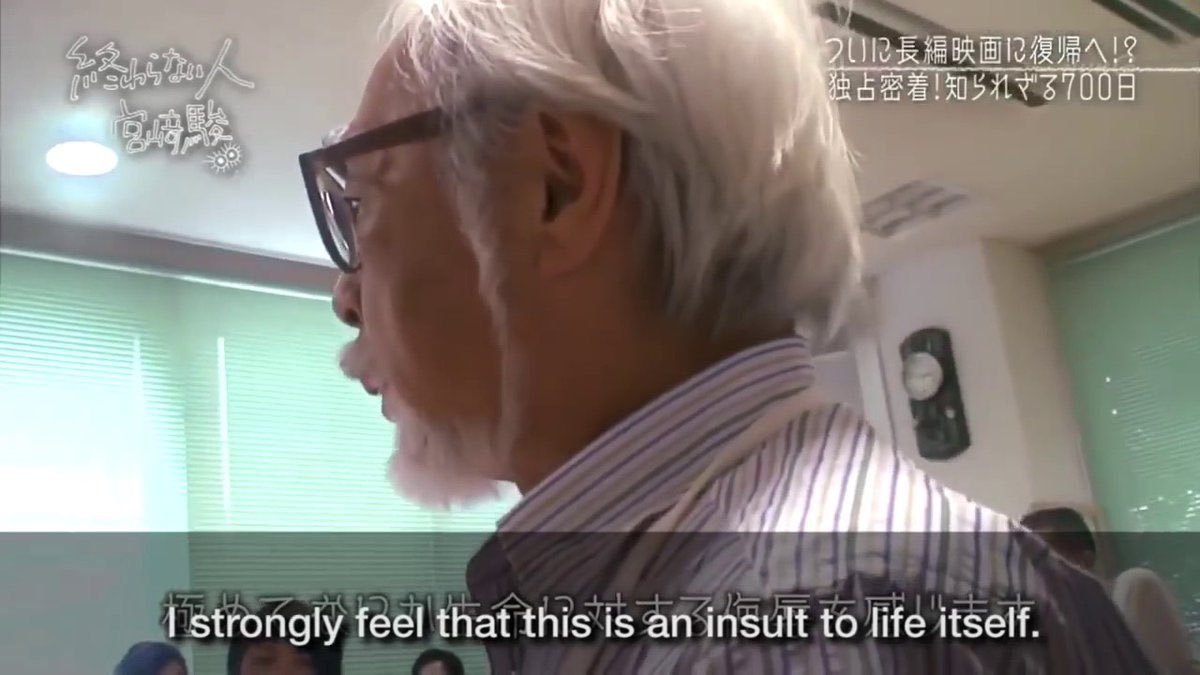

Fully Automated Insults to Life Itself

In 20 years’ time, we’re going to be talking about “generative AI” in the same tone of voice we currently use to talk about asbestos. A bad idea that initially seemed promising which ultimately caused far more harm than good, and that left a swathe of deeply embedded pollution across the landscape that we’re still cleaning up.

It’s the final apotheosis of three decades of valuing STEM over the Humanities, in parallel with the broader tech industry being gutted and replaced by a string of venture-backed pyramid schemes, casinos, and outright cons.

The entire technology is utterly without value and needs to be scrapped, legislated out of existence, and the people involved need to be forcibly invited to find something better to spend their time on. We’ve spent decades operating under the unspoken assumption that just because we can build something, that means it’s inevitable and we have to build it first before someone else does. It’s time to knock that off, and start asking better questions.

AI is the ultimate form of the joke about the restaurant where the food is terrible and also the portions are too small. The technology has two core problems, both of which are intractable:

- The output is terrible

- It’s deeply, fundamentally unethical

Probably the definitive article on generative AI’s quality, or profound lack thereof, is Ted Chiang’s ChatGPT Is a Blurry JPEG of the Web; that’s almost a year old now, and everything that’s happened in 2023 has only underscored his points. Fundamentally, we’re not talking about vast cyber-intelligences, we’re talking Sparkling Autocorrect.

Let me provide a personal anecdote.

Earlier this year, a coworker of mine was working on some documentation, and had worked up a fairly detailed outline of what needed to be covered. As an experiment, he fed that outline into ChatGPT, intending to publish the output, and I offered to look over the result.

At first glance it was fine. Digging in, though, it wasn’t great. It wasn’t terrible either—nothing in it was technically incorrect, but it had the quality of a high school book report written by someone who had only read the back cover. Or like documentation written by a tech writer who had a detailed outline they didn’t understand and a word count to hit? It repeated itself, it used far too many words to cover very little ground. It was, for lack of a better word, just kind of a “glurge”. Just room-temperature tepidarium generic garbage.

I started to jot down some editing notes, as you do, and found that I would stare at a sentence, then the whole paragraph, before crossing the paragraph out and writing “rephrase” in the margin. To try and be actually productive, I took a section and started to rewrite it in what I thought was a better, more concise manner—removing duplicates, omitting needless words. De-glurgifying.

Of course, I discovered I had essentially reconstituted the outline.

I called my friend back and found the most professional possible way to tell him he needed to scrap the whole thing and start over.

It left me with a strange feeling, that we had this tool that could instantly generate a couple thousand words of worthless text that at first glance seemed to pass muster. Which is so, so much worse than something written by a junior tech writer who doesn’t understand the subject, because this was produced by something that you can’t talk to, you can’t coach, that will never learn.

On a pretty regular basis this year, someone would pop up and say something along the lines of “I didn’t know the answer, and the docs were bad, so I asked the robot and it wrote the code for me!” and then they would post some screenshots of ChatGPT’s output full of a terribly wrong answer. Humane’s AI Pin demo was full of wrong answers, for heaven’s sake. And so we get this trend where ChatGPT manages to be an expert in things you know nothing about, but a moron about things you’re an expert in. I’m baffled by the responses to the GPT-n “search” “results”; they’re universally terrible and wrong.

And this is all baked in to the technology! It’s a very, very fancy set of pattern recognition based on a huge corpus of (mostly stolen?) text, computing the most probable next word, but not in any way considering if the answer might be correct. Because it has no way to; that’s totally outside the bounds of what the system can achieve.

A year and a bit later, and the web is absolutely drowning in AI glurge. Clarkesworld had to suspend submissions for a while to get a handle on blocking the tide of AI garbage. Page after page of fake content with fake images, content no one ever wrote and only meant for other robots to read. Fake articles. Lists of things that don’t exist, recipes no one has ever cooked.

And we were already drowning in “AI” “machine learning” glurge, and it all sucks. The autocorrect on my phone got so bad when they went from the hard-coded list to the ML one that I had to turn it off. Google’s search results are terrible. The “we found this answer for you” thing at the top of the search results is terrible.

It’s bad, and bad by design, it can’t ever be more than a thoughtless mashup of material it pulled in. Or even worse, it’s not wrong so much as it’s all bullshit. Not outright lies, but vaguely truthy-shaped “content”, freely mixing copied facts with pure fiction, speech intended to persuade without regard for truth: Bullshit.

Every generated image would have been better and funnier if you gave the prompt to a real artist. But that would cost money—and that’s not even the problem, the problem is that would take time. Can’t we just have the computer kick something out now? Something that looks good enough from a distance? If I don’t count the fingers?

My question, though, is this: what future do these people want to live in? Is it really this? Swimming in a sea of glurge? Just endless mechanized bullshit flooding every corner of the Web? Who looked at the state of the world here in the Twenties and thought “what the world needs right now is a way to generate Infinite Bullshit”?

Of course, the fact that the results are terrible-but-occasionally-fascinating obscures the deeper issue: It’s a massive plagiarism machine.

Thanks to copyleft and free & open source, the tech industry has a pretty comprehensive—if idiosyncratic—understanding of copyright, fair use, and licensing. But that’s the wrong model. This isn’t about “fair use” or “transformative works”, this is about Plagiarism.

This is a real “humanities and the liberal arts vs technology” moment, because STEM really has no concept of plagiarism. Copying and pasting from the web is a legit way to do your job.

(I mean, stop and think about that for a second. There’s no other industry on earth where copying other people’s work verbatim into your own is a widely accepted technique. We had a sign up a few jobs back that read “Expert level copy and paste from stack overflow” and people would point at it when other people had questions about how to solve a problem!)

We have this massive cultural disconnect that would be interesting or funny if it wasn’t causing so much ruin. This feels like nothing so much as the end result of valuing STEM over the Humanities and Liberal Arts in education for the last few decades. Maybe we should have made sure all those kids we told to “learn to code” also had some, you know, ethics? Maybe had read a couple of books written since they turned fourteen?

So we land in a place where a bunch of people convinced they’re the princes of the universe have sucked up everything written on the internet and built a giant machine for laundering plagiarism; regurgitating and shuffling the content they didn’t ask permission to use. There’s a whole end-state libertarian angle here too; just because it’s not explicitly illegal, that means it’s okay to do it, ethics or morals be damned.

“It’s fair use!” Then the hell with fair use. I’d hate to lose the wayback machine, but even that respects robots.txt.

I used to be a hard core open source, public domain, fair use guy, but then the worst people alive taught a bunch of if-statements to make unreadable counterfeit Calvin & Hobbes comics, and now I’m ready to join the Butlerian Jihad.

Why should I bother reading something that no one bothered to write?

Why should I bother looking at a picture that no one could be bothered to draw?

Generative AI and its ilk are the final apotheosis of the people who started calling art “content”, and meant it.

These are people who think art or creativity are fundamentally a trick, a confidence game. They don’t believe or understand that art can be about something. They utterly reject the concept of “about-ness”; the basic concept of “theme” is utterly beyond their comprehension. The idea that art might contain anything other than its most surface qualities never crosses their mind. The sort of people who would say “Art should soothe, not distract”. Entirely about the surface aesthetic over anything.

(To put that another way, these are the same kind of people who vote Republican but listen to Rage Against the Machine.)

Don’t respect or value creativity.

Don’t respect actual expertise.

Don’t understand why they can’t just have what someone else worked for. It’s even worse than not wanting to pay for it; these creatures actually think they’re entitled to it for free because they know how to parse a JSON file. It feels like the final end-point of a certain flavor of free software thought: no one deserves to be paid for anything. A key cultural and conceptual point past “information wants to be free” and “everything is a remix”. Just a machine that endlessly spits out bad copies of other work.

They don’t understand that these are skills you can learn, you have to work at, become an expert in. Not one of these people who spend hours upon hours training models or crafting prompts ever considered using that time to learn how to draw. Because if someone else can do it, they should get access to that skill for free, with no compensation or even credit.

This is why those machine generated Calvin & Hobbes comics were such a shock last summer; anyone who had understood a single thing about Bill Watterson’s work would have understood that he’d be utterly opposed to something like that. It’s difficult to fathom someone who liked the strip enough to do the work to train up a model to generate new ones while still not understanding what it was about.

“Consent” doesn’t even come up. These are not people you should leave your drink uncovered around.

But then you combine all that with the fact that we have a whole industry of neo-philes, desperate to work on something New and Important, terrified their work might have no value.

(See also: the number of abandoned javascript frameworks that re-solve all the problems that have already been solved.)

As a result, tech has an ongoing issue with cool technology that’s a solution in search of a problem, but ultimately is only good for some kind of grift. The classical examples here are the blockchain, bitcoin, NFTs. But the list is endless: so-called “4th generation languages”, “rational rose”, the CueCat, basically anything that ever got put on the cover of Wired.

My go-to example is usually bittorrent, which seemed really exciting at first, but turned out to only be good at acquiring TV shows that hadn’t aired in the US yet. (As they say, “If you want to know how to use bittorrent, ask a Doctor Who fan.”)

And now generative AI.

There’s that scene at the end of Fargo, where Frances McDormand is scolding The Shoveler for “all this for such a tiny amount of money”, and that’s how I keep thinking about the AI grift carnival. So much stupid collateral damage we’re gonna be cleaning up for years, and it’s not like any of them are going to get Fuck You(tm) rich. No one is buying an island or founding a university here, this is all so some tech bros can buy the deluxe package on their next SUV. At least crypto got some people rich, and was just those dorks milking each other; here we all gotta deal with the pollution.

But this feels weirdly personal in a way the dunning-krugerrands were not. How on earth did we end up in a place where we automated art, but not making fast food, or some other minimum wage, minimum respect job?

For a while I thought this was something along one of the asides in David Graeber’s Bullshit Jobs, where people with meaningless jobs hate it when other people have meaningful ones. The phenomenon of “If we have to work crappy jobs, we want to pull everyone down to our level, not pull everyone up”. See also: “waffle house workers shouldn’t make 25 bucks an hour”, “state workers should have to work like a dog for that pension”, etc.

But no, these are not people with “bullshit jobs”, these are upper-middle class, incredibly comfortable tech bros pulling down a half a million dollars a year. They just don’t believe creativity is real.

But because all that apparently isn’t fulfilling enough, they make up ghost stories about how their stochastic parrots are going to come alive and conquer the world, how we have to build good ones to fight the bad ones, but they can’t be stopped because it’s inevitable. Breathless article after article about whistleblowers worried about how dangerous it all is.

Just the self-declared best minds of our generation failing the mirror test over and over again.

This is usually where someone says something about how this isn’t a problem and we can all learn to be “prompt engineers”, or “advisors”. The people trying to become a prompt advisor are the same sort who would be proud they convinced Immortan Joe to strap them to the back of the car instead of the front.

This isn’t about computers, or technology, or “the future”, or the inevitability of change, or the march of progress. This is about what we value as a culture. What do we want?

“Thus did a handful of rapacious citizens come to control all that was worth controlling in America. Thus was the savage and stupid and entirely inappropriate and unnecessary and humorless American class system created. Honest, industrious, peaceful citizens were classed as bloodsuckers, if they asked to be paid a living wage. And they saw that praise was reserved henceforth for those who devised means of getting paid enormously for committing crimes against which no laws had been passed. Thus the American dream turned belly up, turned green, bobbed to the scummy surface of cupidity unlimited, filled with gas, went bang in the noonday sun.” ― Kurt Vonnegut, God Bless You, Mr. Rosewater

At the start of the year, the dominant narrative was that AI was inevitable, this was how things are going, get on board or get left behind.

That’s… not quite how the year went?

AI was a centerpiece in both Hollywood strikes, and both the Writers and Actors basically ran the table, getting everything they asked for, and enshrining a set of protections from AI into a contract for the first time. Excuse me, not protection from AI, but protection from the sort of empty suits that would use it to undercut working writers and performers.

Publisher after publisher has been updating their guidelines to forbid AI art. A remarkable number of other places that support artists instituted guidelines to ban or curtail AI. Even Kickstarter, which plunged into the blockchain with both feet, seemed to have learned their lesson and rolled out some pretty stringent rules.

Oh! And there’s some actual high-powered lawsuits bearing down on the industry, not to mention investigations of, shall we say, “unsavory” material in the training sets?

The initial shine seems to be off. Where last year was all about sharing goofy AI-generated garbage, there’s been a real shift in the air as everyone gets tired of it and starts pointing out that it sucks, actually. And that the people still boosting it all seem to have some kind of scam going. Oh, and in a lot of cases, it’s literally the same people who were hyping blockchain a year or two ago, and who seem to have found a new use for their warehouses full of GPUs.

One of the more heartening and interesting developments this year was the (long overdue) start of a re-evaluation of the Luddites. Despite the popular stereotype, they weren’t anti-technology, but anti-technology-being-used-to-disenfranchise-workers. This seems to be the year a lot of people sat up and said “hey, me too!”

AI isn’t the only reason “hot labor summer” rolled into “eternal labor september”, but it’s pretty high on the list.

There’s an argument that’s sometimes made that we don’t have any way as a society to throw away a technology that already exists, but that’s not true. You can’t buy gasoline with lead in it, or hairspray with CFCs, and my late lamented McDLT vanished along with the Styrofoam that kept the hot side hot and the cold side cold.

And yes, asbestos made a bunch of people a lot of money and was very good at being children’s pyjamas that didn’t catch fire, as long as that child didn’t need to breathe as an adult.

But, we've never done that for software.

Back around the turn of the century, there was some argument around if cryptography software should be classified as a munition. The Feds wanted stronger export controls, and there was a contingent of technologists who thought, basically, “Hey, it might be neat if our compiler had first and second amendment protection”. Obviously, that didn’t happen. “You can’t regulate math! It’s free expression!”

I don’t have a fully developed argument on this, but I’ve never been able to shake the feeling that that was a mistake, that we all got conned while we thought we were winning.

Maybe some precedent for heavily “regulating math” would be really useful right about now.

Maybe we need to start making some.

There’s a persistent belief in computer science since computers were invented that brains are a really fancy powerful computer and if we can just figure out how to program them, intelligent robots are right around the corner.

There’s an analogy that floats around that says if the human mind is a bird, then AI will be a plane, flying, but a very different application of the same principles.

The human mind is not a computer.

At best, AI is a paper airplane. Sometimes a very fancy one! With nice paper and stickers and tricky folds! But the key is that a hand has to throw it.

The act of a person looking at a bunch of art and trying to build their own skills is fundamentally different than a software pattern recognition algorithm drawing a picture from pieces of other ones.

Anyone who claims otherwise has no concept of creativity other than as an abstract concept. The creative impulse is fundamental to the human condition. Everyone has it. In some people it’s repressed, or withered, or undeveloped, but it’s always there.

Back in the early days of the pandemic, people posted all these stories about the “crazy stuff they were making!” It wasn’t crazy, that was just the urge to create, it’s always there, and capitalism finally got quiet enough that you could hear it.

“Making Art” is what humans do. The rest of society is there so we stay alive long enough to do so. It’s not the part we need to automate away so we can spend more time delivering value to the shareholders.

AI isn’t going to turn into skynet and take over the world. There won’t be killer robots coming for your life, or your job, or your kids.

However, the sort of soulless goons who thought it was a good idea to computer automate “writing poetry” before “fixing plumbing” are absolutely coming to take away your job, turn you into a gig worker, replace whoever they can with a chatbot, keep all the money for themselves.

I can’t think of anything more profoundly evil than trying to automate creativity and leaving humans to do the grunt manual labor.

Fuck those people. And fuck everyone who ever enabled them.

Covering the Exits

So! Adobe has quietly canceled their plans to acquire Figma. For everyone playing the home game, Figma is a startup that makes web-based design tools, and was one of the first companies to make some actual headway into Adobe’s domination of the market. (At least, since Adobe acquired Macromedia, anyway.) Much ink has been spilled on Figma “disrupting” Adobe.

Adobe cited regulatory concerns as the main reason to cancel the acquisition, which tracks with the broader story of the antitrust and regulatory apparatus slowly awakening from its long slumber.

On the one hand, this was blatantly a large company buying up their only outside competition in a decade. On the other hand, it’s not clear Figma had any long-term business plan other than “sell out to Adobe?”

Responses to this have been muted, but there’s a distinct set of “temporarily embarrassed” tech billionaires saying things like “well, tut tut, regulations are good in theory, but I can still sell my startup, right?”

There’s an entire business model that’s emerged over the last few decades, fueled by venture capital and low interest rates, where the company itself is the product. Grow fast, build up a huge user-base, then sell out to someone. Don’t worry about the long term, take “the exit.”

This is usually described in short-hand as “if you’re not paying for something, you’re not the customer, you’re the product”, which isn’t wrong, but it’s not totally right either. There’s one product: the company itself. The founders are making one thing, then they’re going to sell it to someone else.

And sure, because if that’s the plan, things get so easy. Who cares what the long-term vacation accrual schedule is, or the promotional tracks, or how we’re going to turn a profit? In five years, that’ll be Microsoft/Adobe/Facebook/Google’s problem, and we’ll be on a beach earning twenty percent.

And there’s a real thread of fear out there now that the “sell the company” exit might not be as easy a deal as it used to be?

There’s nothing I can think of that would have a more positive effect on the whole tech industry than taking “…and then sell the company” off the table as a startup exit. Imagine if that just… wasn’t an option? If startups had to start with “how are we going to become self-funding”, if VCs knew they weren’t going to walk away with a couple billion dollars of cash from Redmond?

I was going to put a longer rant here, but there must be something in the water because Ed Zitron covered all the same ground today, in more detail and angrier, in Software Is Beating The World:

Every single stupid, loathsome, and ugly story in tech is a result of the fundamentally broken relationship between venture capital and technology. And, as with many things, it started with a blog.

While I’m here, though, I’m going to throw one elbow that Ed didn’t: I’m not sure any book has had more toxic, unintended consequences than The Innovator’s Dilemma. While “Disruption Theory” remains an intellectually attractive description of how new products enter the market, it turns out it only had useful explanatory power once: when the iPhone shipped. Here in the twenties, if anyone is still using the term “Disruption” with a straight face they’re almost certainly full of crap, and are probably about to tell you about their “cool business hack” which actually ends up being “ignore labor laws until we get acquired.”

It’s time to stop talking about disruption, and start talking about construction. Stop eying the exits. What would it look like if people started building tech companies they expected their kids to take over?

2023’s strange box office

Weird year for the box office, huh? Back in July, we had that whole rash of articles about the “age of the flopbuster” as movie after movie face-planted. Maybe things hadn’t recovered from the pandemic like people hoped?

And then, you know, Barbenheimer made a bazillion dollars.

And really, nothing hit like it was supposed to all year. People kept throwing out theories. Elemental did badly, and it was “maybe kids are done with animation!” Ant-Man did badly, and it was “Super-Hero fatigue!” Then Spider-Verse made a ton of money disproving both. And Super Mario made a billion dollars. And then Elemental recovered on the long tail and ended up making half a billion? And Guardians 3 did just fine. But Captain Marvel flopped. Harrison Ford came back for one more Indiana Jones and no one cared.

Somewhere around the second weekend of Barbenheimer everyone seemed to throw up their hands as if to say “we don’t even know what’ll make money any more”.

Where does all that leave us? Well, we clearly have a post-pandemic audience that’s willing to show up and watch movies, but sure seems more choosy than they used to be. (Or choosy about different things?)

Here’s my take on some reasons why:

The Pandemic. I know we as a society have decided to act like COVID never happened, but it’s still out there. Folks may not admit it, but it’s still influencing decisions. Sure, it probably won’t land you in the hospital, but do you really want to risk your kid missing two weeks of school just so you can see the tenth Fast and the Furious in the theatre? It may not be the key decision input anymore, but that’s enough potential friction to give you pause.

Speaking of the theatre, the actual theater experience sucks most of the time. We all like to wax poetic about the magic of the shared theatre experience, but in actual theaters, not the fancy ones down in LA, that “experience” is kids talking, the guy in front of you on his phone, the couple behind you being confused, gross floors, and half an hour of the worst commercials you’ve ever seen before the picture starts out of focus and too dim.

On the other hand, you know what everyone did while they were stuck at home for that first year of COVID? Upgrade their home theatre rig. I didn’t spend a whole lot of money, but the rig in my living room is better than every mall theatre I went to in the 90s, and I can put the volume where I want it, stop the show when the kids need to go to the bathroom, and my snacks are better, and my chairs are more comfortable.

Finally, and I think this is the key one: the value proposition has gotten out of whack in a way I don’t think the industry has reckoned with. Let me put my cards down on the table here: I think I saw just about every movie released theatrically in the US between about 1997 and maybe 2005. I’m pro–movie theatre. It was fun and I enjoyed it, but also that was absolutely the cheapest way to spend 2-3 hours. Tickets were five bucks, you could basically fund a whole day on a $20 bill if you were deliberate about it.

But now, taking a family of four to a movie is in the $60-70 range. And, that’s a whole different category. That’s what a new video game costs. That’s what I paid for the new Zelda, which the whole family is still playing and enjoying six months later, hundreds of hours in. That’s Mario Kart with all the DLC, which we’ve also got about a million hours in. You’re telling me that I should pay the same amount of money that got me all that for one viewing of The Flash? Absolutely Not. I just told the kids we weren’t going to buy the new Mario before christmas, but I’m supposed to blow that on… well, literally anything that only takes up two hours?

And looking at that from the other direction, I’m paying twelve bucks a month for Paramount+, for mostly Star Trek–related reasons. But that also has the first six Mission: Impossible movies on it right now. Twelve bucks, you could cram ‘em all in a long weekend if you were serious about it. And that’s not really even a streaming thing, you could have netted six not-so-new release movies for that back in the Blockbuster days too. And like I said, I have some really nice speakers and a 4k projector, those movies look great in my living room. You’re trying to tell me that the new one is so much better that I need to pay five times what watching all the other movies cost me, just to see it now? As opposed to waiting a couple of months?

And I think that’s the key I’m driving towards here: movies in the theatre have found themselves with a premium price without offering a premium product.

So what’s premium even mean in this context? Clicking back and forth between Box Office Mojo’s domestic grosses for 2023 and 2019, this year didn’t end up being that much worse, it just wasn’t the movies people were betting on that made money.

There’s a line I can’t remember the source of that goes something to the effect of “hollywood doesn’t have a superhero movie problem, it has a ‘worse copy of movies we’ve already seen’ problem.” Which dovetails nicely with John Scalzi’s twitter quip about The Flash bombing: “…the fact is we’re in the “Paint Your Wagon” phase of the superhero film era, in which the genre is played out, the tropes are tired and everyone’s waiting for what the next economic engine of movies will be.”

Of course, when we say “Superhero”, we mostly mean Marvel Studios, since the recent DC movies have never been that good or successful. And Marvel did one of the dumbest things I’ve ever seen, which was to give everyone an off-ramp. For a decade they had everyone in a groove to go see two or three movies a year and keep up on what those Avengers and their buddies were up to. Sure, people would skip one or two here or there, a Thor, an Ant-Man, but everyone would click back in for one of the big team up movies. And then they made Endgame, and said “you’re good, story is over, you can stop now!” And so people did! The movie they did right after Endgame needed to be absolutely the best movie they had ever done, and instead it was Black Widow. Which was fine, but didn’t convince anyone they needed to keep watching.

And I’d extend all this out to not just Superheroes, but also “superhero adjacent” movies, your Fast and Furious, Mission: Impossible, Indiana Jones. Basically all the “big noise” action blockbusters. I mean, what’s different about this one versus the other half-dozen I’ve already seen?

(Indiana Jones is kind of funny for other reasons, because I think Disney dramatically underestimated how much the general audience knew or cared about Spielberg. His name on those movies mattered! The guy who made “The Wolverine” is fine and all, but I’m gonna watch that one at home. I’m pretty sure if Steve had directed it instead of going off to do West Side Story it would have made a zillion dollars.)

But on the other hand, the three highest grossing movies that weren’t Barbenheimer were Super Mario Bros, Spider-Verse, and Guardians of the Galaxy 3, so clearly superheroes and animation are still popular, just the right superheroes and animation. Dragging the superhero-movies-are-musicals metaphor to the limit, there were plenty of successful musicals after Paint Your Wagon, but they were the ones that did something interesting or different. They stopped being automatically required viewing.

At this point, I feel like we gotta talk about budgets for a second, but only for a second because it is not that interesting. If you don’t care about this, I’ll meet you down on the other side of the horizontal line.

Because the thing is, most of those movies that, ahem, “underperformed” cost a ton. The new M:I movie paid the salaries for everyone working on it through the whole COVID lockdown, so they get a pass. (Nice work, Tom Cruise!) Everyone else, though, what are you even doing? If you spend so much money making a movie that you need to be one of the highest grossing films of all time just to break even, maybe that’s the problem right there? Dial of Destiny cost 300 million dollars. Last Crusade cost forty eight. Adjusted for inflation, that’s (checks wolfram alpha) …$116 million? Okay, that amount of inflation surprised me too, but the point stands: is Dial three times as much movie as Last Crusade? Don’t bother answering that, no it is not, and that’s even before pointing out the cheap one was the one with Sean friggin’ Connery.

This is where everyone brings up Sound of Freedom. Let’s just go ahead and ignore, well, literally everything else about the movie and just point out that it made just slightly more money than the new Indiana Jones movie, but also only cost, what, 14 million bucks? Less than five percent of what Indy cost?

There’s another much repeated bon mot I can’t seem to find an origin for that goes something along the lines of “They used to make ten movies hoping one would be successful enough to pay for the other nine, but then decided to just make the one that makes money, which worked great until it didn’t.” And look, pulpy little 14 million dollar action movies are exactly the kind of movie they’re talking about there. Sometimes they hit a chord! Next time you’re tempted to make a sequel to a Spielberg/Lucas movie without them, maybe just scrap that movie and make twenty one little movies instead.

—

So, okay. What’s the point, what can we learn from this strange year in a strange decade? Well, people like movies. They like going to see movies. But they aren’t going to pay to see a worse version of something they can already watch at home on their giant surround-sound-equipped TV for “free”. Or risk getting sick for the privilege.

Looking at the movies that did well this year, it was the movies that had something to say, that had a take, movies that had ambitions beyond being “the next one.”

Hand more beloved brand names to indie film directors and let them do whatever they want. Or, make a movie based on something kids love that doesn’t already have a movie. Or make a biography about how sad it is that the guy who invented the atomic bomb lost his security clearance because iron man hated him. That one feels less applicable, but you never know. If you can build a whole social event around an inexplicable double-feature, so much the better.

And, look, basically none of this is new. The pandemic hyper-charged a whole bunch of trends, but I feel like I could have written a version of this after Thanksgiving weekend for any year in the past decade.

That’s not the point. This is:

My favorite movie of the year was Asteroid City. That was only allegedly released into theatres. It made, statistically speaking, no money. Those kinds of movies never do! They make it up on the long tail.

I like superhero/action movies as much as the next dork who knew who “Rocket Raccoon” was before 2014, but I’m not about to pretend they’re high art or anything. They’re junk food, sometimes well made very entertaining junk food, but let’s not kid ourselves about the rest of this complete breakfast.

“Actually good” movies (as opposed to “fun and loud”) don’t do well in the theatre, they do well on home video.

Go back and look at that 2019 list I linked above. On my monitor, the list cuts off at number fifteen before you have to scroll, and every one of those fifteen movies is garbage. Fun garbage, in most cases! On average, well made, popular, very enjoyable. (Well, mostly, Rise of Skywalker is the worst movie I’ve ever paid to see.)

That’s what was so weird about Barbenheimer, and Spider-Verse, and 2023’s box office. For once, objectively actually good movies made all the money.

Go watch Asteroid City at home, that’s what I’m saying.

What was happening: Twitter, 2006-2023

Twitter! What can I tell ya? It was the best of times, it was the worst of times. It was a huge part of my life for a long time. It was so full of art, and humor, and joy, and community, and ideas, and insight. It was also deeply flawed and profoundly toxic, but many of those flaws were fundamental to what made it so great.

It’s almost all gone now, though. The thing called X that currently lives where twitter used to be is a pale, evil, corrupted shadow of what used to be there. I keep trying to explain what we lost, and I can’t, it’s just too big.1 So let me sum up. Let me tell you why I loved it, and why I left. As the man2 said, let me tell you of the days of high adventure.

I can’t now remember when I first heard the word “twitter”. I distinctly remember a friend complaining that this “new twitter thing” had blown out the number of free SMS messages he got on his nokia flip phone, and that feels like a very 2006 conversation.

I tend to be pretty online, and have been since the dawn of the web, but I’m not usually an early adopter of social networks, so I largely ignored twitter for the first couple of years. Then, for reasons downstream of the Great Recession, I found myself unemployed for most of the summer of 2009.3 Suddenly finding myself with a surfeit of free time, I worked my way down that list of “things I’ll do if I ever get time,” including signing up for “that twitter thing.” (I think that’s the same summer I lit up my now-unused Facebook account, too.) Smartphones existed by then, and it wasn’t SMS-based anymore, but had a website, and apps.4

It was great. This was still in its original “microblogging” configuration, where it was essentially an Instant Messenger status with history. You logged in, and there were the statuses of the people you followed, in chronological order, and nothing else.

It was instantly clear that this wasn’t a replacement for something that already existed—this wasn't going to do away with your LiveJournal, or Tumblr, or Facebook, or blog. This was something new, something extra, something yes and. The question was, what was it for? Where did it fit in?

Personally, at first I used my account as a “current baby status” feed, updating extended family about what words my kids had learned that day. The early iteration of the site was perfect for that—terse updates to and from people you knew.

Over time, it accumulated various social & conversational features, not unlike a Katamari rolling around Usenet, BBSes, forums, discussion boards, other early internet communication systems. It kept growing, and it became less useful as a micro-blogging system and more of a free-wheeling world-wide discussion forum.

It was a huge part of my life, and for a while there, everyone’s life. Most of that time, I enjoyed it an awful lot, and got a lot out of it. Everyone had their own take on what it was Twitter had that set it apart, but for me it was three main things, all of which reinforced each other:

It was a great way to share work. If you made things, no matter how “big” you were, it was a great way to get your work out there. And, it was a great way to re-share other people’s work. As a “discovery engine” it was unmatched.

Looking at that the other way, it was an amazing content aggregator. It essentially turned into “RSS, but Better”; at the time RSS feeds had pretty much shrunk to just “google reader’s website”. It turns out that sharing things from your RSS feed into the feeds of other people, plus a discussion thread, was the key missing feature. If you had work of your own to share, or wanted to talk about something someone else had done elsewhere on the internet, twitter was a great way to share a link and talk about it. But, it also worked equally well for work native to twitter itself. Critically, the joke about the web shrinking to five websites full of screenshots of the other four5 was posted to twitter, which was absolutely the first of those five websites.

Most importantly, folks who weren’t anywhere else on the web were on twitter. Folks with day jobs, who didn’t consider themselves web content people were there; these people didn’t have a blog, or facebook, or instagram, but they were cracking jokes and hanging out in twitter.

There is a type of person whom twitter appealed to in a way that no other social networking did. A particular kind of weirdo that took Twitter’s limitations—all text, 140 or 280 characters max—and turned them into a playground.

And that’s the real thing—twitter was for writers. Obviously it was text based, and not a lot of text at that, so you had to be good at making language work for you. As much as the web was originally built around “hypertext”, most of the modern social web is built around photos, pictures, memes, video. Twitter was for people who didn’t want to deal with that, who could make the language sing in a few dozen words.

It had the vibe of getting to sit in on the funniest people you know’s group text, mixed with this free-wheeling chaos energy. On its best days, it had the vibe of the snarky kids at the back of the bus, except the bus was the internet, and most of the kids were world-class experts in something.

There’s a certain class of literary writer goofballs that all glommed onto twitter in a way none of us did with any other “social network.” Finally, something that rewarded what we liked and were good at!

Writers, comedians, poets, cartoonists, rabbis, just hanging out. There was a consistent informality to the place—this wasn’t the show, this was the hotel bar after the show. The big important stuff happened over in blogs, or columns, or novels, or wherever everyone’s “real job” was, this was where everyone let their hair down and cracked jokes.

But most of all, it was weird. Way, way weirder than any other social system has ever been or probably ever will be again, this was a system that ran on the same energy you use to make your friends laugh in class when you’re supposed to be paying attention.

It got at least one thing exactly right: it was no harder to sign into twitter and fire off a joke than it was to fire a message off to the group chat. Between the low bar to entry and the emphasis on words over everything else, it managed to attract a crowd of folks that liked computers, but didn’t see them as a path to self-actualization.

But what made twitter truly great were all the little (and not so little) communities that formed. It wasn’t the feature set, or the website, or the tech, it was the people, and the groups they formed. It’s hard to start making lists, because we could be here all night and still leave things out. In no particular order, here’s the communities I think I’ll miss the most:

- Weird Twitter—Twitter was such a great vector for being strange. Micro-fiction, non-sequiturs, cats sending their mothers to jail, dispatches from the apocalypse.

- Comedians—professional and otherwise, people who could craft a whole joke in one sentence.

- Writers—A whole lot of people who write for a living ended up on twitter in a way they hadn’t anywhere else on the web.

- Jewish Twitter—Speaking as a Jew largely disconnected from the local Jewish community, it was so much fun to get to hang out with the Rabbis and other Jews.

But also! The tech crowd! Legal experts! Minorities of all possible interpretations of the word sharing their experiences.

And the thing is, other than the tech crowd,6 most of those people didn’t go anywhere else. They hadn’t been active on the previous sites, and many of them drifted away again as the wheels started coming off twitter. There was a unique alchemy on twitter for forming communities that no other system has ever had.

And so the real tragedy of twitter’s implosion is that those people aren’t going somewhere else. That particular alchemy doesn’t exist elsewhere, and so the built up community is blowing away on the wind.

All that’s almost entirely gone now, though. I miss it a lot, but I realize I’ve been missing it for a year now. There had been a vague sense of rot and decline for a while. You can draw a pretty straight line from gamergate, to the 2016 Hugos, to the 2016 election, to everything around The Last Jedi, to now, as the site rotted out from the inside; a mounting sense that things were increasingly worse than they used to be. The Pandemic saw a resurgence of energy as everyone was stuck at home hanging out via tweets, but in retrospect that was a final gasp.7

Once The New Guy took over, there was a real sense of impending closure. There were plenty of accounts that made a big deal out of Formally Leaving the site and flouncing out to “greener pastures”, either to make a statement, or (more common) to let their followers know where they were. There were also plenty of accounts saying things like “you’ll all be back”, or “I was here before he got here and I’ll be here after he leaves”, but over the last year mostly people just drifted away. People just stopped posting and disappeared.

It’s like the loss of a favorite restaurant: the people who went there already know, and when people who weren’t there express disbelief, the response is to tell them how sorry you are they missed the party!

The closest comparison I can make to the decayed community is my last year of college. (Bear with me, this’ll make sense.) For a variety of reasons, mostly good, it took me 5 years to get my 4 year degree. I picked up a minor, did some other bits and bobs on the side, and it made sense to tack on an extra semester, and at that point you might as well do the whole extra year.

I went to a medium sized school in a small town.8 Among the many, many positive features of that school was the community. It seemed like everyone knew everyone, and you couldn’t go anywhere without running into someone you knew. More than once, when I didn’t have anything better to do, I’d just hike downtown and inevitably I’d run into someone I knew and the day would vector off from there.9

And I’d be lying if I said this sense of community wasn’t one of the reasons I stuck around a little longer—I wasn’t ready to give all that up. Of course, what I hadn’t realized was that not everyone else was doing that. So one by one, everyone left town, and by the end, there I was in downtown surrounded by faces I didn’t know. My lease had an end date, and I knew I was moving out of town on that day no matter what, so what, was I going to build up a whole new peer group with a short-term expiration date? That last six months or so was probably the weirdest, loneliest time of my whole life. When the lease ended, I couldn’t move out fast enough.

The point is: twitter got to be like that. I was only there for the people, and nearly all the people I was there for had already gone. Being the one to close out the party isn’t always the right move.

One of the things that made it so frustrating was that it had always had problems, but it had the same problems that any under-moderated semi-anonymous internet system had. “How to stop assholes from screwing up your board” is a 4 decade old playbook at this point, and twitter consistently failed to actually deploy any of the solutions, or at least deploy them at a scale that made a difference. The maddening thing was always that the only unique thing about twitter’s problems was the scale.

I had a soft rule that I could only read Twitter when using my exercise bike, and a year or two ago I couldn’t get to the end of the tweets from people I followed before I collapsed from exhaustion. Recently, I’d run out of things to read before I was done with my workout. People were posting less, and less often, but mostly they were just… gone. Quietly fading away as the site got worse.

In the end, though, it was the tsunami of antisemitism that got me. “Seeing only what you wanted to see” was always a skill on twitter, but the unfolding disaster in Israel and Gaza broke that. Not only did you have the literal nazis showing up and spewing their garbage without check, but you had otherwise progressive liberal leftists (accidentally?) doing the same thing, without pushback or attempt at discussion, because all the people that would have done that are gone. So instead it’s just a nazi sludge.10

There was so much great stuff on there—art, ideas, people, history, jokes. Work I never would have seen, things I wouldn’t have learned, books I wouldn’t have read, people I wouldn’t know about. I keep trying to encompass what’s been lost, make lists, but it’s too big. Instead, let me tell you one story about the old twitter:

One of the people I follow(ed) was Kate Beaton, originally known for the webcomic Hark A Vagrant!, most recently the author of Ducks (the best book I read last year). One day, something like seven years ago, she started enthusing about a book called Tough Guys Have Feelings Too. I don’t think she had a connection to the book? I remember it being an unsolicited rave from someone who had read it and was struck by it.

The cover is a striking piece of art of a superhero, head bowed, eyes closed, a tear rolling down his cheek. The premise of the book is what it says on the cover: even tough guys have feelings. The book goes through a set of stereotypical “tough guys” (pirates, ninjas, wrestlers, superheroes, race car drivers, lumberjacks) and shows them having bad days, breaking their tools, crashing their cars, hurting themselves. The tough guys have to stop, and maybe shed a tear, or mourn, or comfort themselves or each other, and the text points out, if even the tough guys can have a hard time, we shouldn’t feel bad for doing the same. The art is striking and beautiful, the prose is well written, the theme clearly and well delivered.

I bought it immediately. You see, my at-the-time four-year-old son was a child of Big Feelings, but frequently had trouble handling those feelings. I thought this might help him. Overnight, this book became almost a mantra. For years after this, when he was having Big Feelings, we’d read this book, and it would help him calm down and take control of what he was feeling.

It’s not an exaggeration to say this book changed all our lives for the better. And in the years since then, I’ve often been struck that despite all the infrastructure of modern capitalism (marketing, book tours, reviews, blogs), none of those ever got that book into my hands. There’s only been one system where an unsolicited rave from a web cartoonist being excited about a book outside their normal professional wheelhouse could reach someone they’ve never met or heard of and change that person’s son’s life.

And that’s gone now.

-

I’ve been trying to write something about the loss of twitter for a while now. The first draft of this post has a date back in May, to give you some idea.

-

Mako.

-

As an aside, everyone should take a summer off every decade or so.

-

I tried them all, I think, but settled on the late, lamented Tweetbot.

-

The tech crowd all headed to mastodon, but didn’t build that into a place that any of those other communities could thrive. Don’t @-me, it’s true.

-

In retrospect, getting Morbius to flop a second time was probably the high point, it was all downhill after that.

-

CSU Chico in Chico, California!

-

Yes, this is what we did back in the 90s before cellphones and texting, kids.

-

This is out of band for the rest of the post, so I’m jamming all this into a footnote:

Obviously, criticizing the actions of the government of Israel is no more antisemitic than criticizing Hamas would be islamophobic. But objecting to the actions of Israel’s government with “how do the Jews not know they’re the bad guys” sure as heck is, and I really didn’t need to see that kind of stuff being retweeted by the eve6 guy.

A lot of things are true. Hamas is not Palestine is not “The Arabs”, and the Netanyahu administration is not Israel is not “The Jews.” To be clear, Hamas is a terror organization, and Israel is on the functional equivalent of Year 14 of the Trump administration.

The whole disaster hits at a pair of weird seams in the US: the Israel-Palestine conflict maps very strangely to the American political left-right divide, and the US left has always had a deep-rooted antisemitism problem. As such, what really got me was watching all the comments criticizing “the Jews” for this conflict come from _literally_ the same people who spent four years wearing “not my president” t-shirts and absolving themselves from any responsibility for their government’s actions because they voted for “the email lady”. They get the benefit of infinite nuance, but the Jews are all somehow responsible for Bibi’s incompetent choices.

Fall ’23 Good TV Thursdays: “The real TVA was the friends we made along the way”

For a moment there, we had a real embarrassment of riches: three of the best genre shows of the year so far (Loki, Lower Decks, and Our Flag Means Death) were not only all on in October, but they all posted the same night: Thursdays. While none of the people making those three shows thought of themselves as part of a triple-feature, that’s where they ended up, and they contrasted and complemented each other in interesting ways. It’s been a week or two now, let’s get into the weeds.

Heavy Spoilers Ahoy for Loki S2, Lower Decks S4, and Our Flag Means Death S2

Loki season 2

The first season of Loki was an unexpected delight. Fun, exciting, and different, it took Tom Hiddleston’s Loki and put him, basically, in a minor league ball version of Doctor Who.

(The minor league Who comparison was exacerbated later when the press release announcing that Loki S1 director Kate Herron was writing an episode of Who had real “we were so impressed with their work we called them up to the majors” energy.)

Everything about the show worked. The production design was uniformly outstanding, from the TVA’s “fifties-punk” aesthetic, to the cyberpunk city and luxury train on the doomed planet of Lamentis-1, to Alabama of the near future, to casually tossing off Pompeii at the moment the volcano exploded.

The core engine of the show was genius: stick Loki in what amounted to a buddy time cop show with Owen Wilson’s Mobius and let things cook. But it wasn’t content to stop there; it took all the character development Loki had picked up since the first Avengers, and worked outwards from “what would you do if you found out your whole life was a waste, and then got a second chance?” What does the Norse god of chaos do when he gets a second chance, but also starts working with The Man? The answer is, he turns into Doctor Who.

And, like the Doctor, Loki himself had a catalytic effect on the world around him; not the god of “mischief”, necessarily, but certainly a force for chaos; every other character who interacted with him was changed by the encounter, learning things they’d have rather not learned and having to change in one way or another having learned it.

While not the showiest, or most publicized, the standout for me was Wunmi Mosaku’s Hunter B-15, who went from a true believer soldier to standing in the rain outside a futuristic Wal-Mart asking someone she’d been trying to kill (sorry, “prune”) to show her the truth of what had been done to her.

The first season also got as close as I ever want to get to a Doctor Who origin—not from Loki, but in the form of Mobius. He also starts as a dedicated company man, unorthodox maybe, but a true believer in the greater mission. The more he learns, the more he realizes that the TVA were the bad guys all along, and ends up in full revolt against his former colleagues; by the end I was half expecting him to steal a time machine and run off with his granddaughter.

But look, Loki as a Marvel character never would have shown up again after the first Thor and the Avengers if Tom Hiddleston hadn’t hit it out of the park as hard as he did. Here, he finally gets a chance to be the lead, and he makes the most of the opportunity. He should have had a starring vehicle long before this, and it manages to make killing Loki off in the opening scene of Infinity War even stupider in retrospect than it was at the time.

All in all, a huge success (I’m making a note here) and a full-throated endorsement of Marvel’s plan for Disney+ (Especially coming right after the nigh-unwatchable Falcon and the Winter Soldier).

Season 2, then, was a crushing disappointment.

So slow, so boring. All the actors who are not Tom Hiddleston are visibly checked out; thinking about what’s next. The characters, so vibrant in the first season, are hollowed-out shells of themselves.

As jwz quips, there isn’t anything left of this show other than the leftover production design.

As an example of the slide, I was obsessed with Loki’s season 1 look where he had, essentially, a Miami Vice under-shoulder holster for his sword under his FBI-agent style jacket, with that square tie. Just a great look, a perfect encapsulation of the show’s mashup of influences and genres. And this year, they took that away and he wore a kinda boring Doctor Who cosplay coat. The same basic idea, but worse in every conceivable way.

And the whole season was like that, the same ideas but worse.

Such a smaller scope this year, nothing on the order of the first season’s “city on a doomed planet.” The show seemed trapped inside the TVA, sets we had seen time and time before. Even the excursion to the World’s Fair seemed claustrophobic. And wasted, those events could have happened anywhere. Whereas the first season was centered around what Loki would do with a second chance armed with the knowledge that his life came to nothing, here things just happened. Why were any of these people doing any of these things? Who knows? Motivations are nonexistent, characters have been flattened out to, basically, the individual actor’s charisma and not much else. Every episode I wanted to sit the writer down and dare them to explain why any character did what they did.

The most painful was probably poor B-15, who was a long way from heartbreaking revelations in the rain in front of futuristic Wal-Marts; this year the character has shrunk to a sub-Riker level of showing up once a week to bark exposition at the audience. She’s basically Sigourney Weaver’s character from Galaxy Quest, but meant seriously, repeating what we can already see on the computer screen.

And Ke Huy Quan, fresh from winning an Oscar for his stunning performance in Everything Everywhere All at Once, is maybe even more wasted, as he also has to recite plot-mechanic dialogue, but he doesn’t even have a well-written version of his character to remember.

And all the female characters were constantly in conflict with each other, mostly over men? What was that even about?

Actually, I take that back, the most disappointing was Tara Strong’s Miss Minutes, a whimsical and mysterious character who became steadily more menacing over the course of the first season, here reduced to less than nothing, practically absent from the show, suddenly pining for Jonathan Majors, and then casually murdered (?) by the main characters in an aside while the show’s attention was somewhere else.

There was a gesture towards an actually interesting idea in the form of “the god of chaos wants to re-fund the police”, but the show didn’t even seem to notice that it had that at hand.

The second to last episode was where I finally lost patience. The TVA has seemingly been destroyed, and Loki has snapped backwards in time. Meeting each of the other characters as who they were before they were absorbed into the TVA, Loki spends the episode trying to get them to remember him and to get back to the “present” to save the TVA. Slowly, painfully, the show arrived at a conclusion where Hiddleston looked the camera in the eye and delivered the punchline that “The real TVA was the friends we made along the way”.

And, what? Stepping past the deeply banal moral, I flatly refuse to believe that these characters, whom Loki has known for, what, a couple of days? Are such great friends of his that he manages to learn how to time travel from sheer will to rescue them. These people? More so than his brother, more so than anyone else from Asgard? (This is where the shared universe fails the show, we know too much about the character to buy what this show is selling.)

The last episode was actually pretty good; this was the kind of streaming show that was really a movie idea with 4 hours of foreplay before getting to the real meat. Loki choosing to shoulder the responsibility for the multiverse and get his throne at the center of the world tree as the god of time (?) is a cool visual, but utterly squanders the potential of the show: Loki and Mobius having cool timecop adventures.