Fully Automated Insults to Life Itself

In 20 years’ time, we’re going to be talking about “generative AI” in the same tone of voice we currently use to talk about asbestos. A bad idea that initially seemed promising which ultimately caused far more harm than good, and that left a swathe of deeply embedded pollution across the landscape that we’re still cleaning up.

It’s the final apotheosis of three decades of valuing STEM over the Humanities, in parallel with the broader tech industry being gutted and replaced by a string of venture-backed pyramid schemes, casinos, and outright cons.

The entire technology is utterly without value and needs to be scrapped, legislated out of existence, and the people involved need to be forcibly invited to find something better to spend their time on. We’ve spent decades operating under the unspoken assumption that just because we can build something, that means it’s inevitable and we have to build it first before someone else does. It’s time to knock that off, and start asking better questions.

AI is the ultimate form of the joke about the restaurant where the food is terrible and also the portions are too small. The technology has two core problems, both of which are intractable:

- The output is terrible

- It’s deeply, fundamentally unethical

Probably the definitive article on generative AI’s quality, or profound lack thereof, is Ted Chiang’s ChatGPT Is a Blurry JPEG of the Web; that’s almost a year old now, and everything that’s happened in 2023 has only underscored his points. Fundamentally, we’re not talking about vast cyber-intelligences, we’re talking Sparkling Autocorrect.

Let me provide a personal anecdote.

Earlier this year, a coworker of mine was working on some documentation, and had worked up a fairly detailed outline of what needed to be covered. As an experiment, he fed that outline into ChatGPT, intending to publish the output, and I offered to look over the result.

At first glance it was fine. Digging in, though, it wasn’t great. It wasn’t terrible either; nothing in it was technically incorrect, but it had the quality of a high school book report written by someone who had only read the back cover. Or like documentation written by a tech writer who had a detailed outline they didn’t understand and a word count to hit? It repeated itself, it used far too many words to cover very little ground. It was, for lack of a better word, just kind of a “glurge”. Just room-temperature tepidarium generic garbage.

I started to jot down some editing notes, as you do, and found that I would stare at a sentence, then the whole paragraph, before crossing the paragraph out and writing “rephrase” in the margin. To try and be actually productive, I took a section and started to rewrite it in what I thought was a better, more concise manner: removing duplicates, omitting needless words. De-glurgifying.

Of course, I discovered I had essentially reconstituted the outline.

I called my friend back and found the most professional possible way to tell him he needed to scrap the whole thing and start over.

It left me with a strange feeling, that we had this tool that could instantly generate a couple thousand words of worthless text that at first glance seemed to pass muster. Which is so, so much worse than something written by a junior tech writer who doesn’t understand the subject, because this was produced by something that you can’t talk to, you can’t coach, that will never learn.

On a pretty regular basis this year, someone would pop up and say something along the lines of “I didn’t know the answer, and the docs were bad, so I asked the robot and it wrote the code for me!” and then they would post some screenshots of ChatGPT’s output full of a terribly wrong answer. Humane’s AI Pin demo was full of wrong answers, for heaven’s sake. And so we get this trend where ChatGPT manages to be an expert in things you know nothing about, but a moron about things you’re an expert in. I’m baffled by the responses to the GPT-n “search” “results”; they’re universally terrible and wrong.

And this is all baked in to the technology! It’s a very, very fancy set of pattern recognition based on a huge corpus of (mostly stolen?) text, computing the most probable next word, but not in any way considering if the answer might be correct. Because it has no way to; that’s totally outside the bounds of what the system can achieve.
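To make “most probable next word” concrete, here’s a toy sketch. It is emphatically not how ChatGPT is built (real systems use enormous neural networks over tokens, not a table of word counts), and the function names and the tiny corpus are made up for illustration. But it shows the shape of the problem: the code picks whatever followed most often in its training text, and at no point does anything check whether that’s true.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    following = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following


def most_probable_next(following, word):
    """Return the word seen most often after `word`, or None if unseen."""
    candidates = following.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


# Hypothetical training text: the wrong claim simply appears more often.
corpus = "the sky is green . the sky is green . the sky is blue ."
model = train_bigrams(corpus)

# The "answer" is whatever was most frequent, not whatever is correct.
print(most_probable_next(model, "is"))  # prints "green"
```

Feed it more wrong text than right text and it will confidently hand the wrong text back.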

A year and a bit later, and the web is absolutely drowning in AI glurge. Clarkesworld had to suspend submissions for a while to get a handle on blocking the tide of AI garbage. Page after page of fake content with fake images, content no one ever wrote and only meant for other robots to read. Fake articles. Lists of things that don’t exist, recipes no one has ever cooked.

And we were already drowning in “AI” “machine learning” glurge, and it all sucks. The autocorrect on my phone got so bad when they went from the hard-coded list to the ML one that I had to turn it off. Google’s search results are terrible. The “we found this answer for you” thing at the top of the search results is terrible.

It’s bad, and bad by design, it can’t ever be more than a thoughtless mashup of material it pulled in. Or even worse, it’s not wrong so much as it’s all bullshit. Not outright lies, but vaguely truthy-shaped “content”, freely mixing copied facts with pure fiction, speech intended to persuade without regard for truth: Bullshit.

Every generated image would have been better and funnier if you gave the prompt to a real artist. But that would cost money—and that’s not even the problem, the problem is that would take time. Can’t we just have the computer kick something out now? Something that looks good enough from a distance? If I don’t count the fingers?

My question, though, is this: what future do these people want to live in? Is it really this? Swimming in a sea of glurge? Just endless mechanized bullshit flooding every corner of the Web? Who looked at the state of the world here in the Twenties and thought “what the world needs right now is a way to generate Infinite Bullshit”?

Of course, the fact that the results are terrible-but-occasionally-fascinating obscures the deeper issue: It’s a massive plagiarism machine.

Thanks to copyleft and free & open source, the tech industry has a pretty comprehensive—if idiosyncratic—understanding of copyright, fair use, and licensing. But that’s the wrong model. This isn’t about “fair use” or “transformative works”, this is about Plagiarism.

This is a real “humanities and the liberal arts vs technology” moment, because STEM really has no concept of plagiarism. Copying and pasting from the web is a legit way to do your job.

(I mean, stop and think about that for a second. There’s no other industry on earth where copying other people’s work verbatim into your own is a widely accepted technique. We had a sign up a few jobs back that read “Expert level copy and paste from stack overflow” and people would point at it when other people had questions about how to solve a problem!)

We have this massive cultural disconnect that would be interesting or funny if it wasn’t causing so much ruin. This feels like nothing so much as the end result of valuing STEM over the Humanities and Liberal Arts in education for the last few decades. Maybe we should have made sure all those kids we told to “learn to code” also had some, you know, ethics? Maybe had read a couple of books written since they turned fourteen?

So we land in a place where a bunch of people convinced they’re the princes of the universe have sucked up everything written on the internet and built a giant machine for laundering plagiarism; regurgitating and shuffling the content they didn’t ask permission to use. There’s a whole end-state libertarian angle here too; just because it’s not explicitly illegal, that means it’s okay to do it, ethics or morals be damned.

“It’s fair use!” Then the hell with fair use. I’d hate to lose the wayback machine, but even that respects robots.txt.

I used to be a hard core open source, public domain, fair use guy, but then the worst people alive taught a bunch of if-statements to make unreadable counterfeit Calvin & Hobbes comics, and now I’m ready to join the Butlerian Jihad.

Why should I bother reading something that no one bothered to write?

Why should I bother looking at a picture that no one could be bothered to draw?

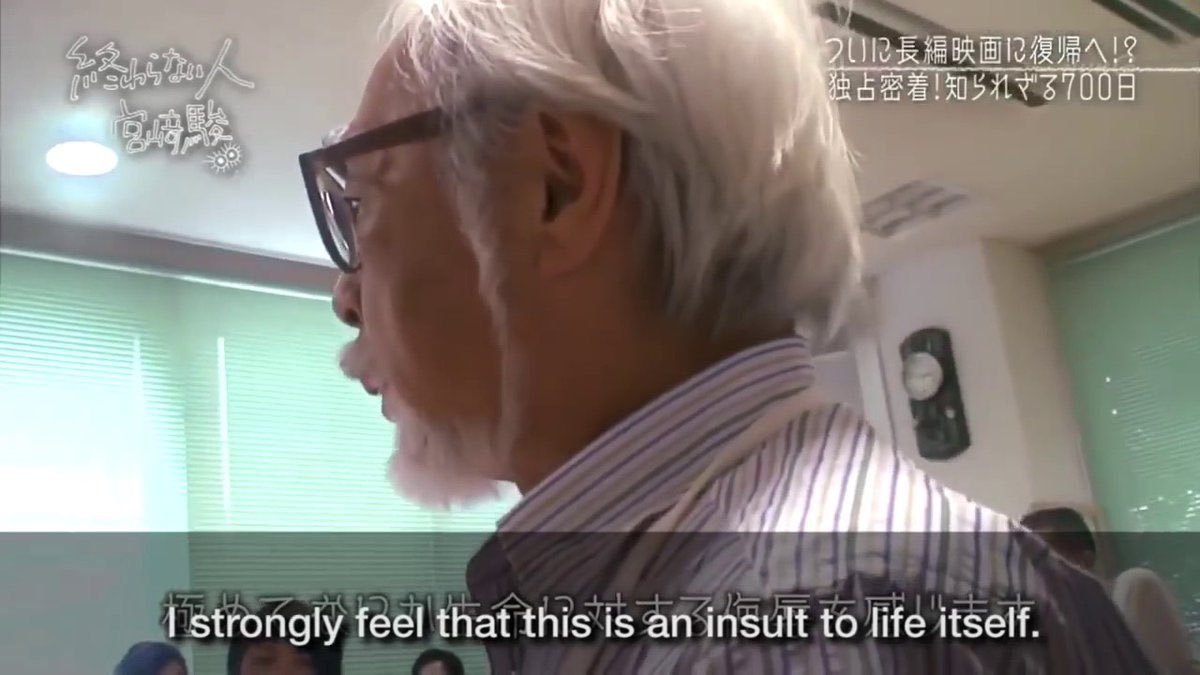

Generative AI and its ilk are the final apotheosis of the people who started calling art “content”, and meant it.

These are people who think art or creativity are fundamentally a trick, a confidence game. They don’t believe or understand that art can be about something. They utterly reject the concept of “about-ness”; the basic concept of “theme” is beyond their comprehension. The idea that art might contain anything other than its most surface qualities never crosses their mind. The sort of people who would say “Art should soothe, not distract”. Entirely about the surface aesthetic over anything.

(To put that another way, these are the same kind of people who vote Republican but listen to Rage Against the Machine.)

Don’t respect or value creativity.

Don’t respect actual expertise.

Don’t understand why they can’t just have what someone else worked for. It’s even worse than not wanting to pay for it, these creatures actually think they’re entitled to it for free because they know how to parse a JSON file. It feels like the final end-point of a certain flavor of free software thought: no one deserves to be paid for anything. A key cultural and conceptual point past “information wants to be free” and “everything is a remix”. Just a machine that endlessly spits out bad copies of other work.

They don’t understand that these are skills you can learn, you have to work at, become an expert in. Not one of these people who spend hours upon hours training models or crafting prompts ever considered using that time to learn how to draw. Because if someone else can do it, they should get access to that skill for free, with no compensation or even credit.

This is why those machine generated Calvin & Hobbes comics were such a shock last summer; anyone who had understood a single thing about Bill Watterson’s work would have understood that he’d be utterly opposed to something like that. It’s difficult to fathom someone who liked the strip enough to do the work to train up a model to generate new ones while still not understanding what it was about.

“Consent” doesn’t even come up. These are not people you should leave your drink uncovered around.

But then you combine all that with the fact that we have a whole industry of neo-philes, desperate to work on something New and Important, terrified their work might have no value.

(See also: the number of abandoned javascript frameworks that re-solve all the problems that have already been solved.)

As a result, tech has an ongoing issue with cool technology that’s a solution in search of a problem, but ultimately is only good for some kind of grift. The classical examples here are the blockchain, bitcoin, NFTs. But the list is endless: so-called “4th generation languages”, “rational rose”, the CueCat, basically anything that ever got put on the cover of Wired.

My go-to example is usually bittorrent, which seemed really exciting at first, but turned out to only be good at acquiring TV shows that hadn’t aired in the US yet. (As they say, “If you want to know how to use bittorrent, ask a Doctor Who fan.”)

And now generative AI.

There’s that scene at the end of Fargo, where Frances McDormand is scolding The Shoveler for “all this for such a tiny amount of money”, and that’s how I keep thinking about the AI grift carnival. So much stupid collateral damage we’re gonna be cleaning up for years, and it’s not like any of them are going to get Fuck You(tm) rich. No one is buying an island or founding a university here, this is all so some tech bros can buy the deluxe package on their next SUV. At least crypto got some people rich, and was just those dorks milking each other; here we all gotta deal with the pollution.

But this feels weirdly personal in a way the dunning-krugerrands were not. How on earth did we end up in a place where we automated art, but not making fast food, or some other minimum wage, minimum respect job?

For a while I thought this was something along the lines of one of the asides in David Graeber’s Bullshit Jobs, where people with meaningless jobs hate it when other people have meaningful ones. The phenomenon of “If we have to work crappy jobs, we want to pull everyone down to our level, not pull everyone up”. See also: “waffle house workers shouldn’t make 25 bucks an hour”, “state workers should have to work like a dog for that pension”, etc.

But no, these are not people with “bullshit jobs”, these are upper-middle class, incredibly comfortable tech bros pulling down a half a million dollars a year. They just don’t believe creativity is real.

But because all that apparently isn’t fulfilling enough, they make up ghost stories about how their stochastic parrots are going to come alive and conquer the world, how we have to build good ones to fight the bad ones, but they can’t be stopped because it’s inevitable. Breathless article after article about whistleblowers worried about how dangerous it all is.

Just the self-declared best minds of our generation failing the mirror test over and over again.

This is usually where someone says something about how this isn’t a problem and we can all learn to be “prompt engineers”, or “advisors”. The people trying to become a prompt advisor are the same sort who would be proud they convinced Immortan Joe to strap them to the back of the car instead of the front.

This isn’t about computers, or technology, or “the future”, or the inevitability of change, or the march of progress. This is about what we value as a culture. What do we want?

“Thus did a handful of rapacious citizens come to control all that was worth controlling in America. Thus was the savage and stupid and entirely inappropriate and unnecessary and humorless American class system created. Honest, industrious, peaceful citizens were classed as bloodsuckers, if they asked to be paid a living wage. And they saw that praise was reserved henceforth for those who devised means of getting paid enormously for committing crimes against which no laws had been passed. Thus the American dream turned belly up, turned green, bobbed to the scummy surface of cupidity unlimited, filled with gas, went bang in the noonday sun.” ― Kurt Vonnegut, God Bless You, Mr. Rosewater

At the start of the year, the dominant narrative was that AI was inevitable, this was how things are going, get on board or get left behind.

That’s… not quite how the year went?

AI was a centerpiece in both Hollywood strikes, and both the Writers and Actors basically ran the table, getting everything they asked for, and enshrining a set of protections from AI into a contract for the first time. Excuse me, not protection from AI, but protection from the sort of empty suits that would use it to undercut working writers and performers.

Publisher after publisher has been updating their guidelines to forbid AI art. A remarkable number of other places that support artists instituted guidelines to ban or curtail AI. Even Kickstarter, which plunged into the blockchain with both feet, seemed to have learned their lesson and rolled out some pretty stringent rules.

Oh! And there’s some actual high-powered lawsuits bearing down on the industry, not to mention investigations of, shall we say, “unsavory” material in the training sets?

The initial shine seems to be off, where last year was all about sharing goofy AI-generated garbage, there’s been a real shift in the air as everyone gets tired of it and starts pointing out that it sucks, actually. And that the people still boosting it all seem to have some kind of scam going. Oh, and in a lot of cases, it’s literally the same people who were hyping blockchain a year or two ago, and who seem to have found a new use for their warehouses full of GPUs.

One of the more heartening and interesting developments this year was the (long overdue) start of a re-evaluation of the Luddites. Despite the popular stereotype, they weren’t anti-technology, but anti-technology-being-used-to-disenfranchise-workers. This seems to be the year a lot of people sat up and said “hey, me too!”

AI isn’t the only reason “hot labor summer” rolled into “eternal labor september”, but it’s pretty high on the list.

There’s an argument that’s sometimes made that we don’t have any way as a society to throw away a technology that already exists, but that’s not true. You can’t buy gasoline with lead in it, or hairspray with CFCs, and my late lamented McDLT vanished along with the Styrofoam that kept the hot side hot and the cold side cold.

And yes, asbestos made a bunch of people a lot of money and was very good at being children’s pyjamas that didn’t catch fire, as long as that child didn’t need to breathe as an adult.

But we’ve never done that for software.

Back around the turn of the century, there was some argument around if cryptography software should be classified as a munition. The Feds wanted stronger export controls, and there was a contingent of technologists who thought, basically, “Hey, it might be neat if our compiler had first and second amendment protection”. Obviously, that didn’t happen. “You can’t regulate math! It’s free expression!”

I don’t have a fully developed argument on this, but I’ve never been able to shake the feeling that that was a mistake, that we all got conned while we thought we were winning.

Maybe some precedent for heavily “regulating math” would be really useful right about now.

Maybe we need to start making some.

There’s been a persistent belief in computer science, ever since computers were invented, that brains are a really fancy powerful computer and if we can just figure out how to program them, intelligent robots are right around the corner.

There’s an analogy that floats around that says if the human mind is a bird, then AI will be a plane: flying, but a very different application of the same principles.

The human mind is not a computer.

At best, AI is a paper airplane. Sometimes a very fancy one! With nice paper and stickers and tricky folds! But the key is that a hand has to throw it.

The act of a person looking at a bunch of art and trying to build their own skills is fundamentally different than a software pattern recognition algorithm drawing a picture from pieces of other ones.

Anyone who claims otherwise has no concept of creativity other than as an abstract concept. The creative impulse is fundamental to the human condition. Everyone has it. In some people it’s repressed, or withered, or undeveloped, but it’s always there.

Back in the early days of the pandemic, people posted all these stories about the “crazy stuff they were making!” It wasn’t crazy, that was just the urge to create, it’s always there, and capitalism finally got quiet enough that you could hear it.

“Making Art” is what humans do. The rest of society is there so we stay alive long enough to do so. It’s not the part we need to automate away so we can spend more time delivering value to the shareholders.

AI isn’t going to turn into skynet and take over the world. There won’t be killer robots coming for your life, or your job, or your kids.

However, the sort of soulless goons who thought it was a good idea to computer automate “writing poetry” before “fixing plumbing” are absolutely coming to take away your job, turn you into a gig worker, replace whoever they can with a chatbot, keep all the money for themselves.

I can’t think of anything more profoundly evil than trying to automate creativity and leaving humans to do the grunt manual labor.

Fuck those people. And fuck everyone who ever enabled them.

It’ll Be Worth It

An early version of this got worked out in a sprawling Slack thread with some friends. Thanks for helping me work out why my perfectly nice neighbor’s garage banner bugs me, fellas.

There’s this house about a dozen doors down from mine. Friendly people, I don’t really know them, but my son went to school with their youngest kid, so we kinda know each other in passing. They frequently have the door to the garage open, and they have some home gym equipment, some tools, and a huge banner that reads in big block capital letters:

NOBODY CARES WORK HARDER

My reaction is always to recoil slightly. Really, nobody? Even at your own home, nobody? And I think “you need better friends, man. Maybe not everyone cares, but someone should.” I keep wanting to say “hey man, I care. Good job, keep it up!” It feels so toxic in a way I can’t quite put my finger on.

And, look, I get it. It’s a shorthand to communicate that we’re in a space where the goal is what matters, and the work is assumed. It’s a very sports-oriented worldview, where the message is that the complaints don’t matter, only the results matter. But my reaction to things like that from coaches in a sports context was always kinda “well, if no one cares, can I go home?”

(Well, that, and I would always think “I’d love to see you come on over to my world and slam into a compiler error for 2 hours and then have me tell you ‘nobody cares, do better’ when you ask for help and see how you handle that. Because you would lose your mind”)

Because that’s the thing: if nobody cared, we wouldn’t be here. We’re here because we think everyone cares.

The actual message isn’t “nobody cares,” but:

“All this will be worth it in the end, you’ll see”

Now, that’s a banner I could get behind.

To come at it another way, there’s the goal and there’s the work. Depending on the context, people care about one or the other. I used to work with some people who would always put the number of hours spent on a project as the first slide of their final read-outs, and the rest of us used to make terrible fun of them. (As did the execs they were presenting to.)

And it’s not that the “seventeen million hours” wasn’t worth celebrating, or that we didn’t care about it, but that the slide touting it was in the wrong venue. Here, we’re in an environment where we only care about the end goal. High fives for working hard go in a different meeting, you know?

But what really, really bugs me about that banner specifically, and things like it, is that they’re so fake. If you really didn’t think anyone cares, you wouldn’t hang a banner up where all your neighbors could see it over your weight set. If you really thought no one cared, you wouldn’t even own the exercise gear, you’d be inside doing something you want to do! Because no one has to hang a “WORK HARDER” banner over a Magic: The Gathering tournament, or a plant nursery, or a book club. But no, it’s there because you think everyone cares, and you want them to think you’re cool because you don’t have feelings. A banner like that is just performative; you hang something like that because you want others to care about you not caring.

There’s a thing where people try and hold up their lack of emotional support as a kind of badge of honor, and like, if you’re at home and really nobody cares, you gotta rethink your life. And if people do care, why do you find pretending they don’t motivating? What’s missing from your life such that pretending you’re on your own is better than embracing your support?

The older I get, the less tolerance I have for people who think empathy is some kind of weakness, that emotional isolation is some kind of strength. The only way any of us are going to get through any of this is together.

Work Harder.

Everyone Cares.

It’ll Be Worth It.

A Story About Beep the Meep

Up until last weekend, Doctor Who’s “Beep the Meep” was an extremely deep cut. Especially for American fans who didn’t have access to Doctor Who Monthly back in the 80s, you had to be a very particular kind of invested to know who The Meep was. And, you know, guilty as charged.

We bought our first car with a lock remote maybe fifteen years ago? And when we get home, I’ll frequently ask something like “did you beep the car?” And I always want to make the joke “did you beep the meep”. And I always stop myself, because look, my family already knows more about Doctor Who than they ever, ever wanted to, but the seminar required to explain that joke? “So, the meep is a cute little fuzzy guy, but he’s actually the galaxy’s most wanted war criminal, and so the Doctor gets it wrong at first, and the art is done by the watchmen guy before he teamed up with The Magus, and it’s a commentary on the show using ugly as a signifier of evil, and actually it came before ET and gremlins, and…”

And just, no. Nope, no deal. That’s beyond the pale. I could explain the joke, but not in a way where it would ever be close to funny. So instead, about once a month, I stop myself from asking if the meep got beeped.

Flash forward to this week.

We all piled out of the car after something or other. Bundling into the house. Like normal, the joke flashed through my mind and I was about to dismiss it. But then it suddenly came to me: this was it. They all know who the Meep is now! Through the strangest of happenstances, a dumb joke I thought of in 2008 and haven’t been able to use finally, finally, became usable. This was my moment! A profound sense of satisfaction filled my body, the deep sense of fulfillment of checking off a box long un-checked.

“Hey!” I said, “Did you Beep the Meep?”

...

Turns out, even with context, still not that funny.

Email Verification

The best and worst thing about email is that anyone can send an email to anyone else without permission. The people designing email didn’t think this was a problem, of course. They were following the pattern of all the other communications technology of the time—regular mail, the phones, telegrams. Why would I need permission to send a letter? That’s crazy.

Of course, here in the Twenties, three of those systems are choked by robot-fueled marketing spam, and the fourth no longer exists. Of all the ways we ended up living in a cyberpunk dystopia, the fact that no one will answer their phone anymore because they don’t want to be harassed by a robot is the most openly absurd; less Gibson, more Vonnegut-meets-Ballard.

(I know I heard that joke somewhere, but I cannot remember where. Sorry, whoever I stole that from!)

Arguably, there are whole social networks that were built outward from the basic concept of “what if you had to get permission to send a message directly to someone?”

With email though, I’m always surprised that systems don’t require you to verify your email before sending messages to it. This is actually very easy to do! Most web systems these days use the user’s email address as their identity. This is very convenient, because someone else is handling the problem of making sure your ids are unique, and you always have a way to contact your users. All you have to do is make them click a link in an email you sent them, and now you know they gave you a live address and it’s really them. Easy!

(And look, as a bonus, if you email them “magic links” you also don’t have to worry about a whole lot of password garbage. But that’s a whole different topic.)
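For the sake of argument, here’s roughly what the “click a link in an email” step can look like. This is a minimal sketch using only the Python standard library, not anyone’s production system: the secret key, the 24-hour expiry, the function names, and the example.com link are all assumptions I made up for illustration, and the part that actually sends the email is left out. The idea is to sign the address plus a timestamp, put the result in the link, and only trust the address once that exact token comes back.

```python
import base64
import hashlib
import hmac
import time
from typing import Optional

SECRET_KEY = b"replace-with-a-real-secret"  # hypothetical; keep the real one out of source control
TOKEN_LIFETIME_SECONDS = 24 * 60 * 60       # assumed expiry window, pick your own


def _sign(payload: bytes) -> bytes:
    """HMAC-SHA256 signature of the payload, as hex-encoded bytes."""
    return hmac.new(SECRET_KEY, payload, hashlib.sha256).hexdigest().encode()


def make_token(email: str, now: Optional[float] = None) -> str:
    """Build the token that goes in the verification link."""
    issued = int(time.time() if now is None else now)
    payload = f"{email}|{issued}".encode()
    encoded = base64.urlsafe_b64encode(payload).decode()
    return f"{encoded}.{_sign(payload).decode()}"


def verify_token(token: str, now: Optional[float] = None) -> Optional[str]:
    """Return the verified email address, or None if the token is bad or expired."""
    try:
        encoded, signature = token.rsplit(".", 1)
        payload = base64.urlsafe_b64decode(encoded.encode())
    except ValueError:
        return None  # malformed token
    # Constant-time comparison, done on bytes so odd input can't raise.
    if not hmac.compare_digest(signature.encode(), _sign(payload)):
        return None  # tampered with, or not issued by us
    email, _, issued = payload.decode().rpartition("|")
    current = time.time() if now is None else now
    if current - int(issued) > TOKEN_LIFETIME_SECONDS:
        return None  # link is too old
    return email


# Hypothetical link you would put in the email:
link = "https://example.com/verify?token=" + make_token("user@example.com")
```

Until `verify_token` hands back an address, you don’t send that address anything else. That’s the whole trick.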

But instead a remarkable number of places just let people type some stuff that looks like an email address into a web form and then just use it.

And I don’t get it. Presumably you’re collecting user emails because you want to be able to contact them about whatever service you’re providing them, and probably also send them marketing. And if they put an email in that isn’t correct you can’t do either. I mean, if they somehow put in a fake or misspelled address that happens to turn out to be valid, I guess you can still send that address stuff, but it’s not like the person at the other end of that is going to be receptive.

Okay great, but, ummmmmm, why do you bring this up?

I’m glad you ask! I mention this because there are at least three people out there in the world that keep misspelling their email addresses as mine. Presumably their initials are close to mine, and they have similar names, and they decomposed their names into an available gmail address in a manner similar to how I did. Or even worse—I was early to the gmail party, so I got an address with no numbers, maybe these folks got

My last name is one that came into existence because someone at Ellis Island didn’t care to decipher my great-grandfather’s accent and wrote down something “pretty close.” As a side effect of this, I’ve personally met every human that’s ever had that last name, at least among those I’m related to. I suspect this name was a fairly common Ellis Island shortcut, however, since there are a surprising number of people out there with the same last name whom I’ve never heard of and am not related to.

But so the upshot is that I keep getting email meant for other people. Never anything personal, never anything I could respond to, but spam, or newsletters, or updates about their newspaper account.

I’ve slowly built up a mental image of these people. They all seem older, two midwest or east coast, one in Texas.

One, though, has been on a real spree the last year or so. I think he’s somewhere in the greater Chicago area. He signed up for news from Men’s Wearhouse, he ordered a new cable install from Spectrum Cable. Unlike previous people, since this guy started showing up, it’s been a deluge.

And what do you do? I unsubscribe when I can, but that never works. But I don’t just want to unsubscribe, I want to find a third party to whom I can respond and say “hey, can you tell that guy that he keeps spelling his email wrong?”

The Spectrum bills drive me crazy. There were weeks where he didn’t “activate his new equipment”, and I kept shaking my head thinking, yeah, no wonder, he’s not getting the emails with the link to activate in them. He finally solved this problem, but now I get a monthly notification that his bill is ready to be paid. And I know that Spectrum has his actual address, and could technically pass a message along, but there is absolutely no customer support flow to pass a message along that they typed their email wrong.

So, delete, mark as spam, unsubscribe. Just one more thing that clogs up our brief time on Earth.

And then, two weeks ago, I got a google calendar invite.

The single word “counseling” was the meeting summary. No body, just a google meet link. My great regret was that I didn’t see this until after the time had passed. It had been cancelled, but there it was. Sitting in my inbox. Having been sent from what was clearly a personal email address.

Was this it? The moment?

I thought about it. A lot. I had to try, right?

After spending the day turning it over in my head, I sent this email back to the person who was trying to do “counseling”:

Hello!

This is a long shot, but on the off chance that someone gave you this address rather than it being a typo, could you please tell whomever you got it from to please be more careful entering their email? I've been getting a lot of emails for someone else recently that are clearly the result of someone typing their email wrong and ending up typing mine by mistake. While I can happily ignore the extra spam, I suspect that person would rather be the one receiving the emails they signed up for? Also, their cable bill is ready.

If you typoed it, obviously, no worries! Hope you found the person you meant to send that to.

In any case, have a great weekend!

I never got a response.

But the next day I got an email telling me my free trial for some business scheduling software was ready for me to use.